- 1 Intro to Systems Programming

- 2 Memory

- 3 Intro to C

- 3.0.1 C constructs [if you’re familiar with Java, Python, etc.]

- 3.0.2 Compound types

- 3.0.3 Unix Manual (

man) pages - 3.0.4 Header Files

- 3.0.5 Some Common C libraries

- 3.0.6 Basic Types

- Code Samples

- 3.0.7 Compound types |

struct,enum,unions - Code Samples

- So what is a

union? - Code Samples

- 3.1 Intermediate C

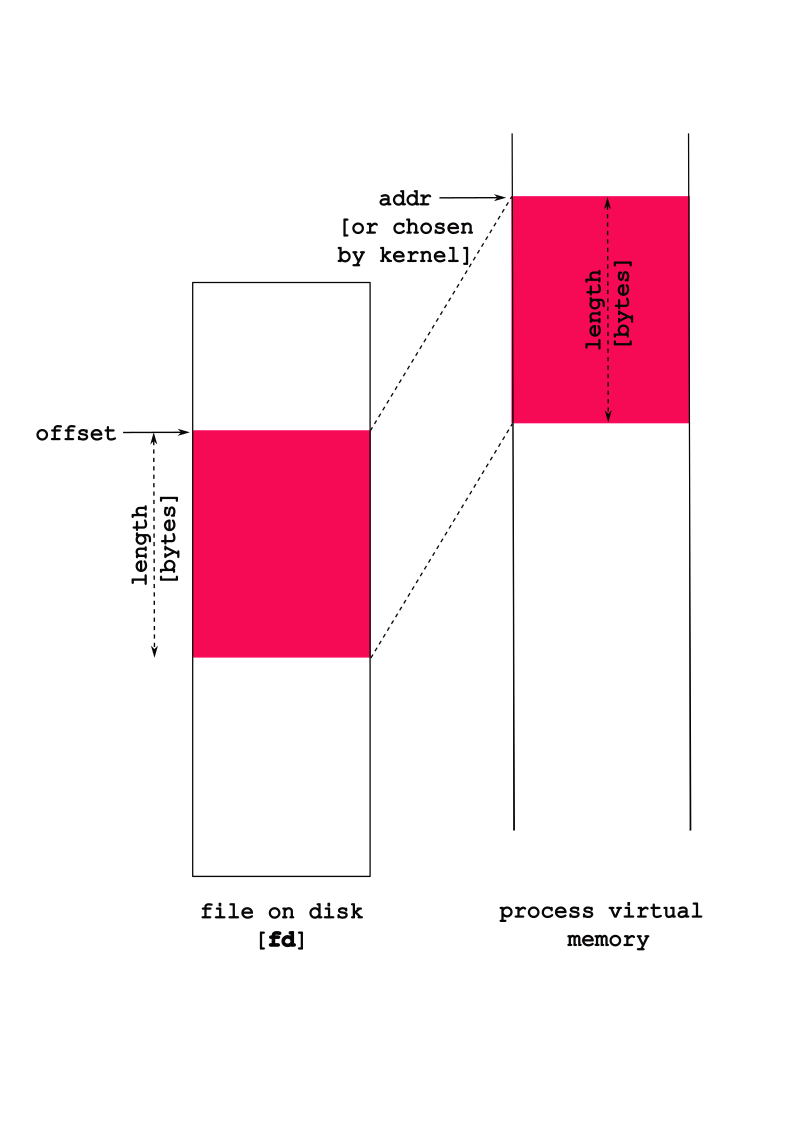

- 3.2 Objectives

- 3.3 Types

- 4 Pointers and Arrays

- 5

CScoping Rules - 6 Function Pointers

- 7 C Memory Model, Data-structures, and APIs

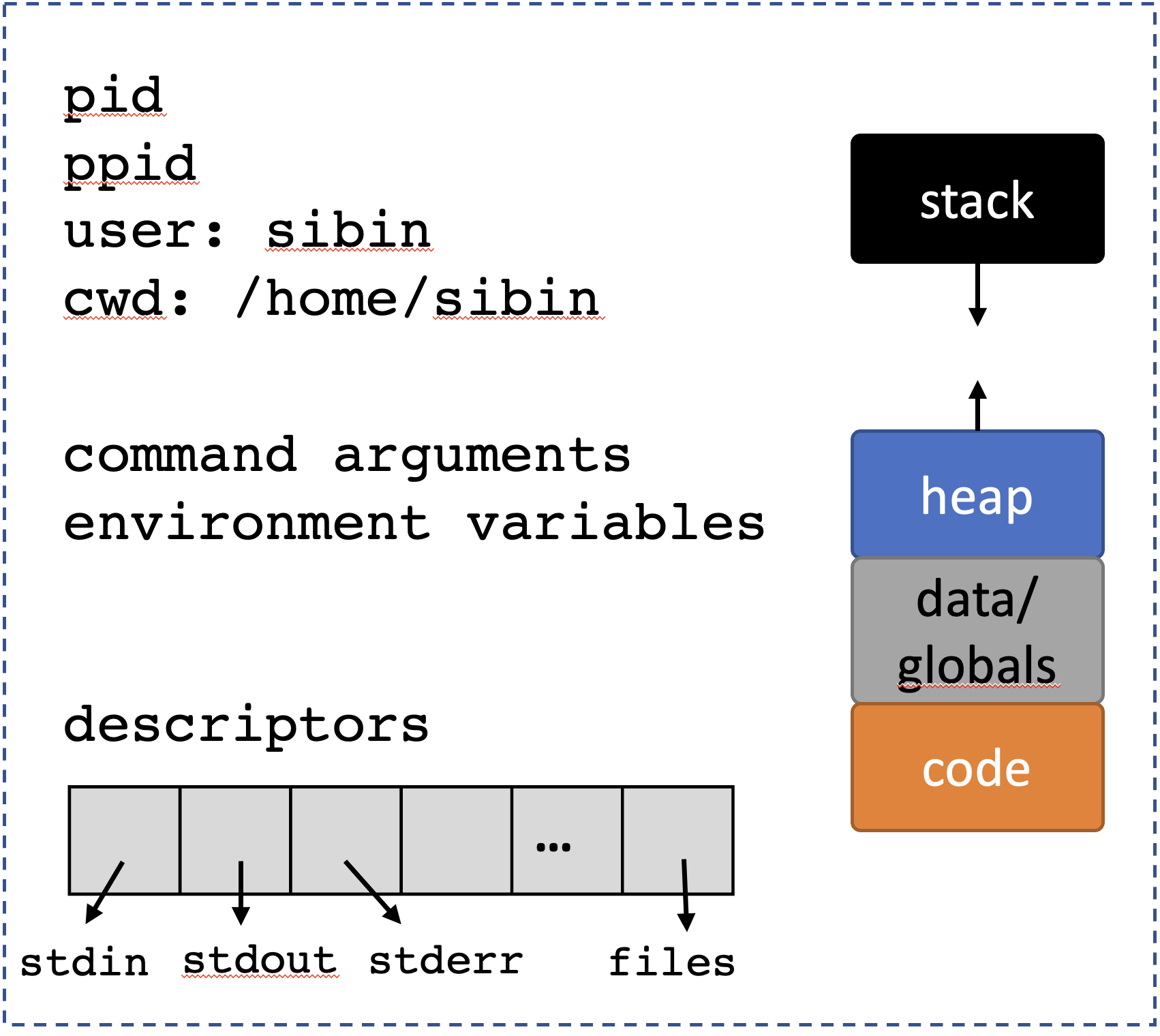

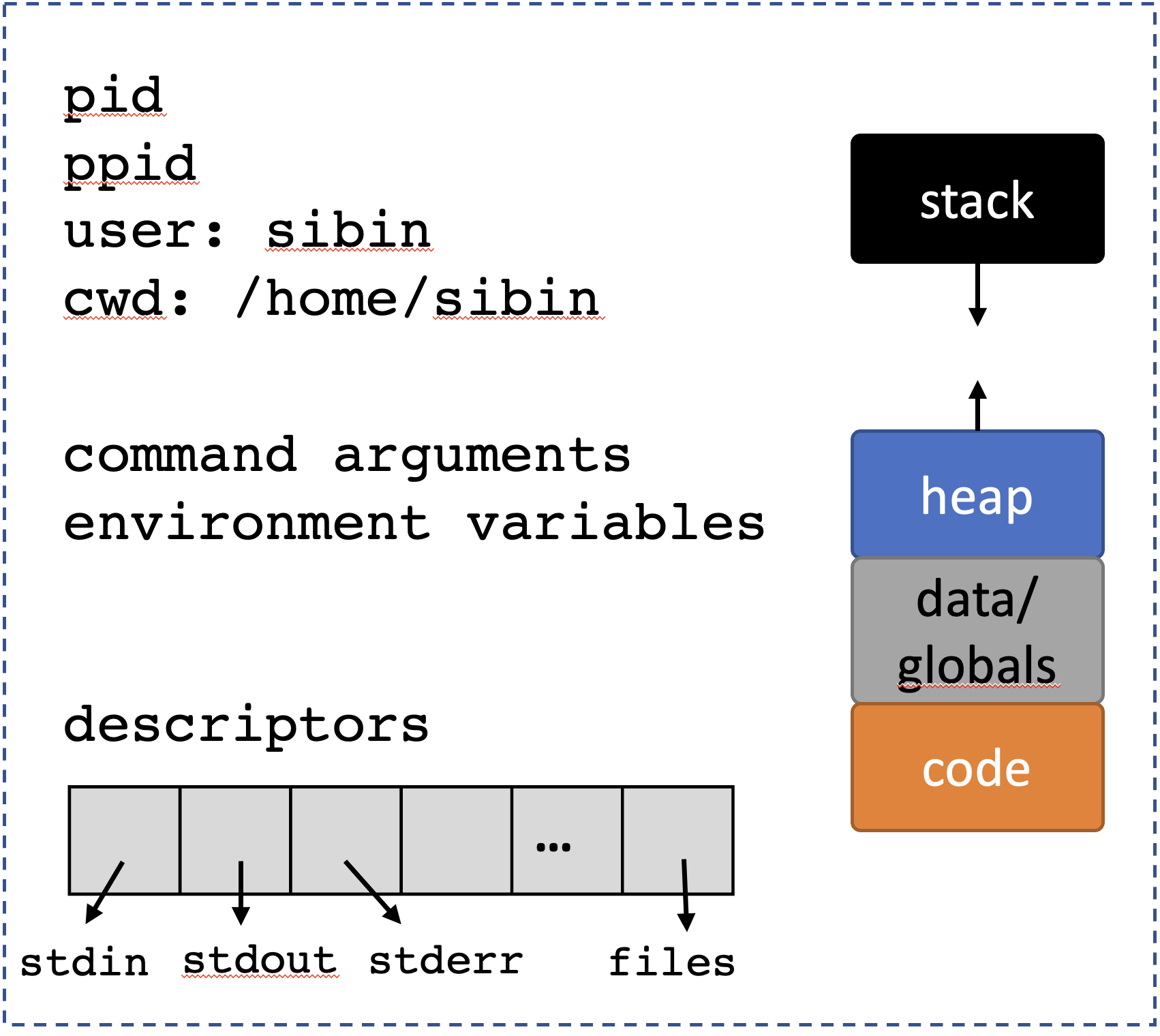

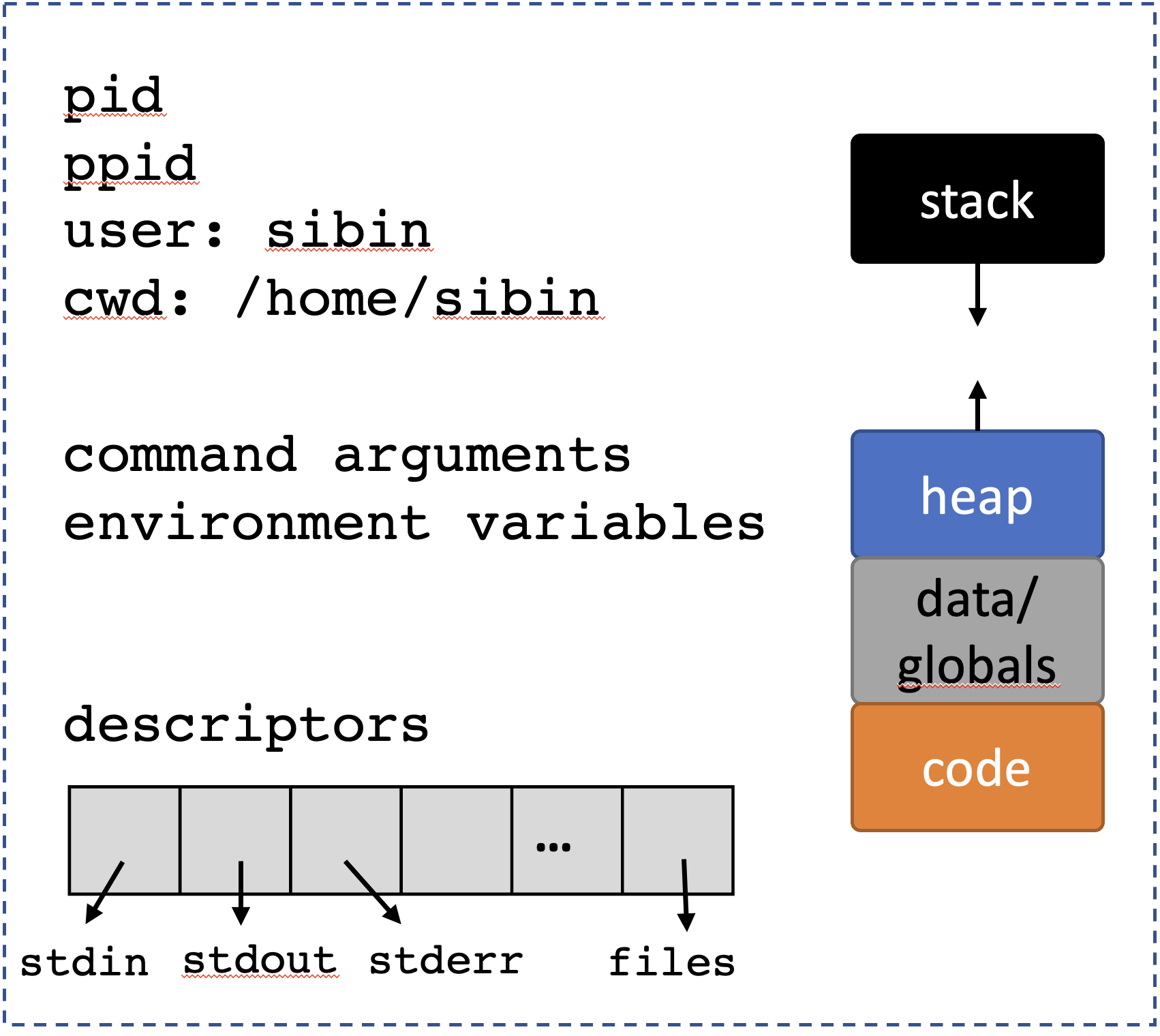

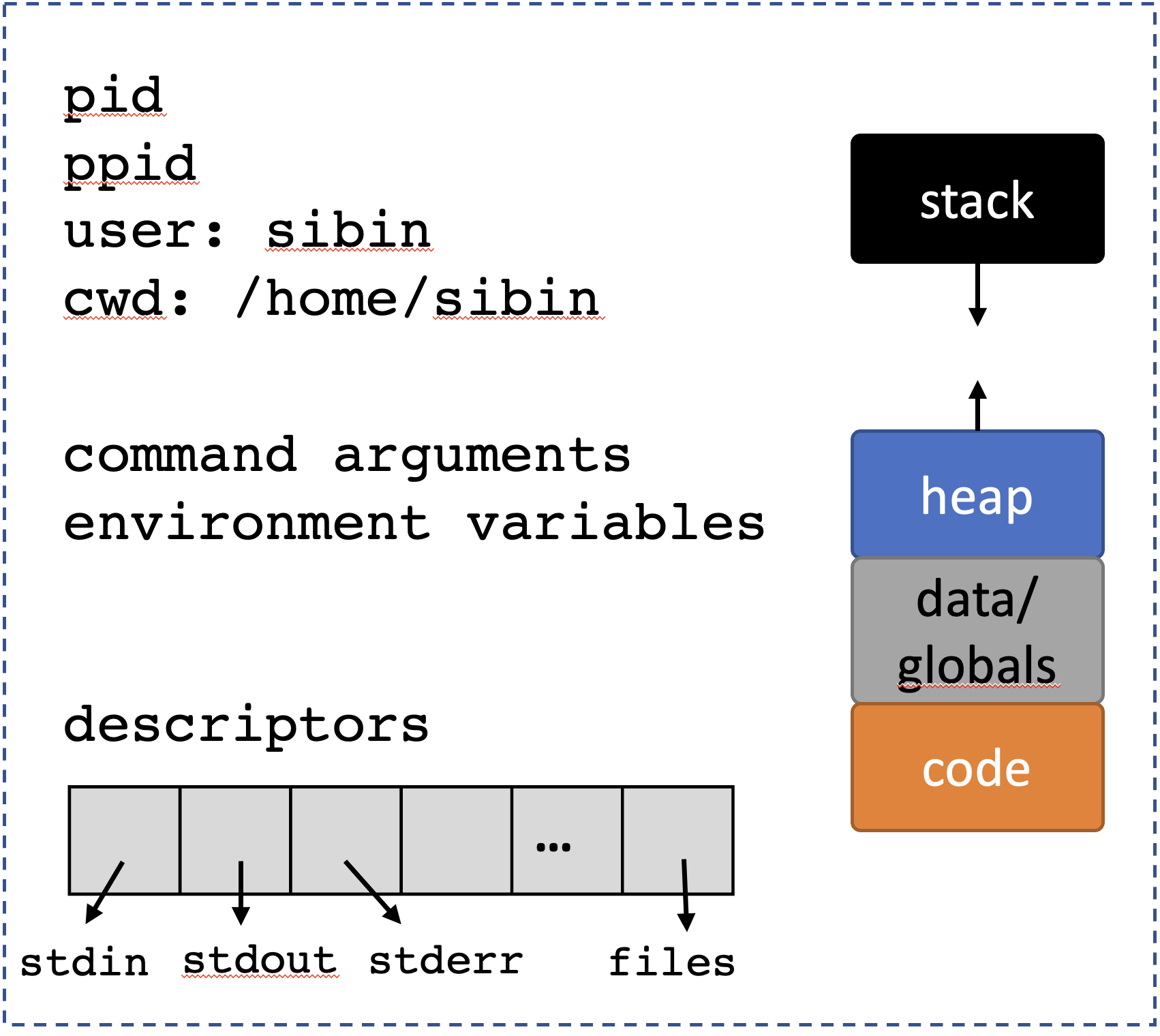

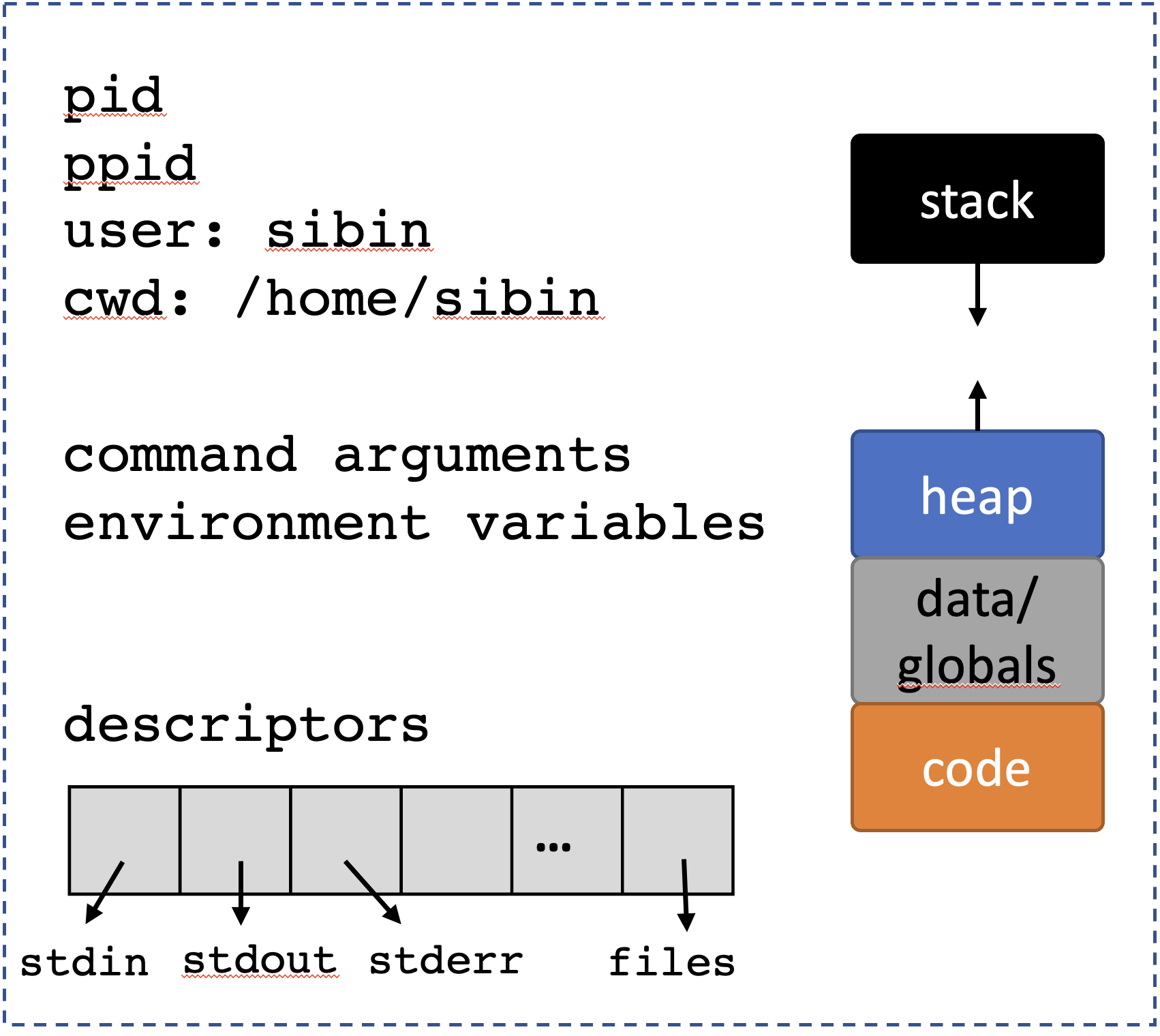

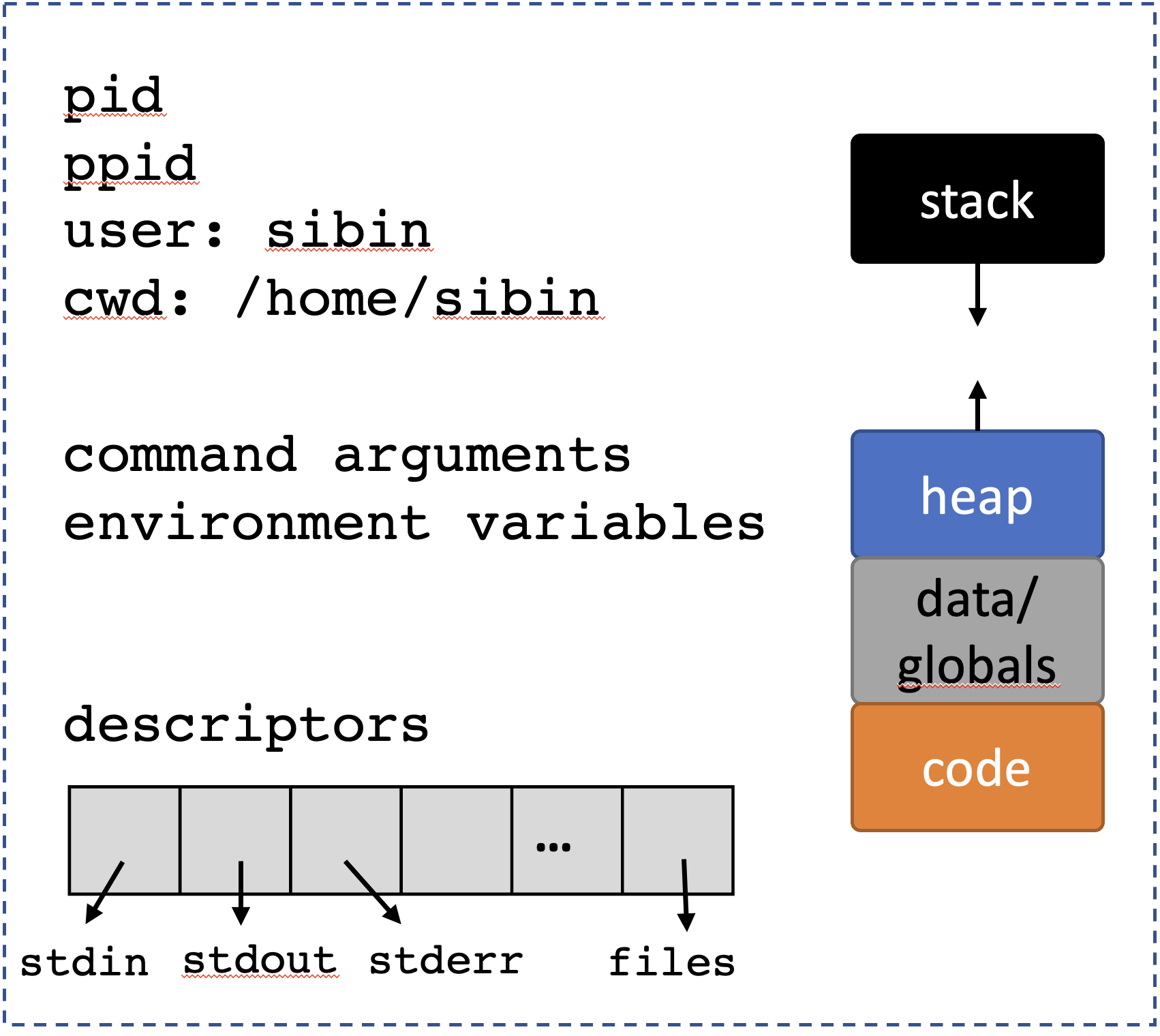

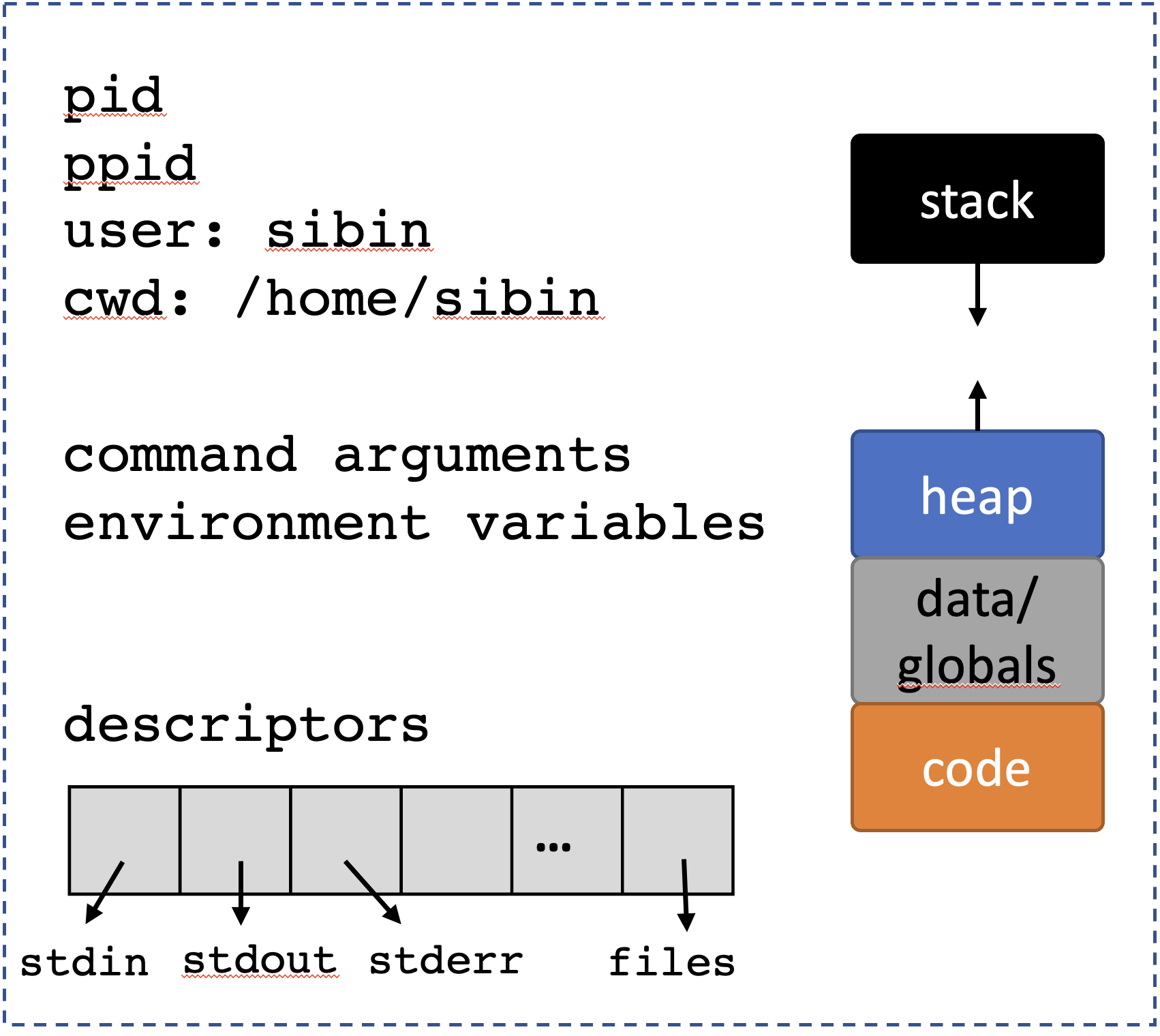

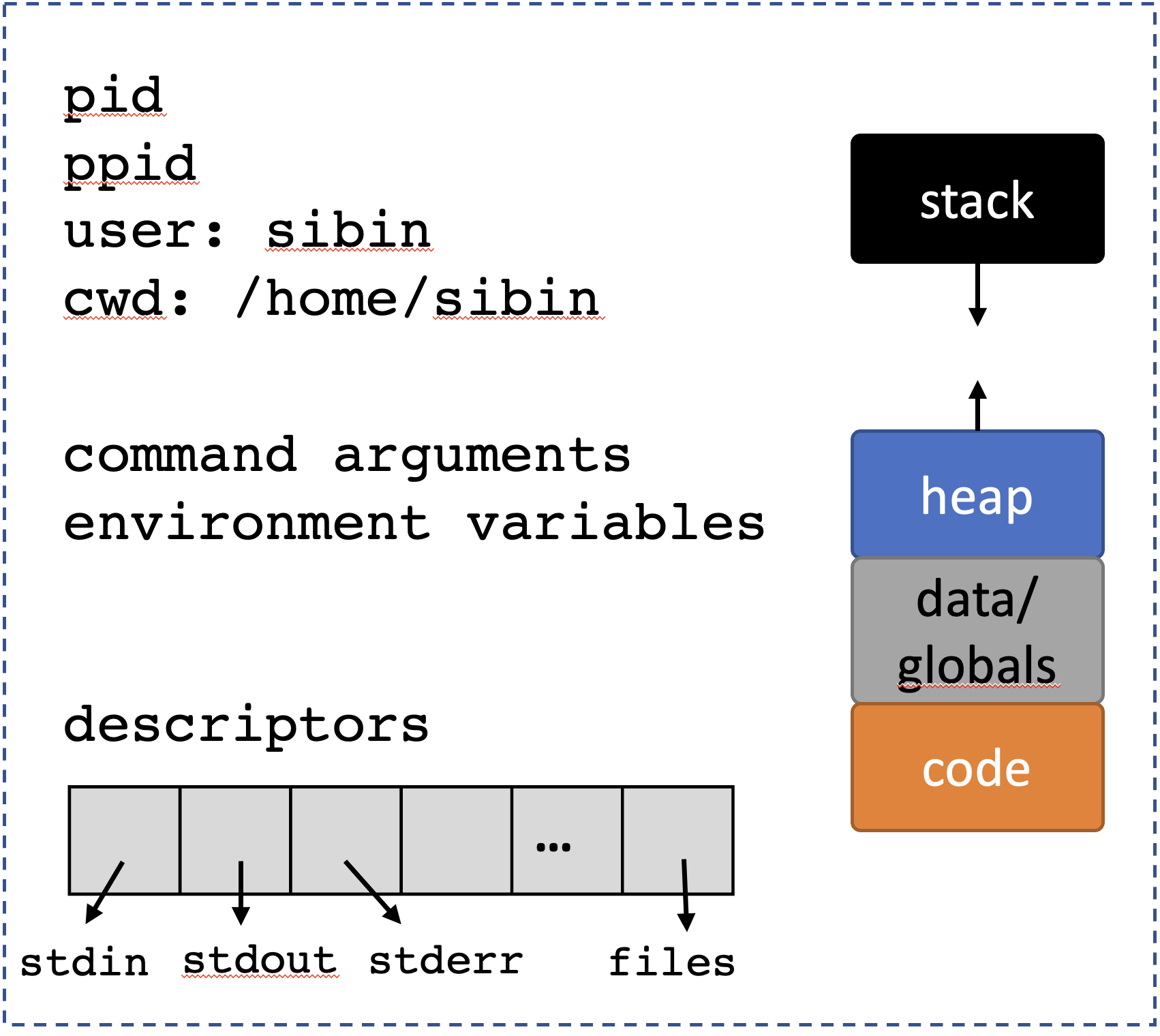

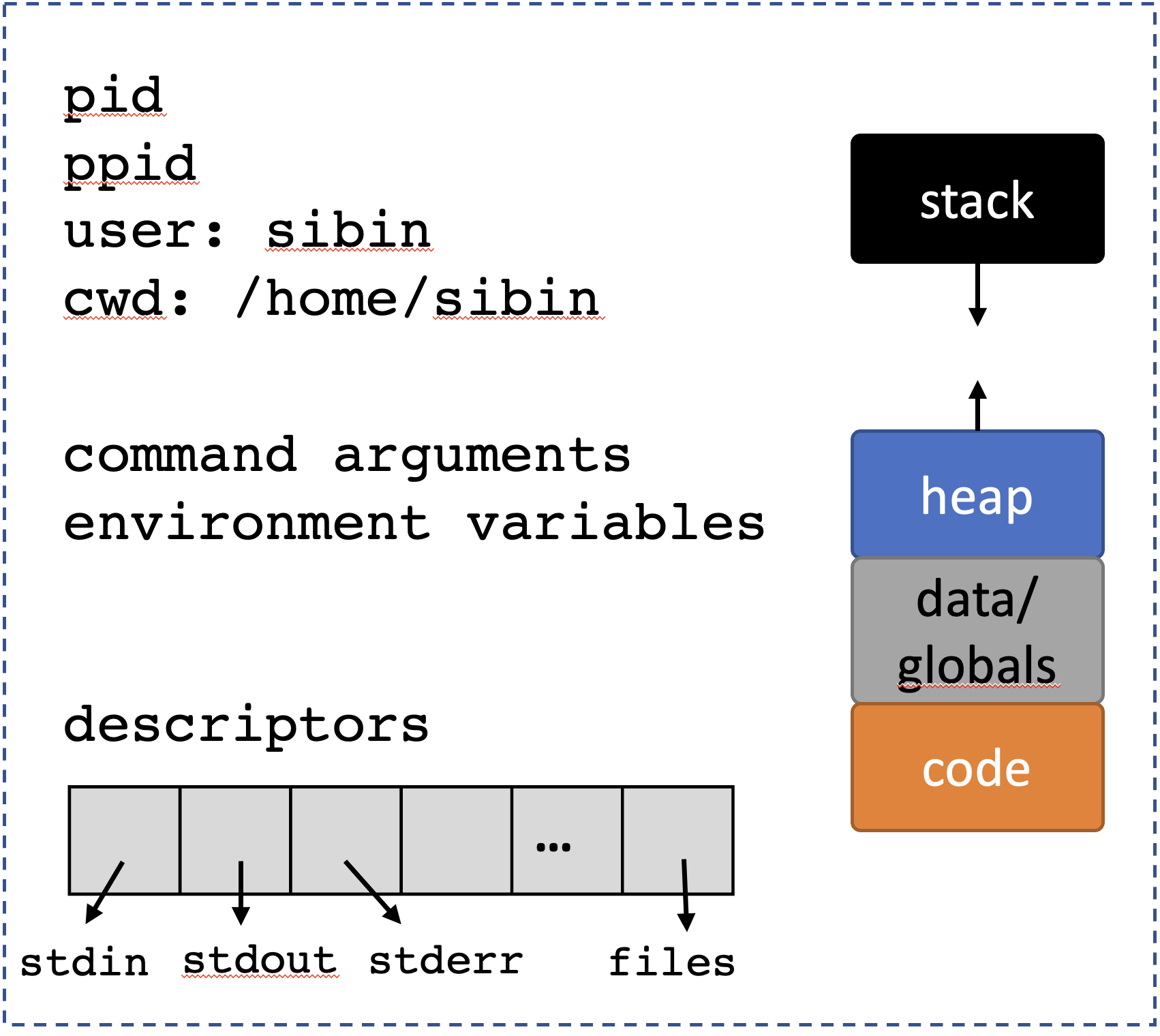

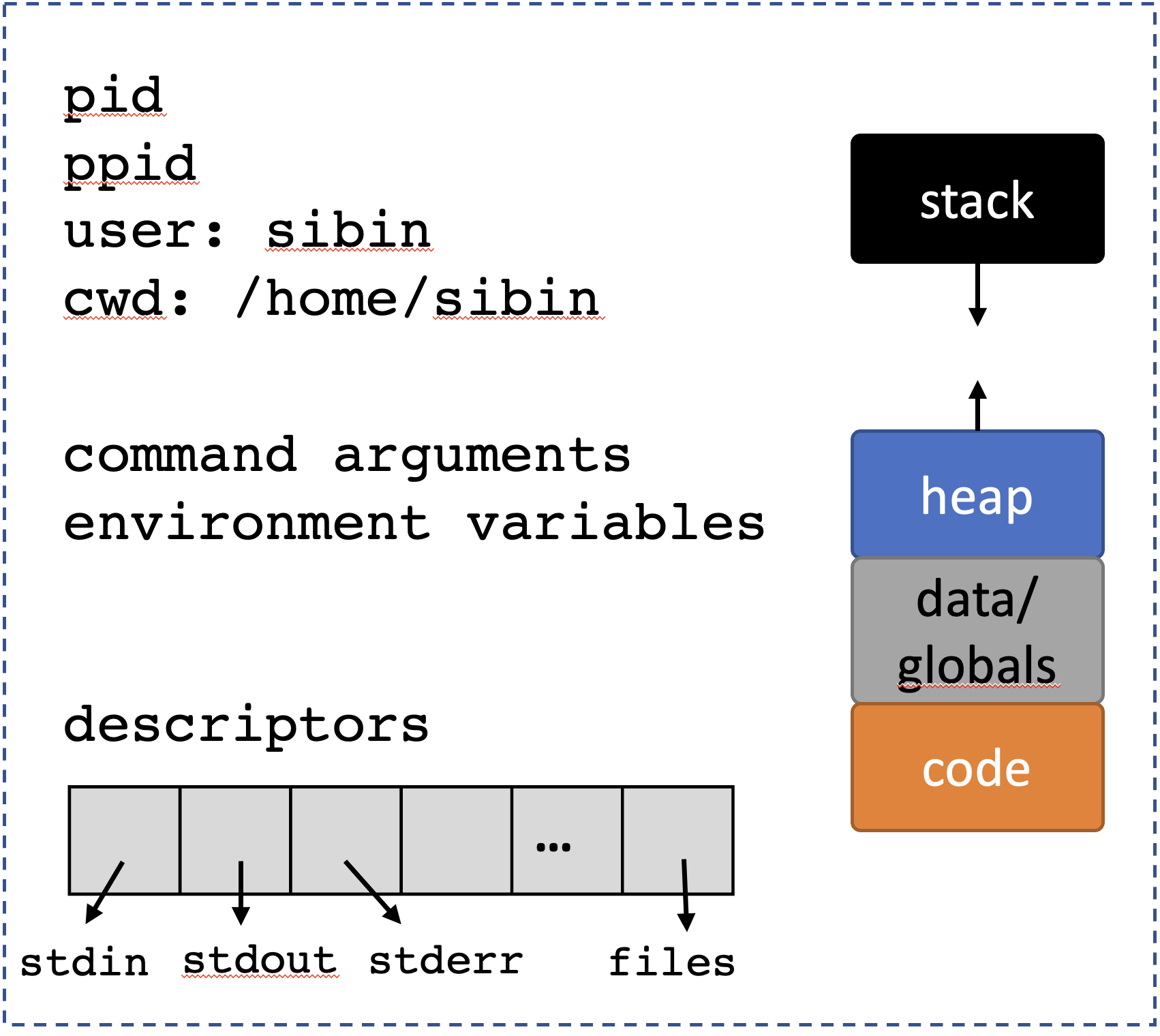

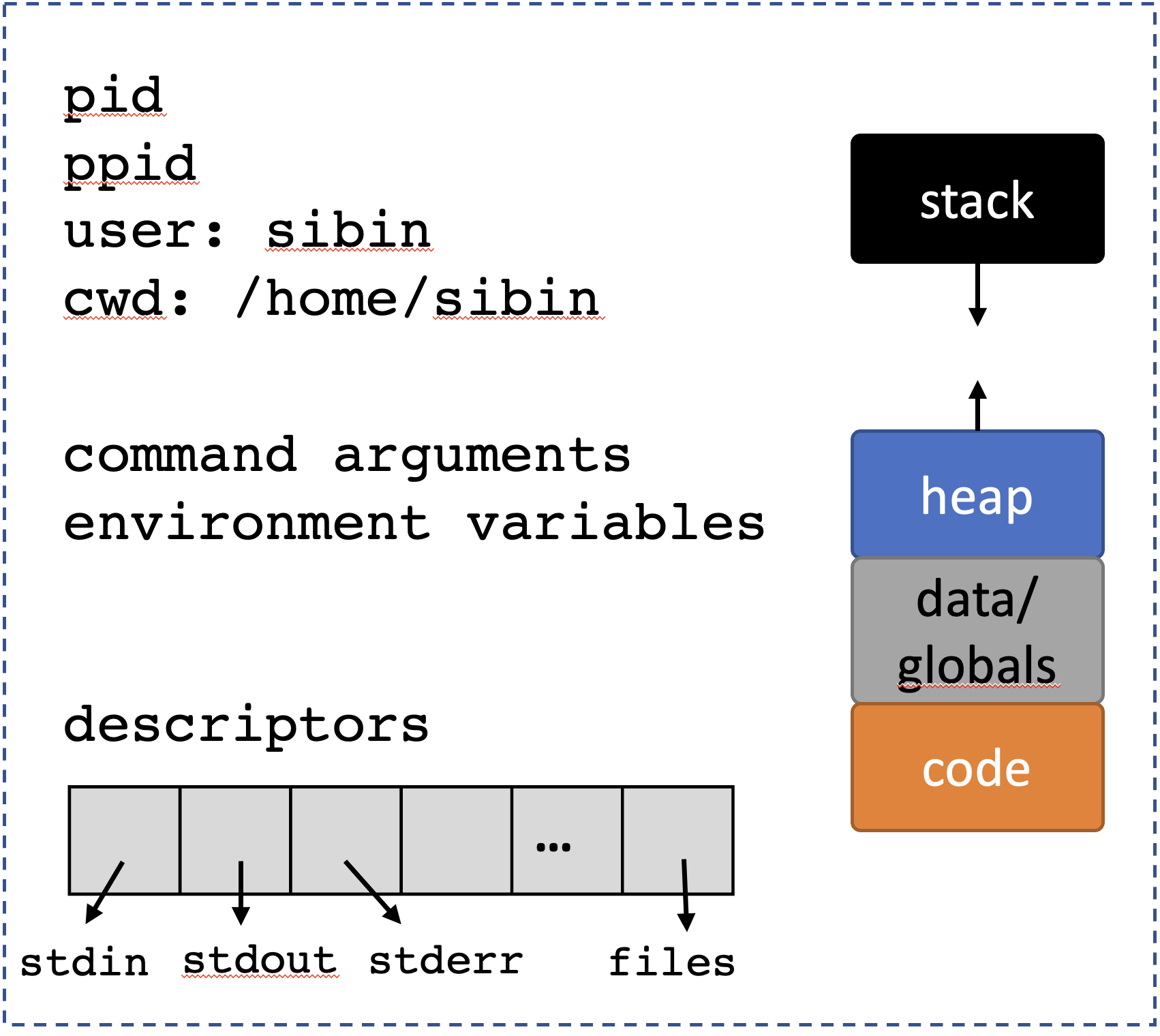

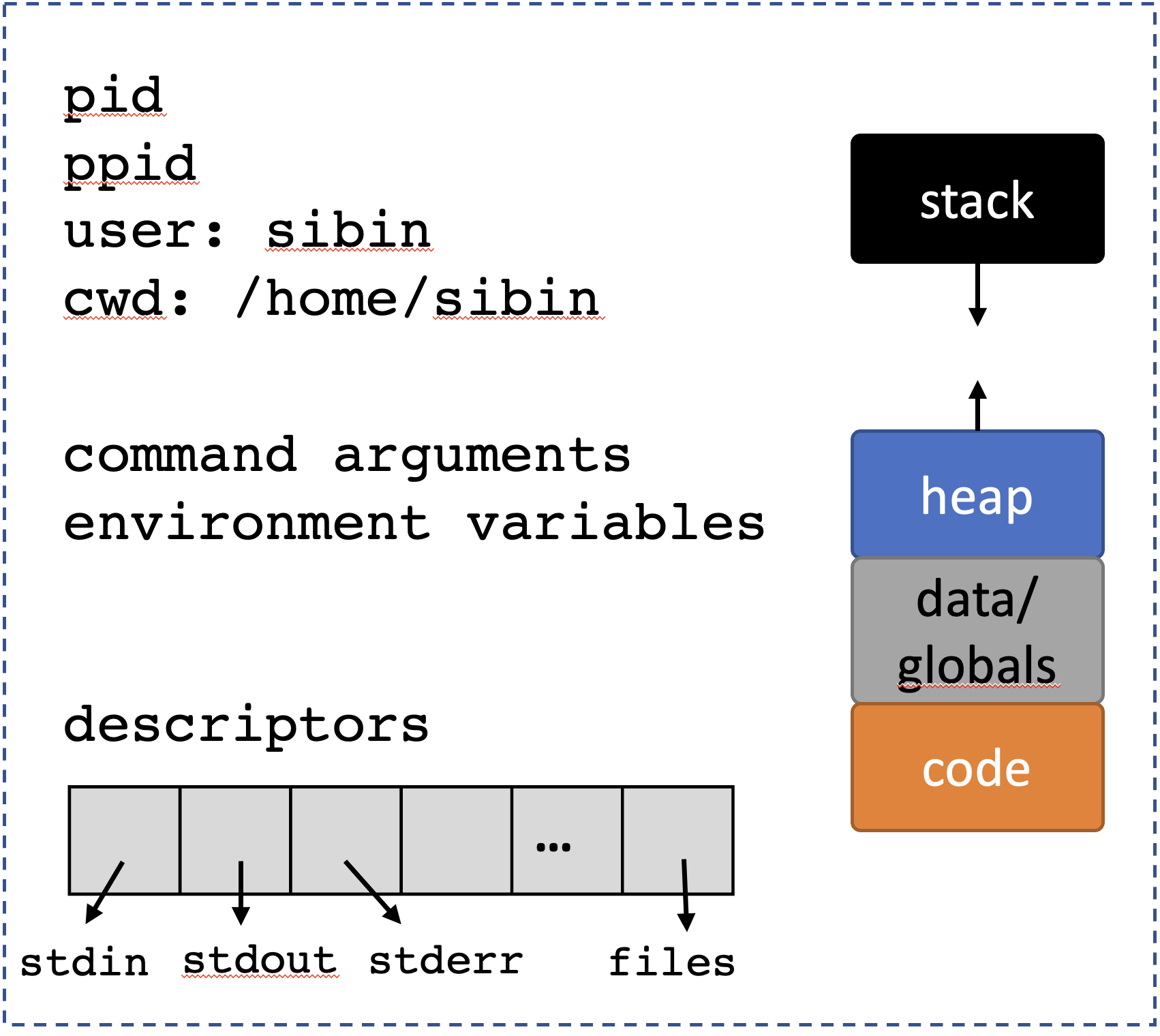

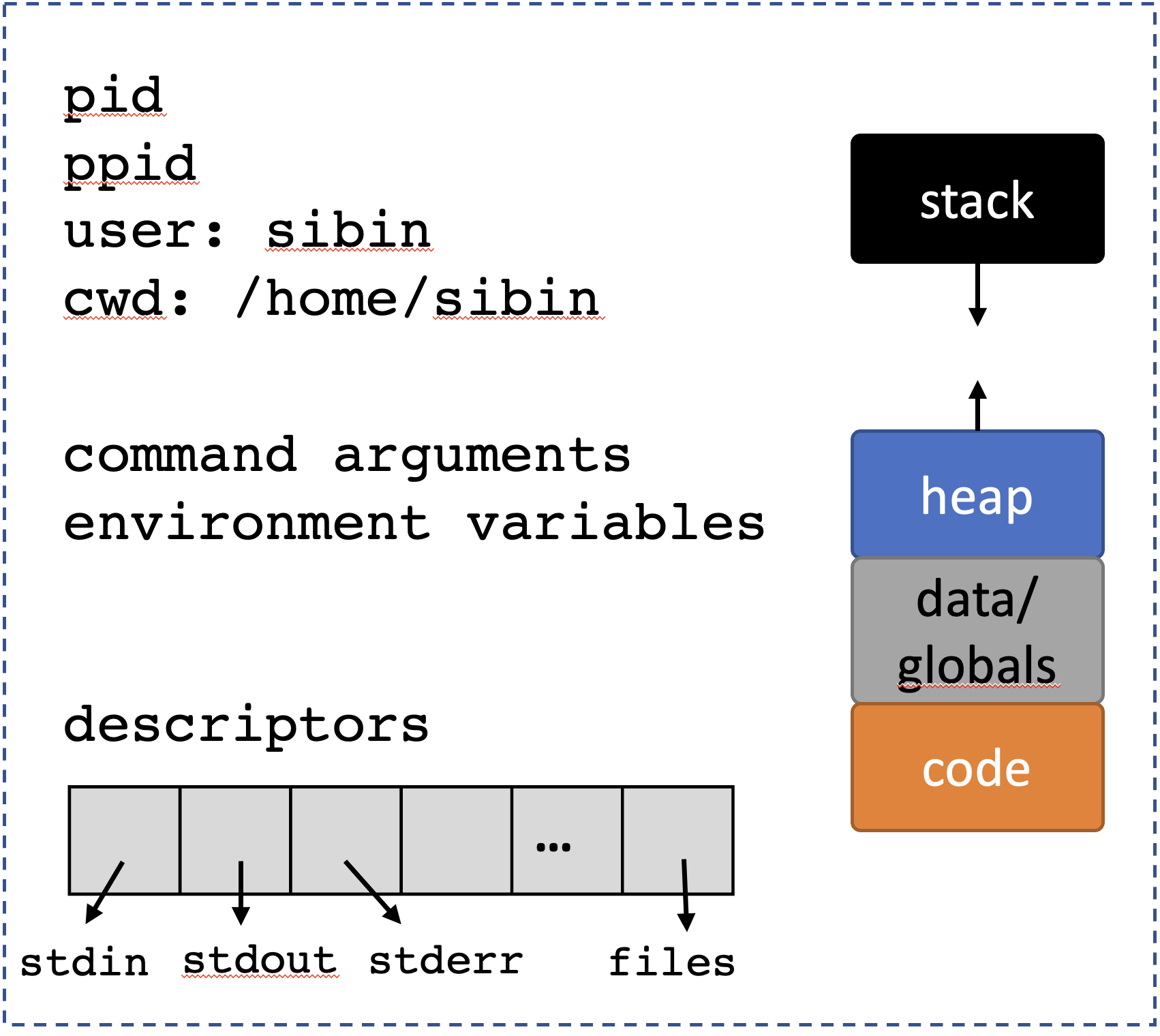

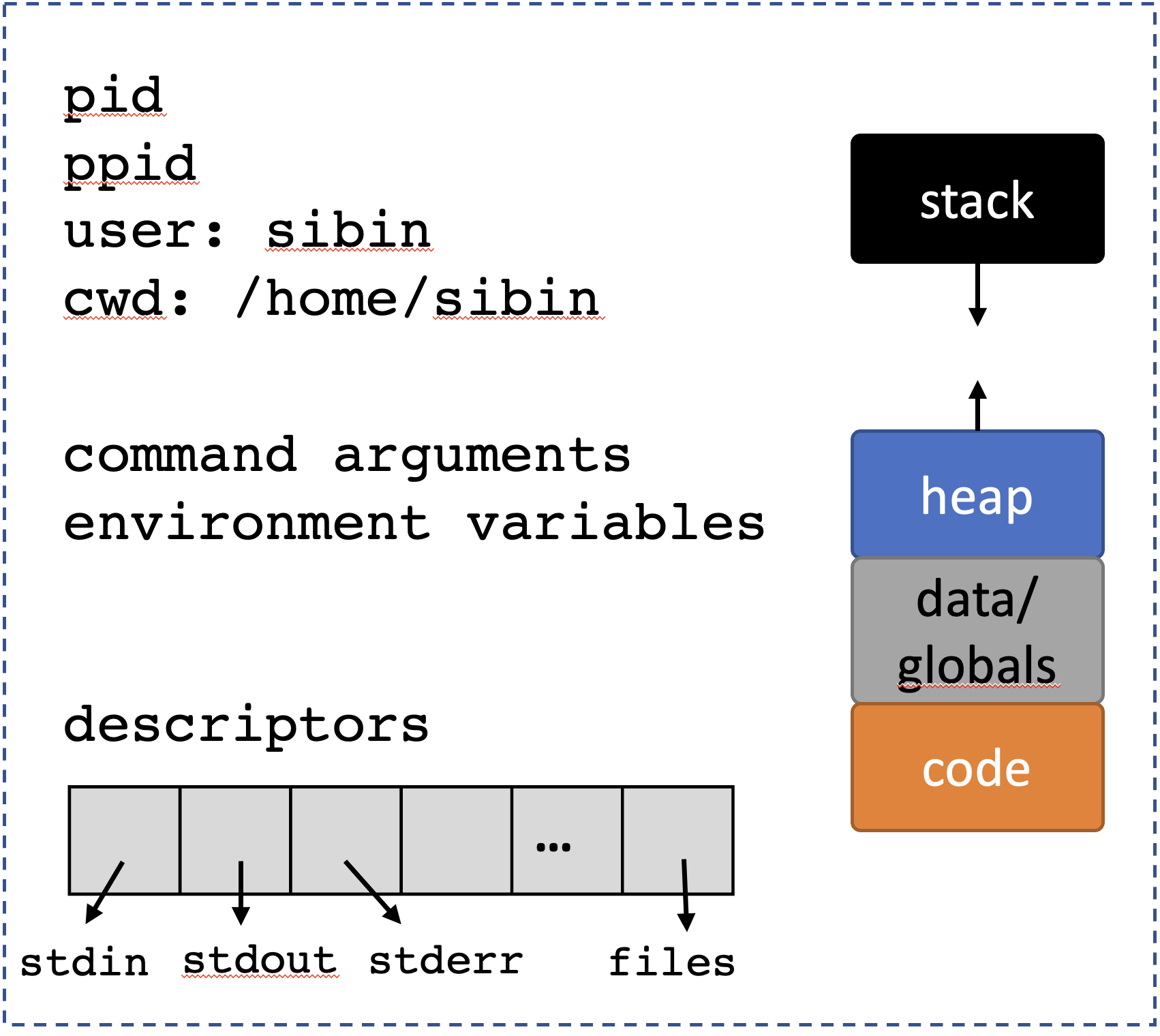

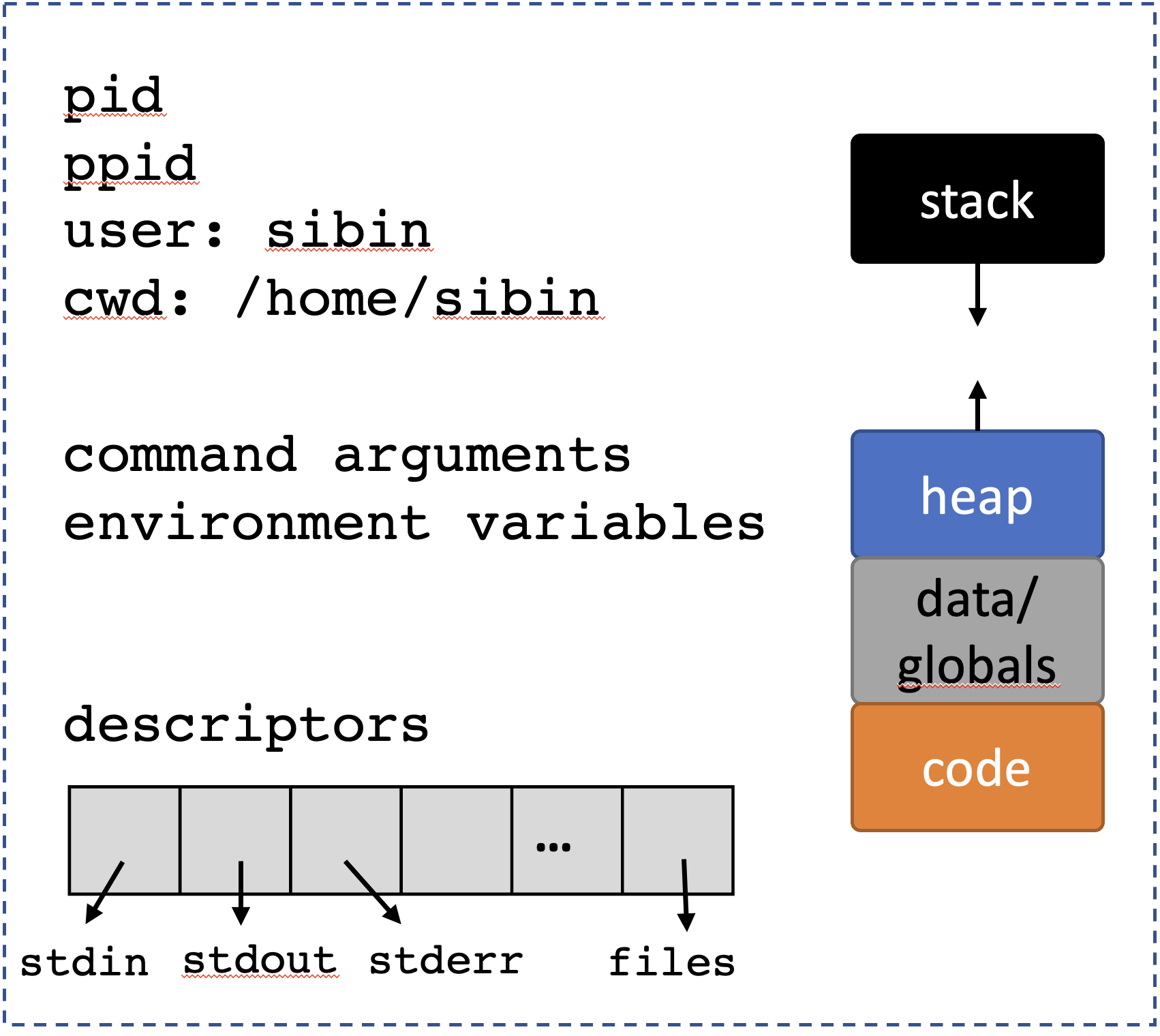

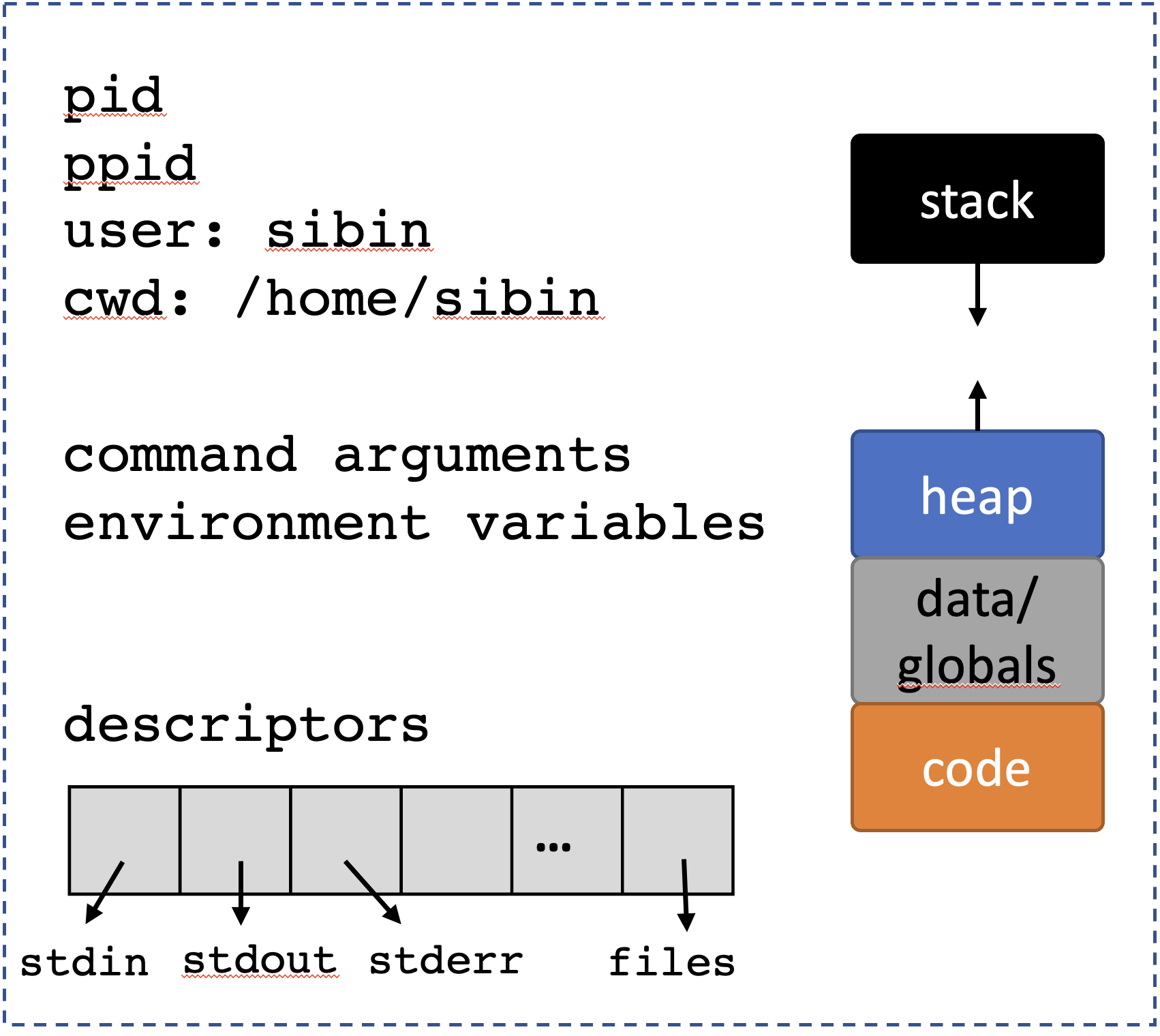

- 8 Processes

- 9 Process Descriptors

- 10 Files and File Handling

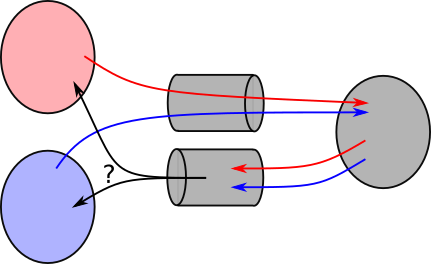

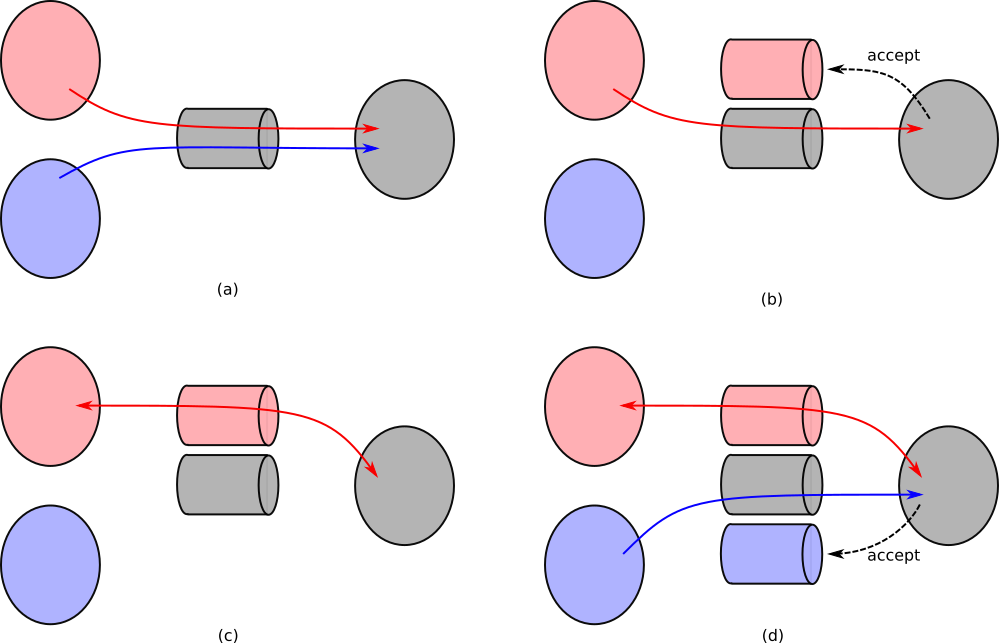

- 11 I/O: Inter-Process Communication

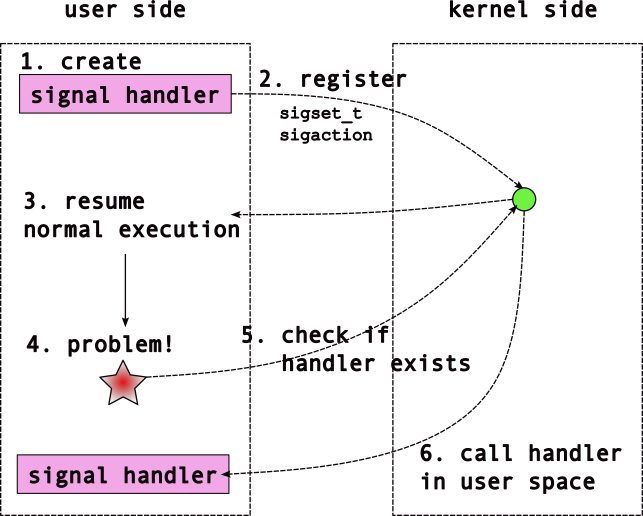

- 12 Reinforcing Ideas: Assorted Exercises and Event Notification

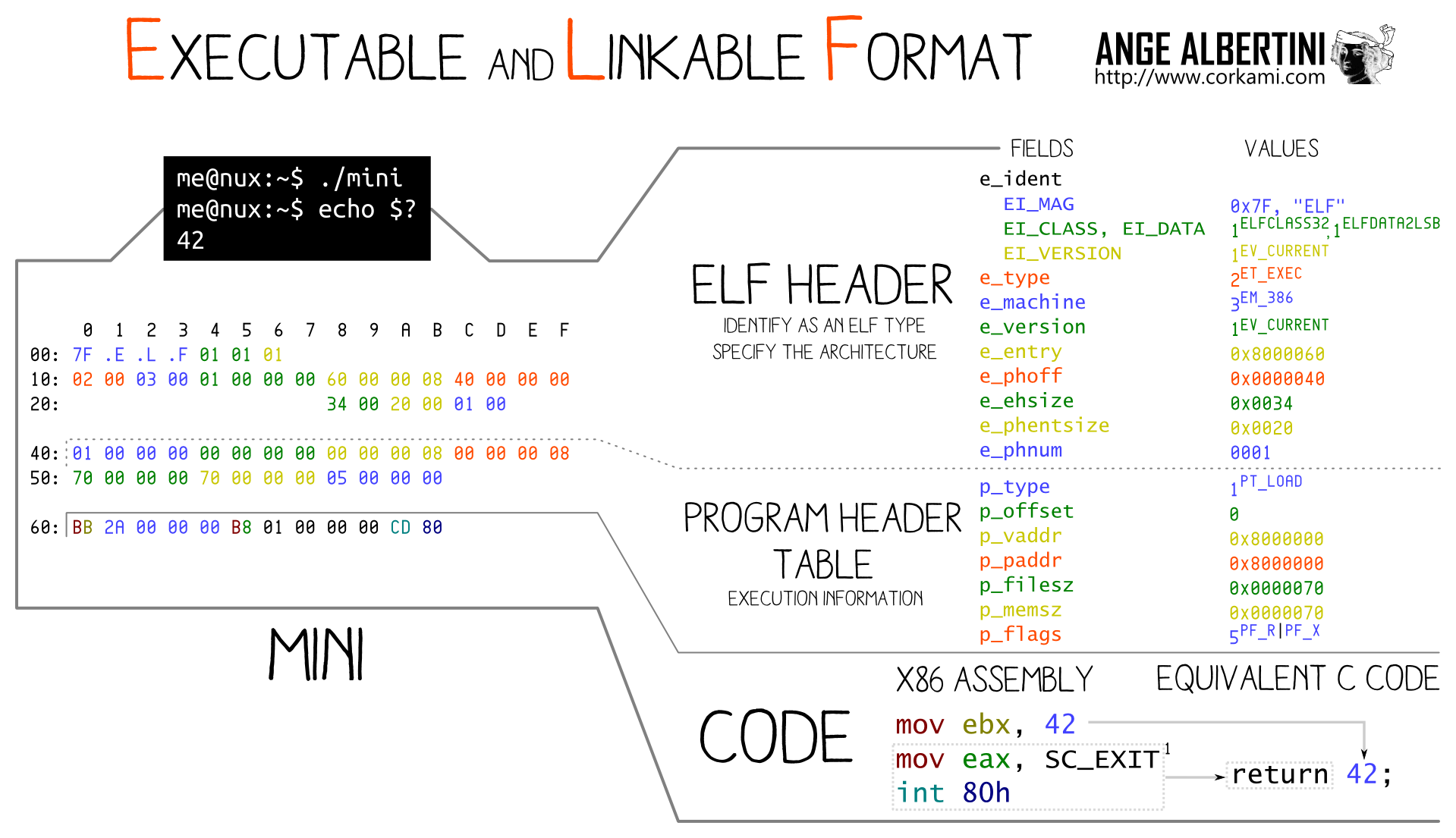

- 13 Libraries

- 14 Organizing Software with Dynamic Libraries: Exercises and Programming

- 15 System Calls and Memory Management

- 16 UNIX Security: Users, Groups, and Filesystem Permissions

- 17 Security: Attacks on System Programs

- 18 The Android UNIX Personality

- 19 UNIX Containers

- 20 Appendix: C Programming Conventions

1 Intro to Systems Programming

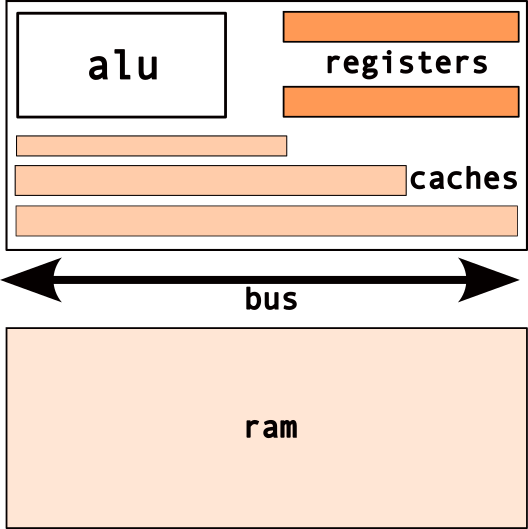

1.1 Computer Organization

Find the slides.

2 Memory

2.1 Computer memory

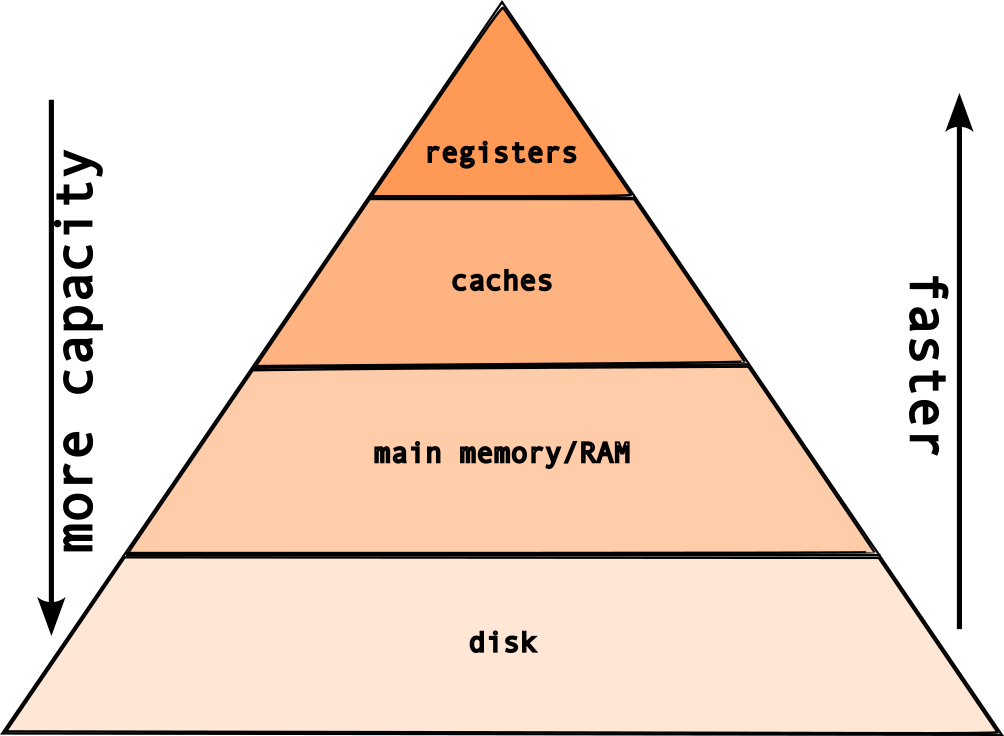

different shapes, sizes and speeds

- registers

- caches (L1, L2, L3)

- main memory/RAM

- disk (hard disks, flash)

2.1.1 Registers

- fastest form of memory

- this what the processor uses

- e.g.,

a = 3 + 4 ; 3and4→ loaded into registers

2.1.1.1 register “width” | defines “width” of cpu

- “32 bit processor” → 32-bit registers

- “64 bit processor” → 64-bit registers

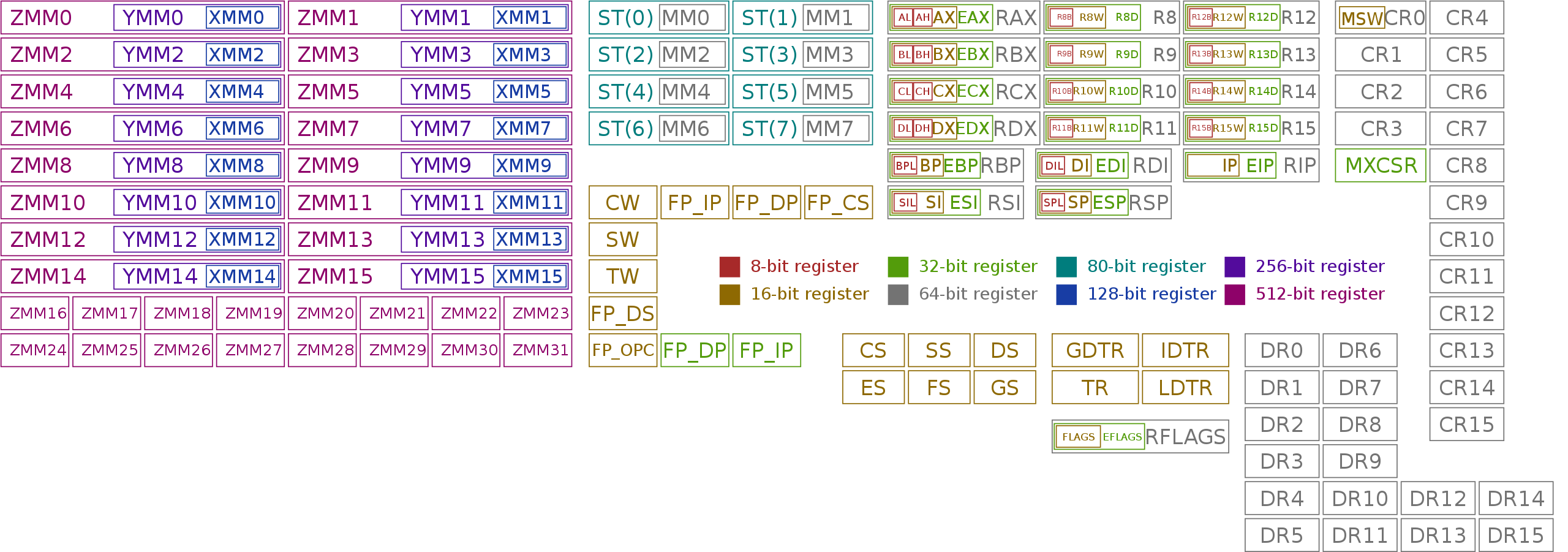

2.1.1.2 All (known) x86 registers

for the 64-bit architecture [source: wikipedia]

2.1.2 Important question: how much memory is necessary?

But before we answer that, we need to answer this question: why do we need memory?

2.1.3 Why do we need memory?

| component | (typical) sizes |

|---|---|

| programs/code | kilobytes to megabytes |

| temporary values | kilobytes to megabytes* |

| data | kilobytes to terabytes! |

[*depends on the program]

2.1.3.1 So, the memory hierarchy looks like this:

But, if the registers are the fastest type of memory, then why do we need these layers?

Because, registers are expensive!

The classic tradeoff: speed vs size! The faster the memory → more expensive!

2.2 memory layout

2.2.1 Caches

- fast, on-chip memory

- transparent to program

- used by cpu to improve performance

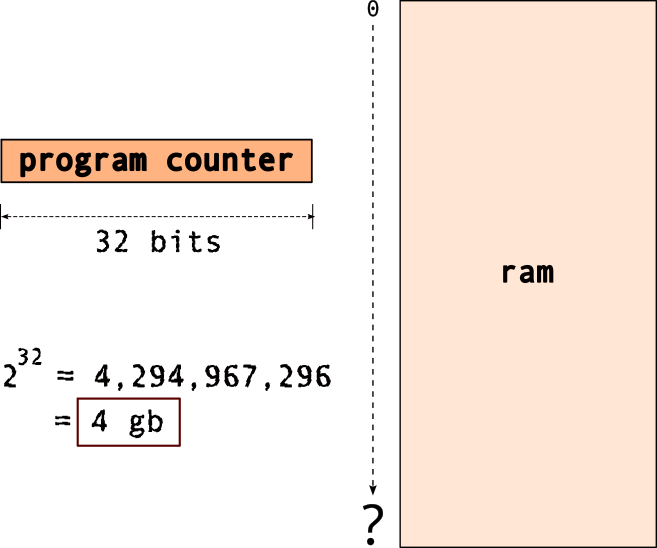

2.2.2 Main Memory

- random access memory [ram]

- typical → gigabytes range

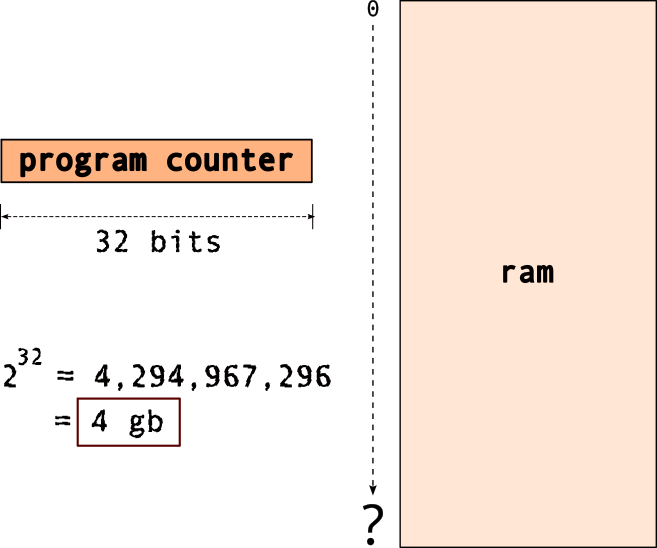

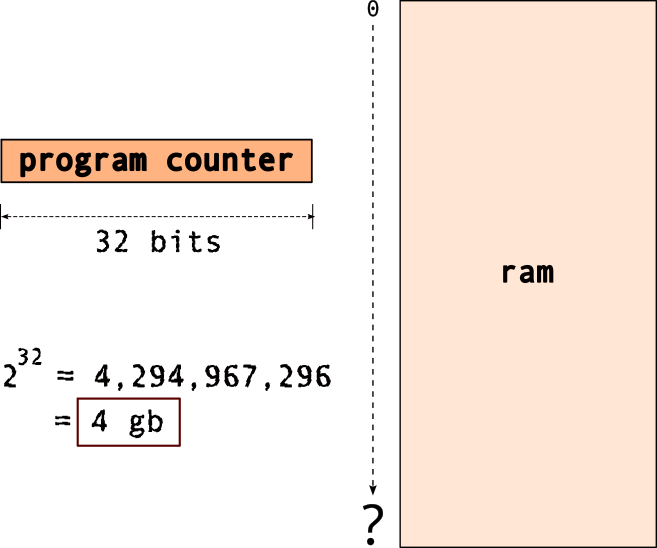

- limited by “width” of cpu/registers

- e.g.

32 bit cpu → max4 gb memory!

Wait, what’s with the “register width”, “memory”…

Memory Addressing:

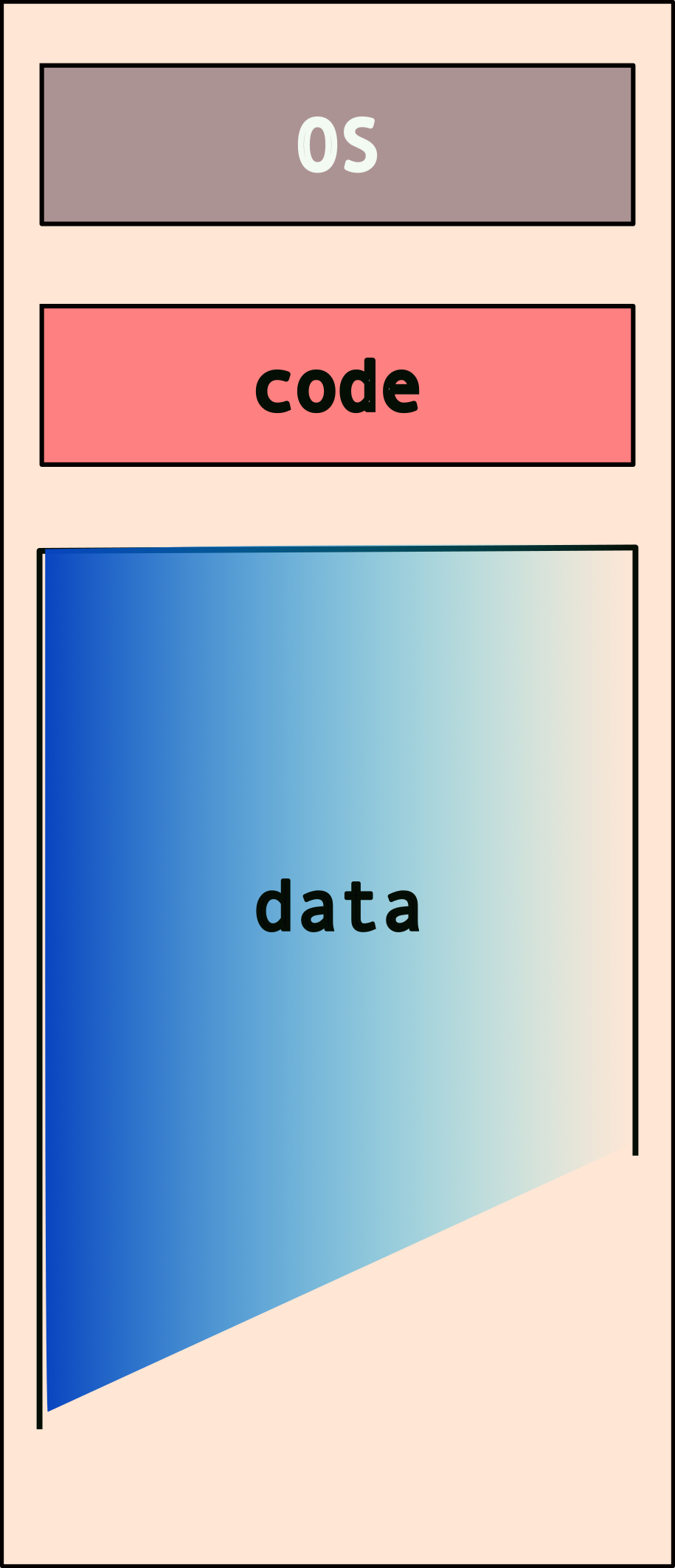

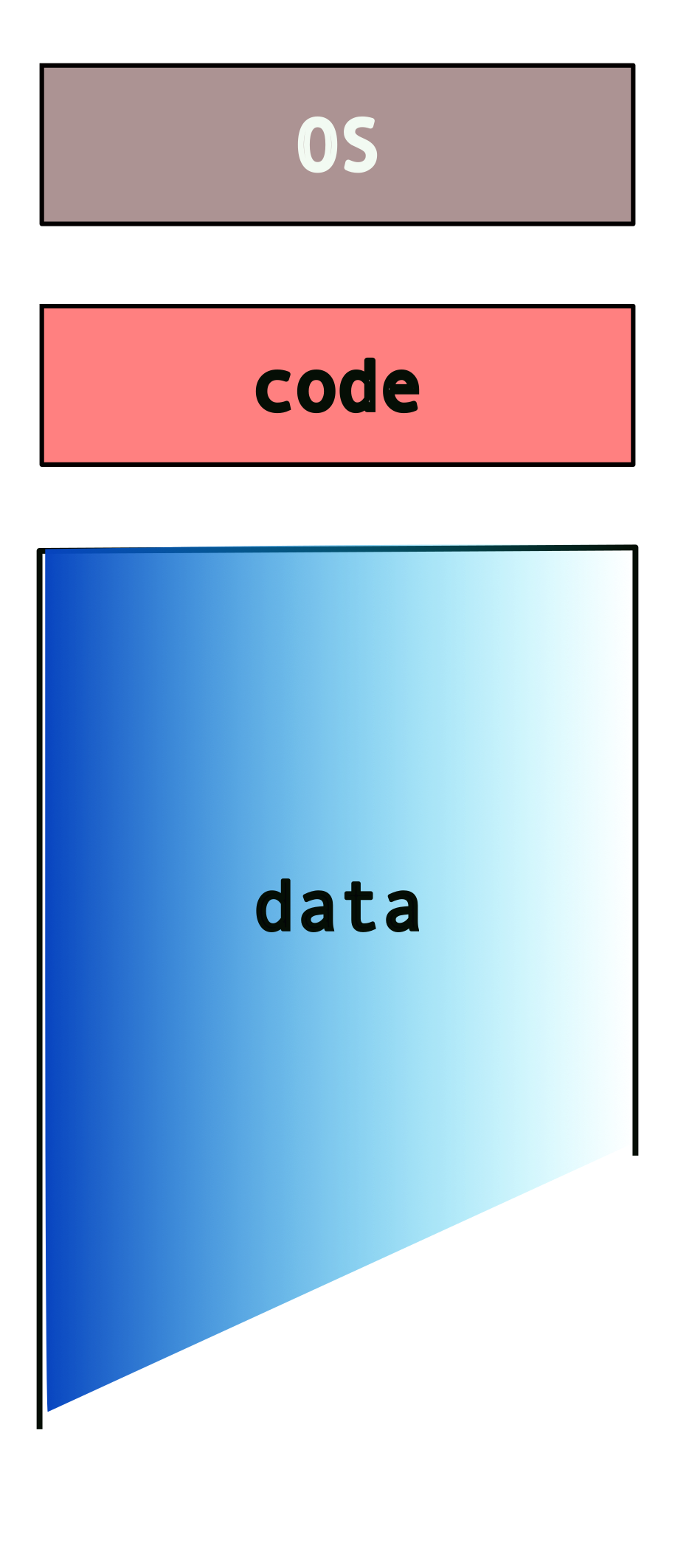

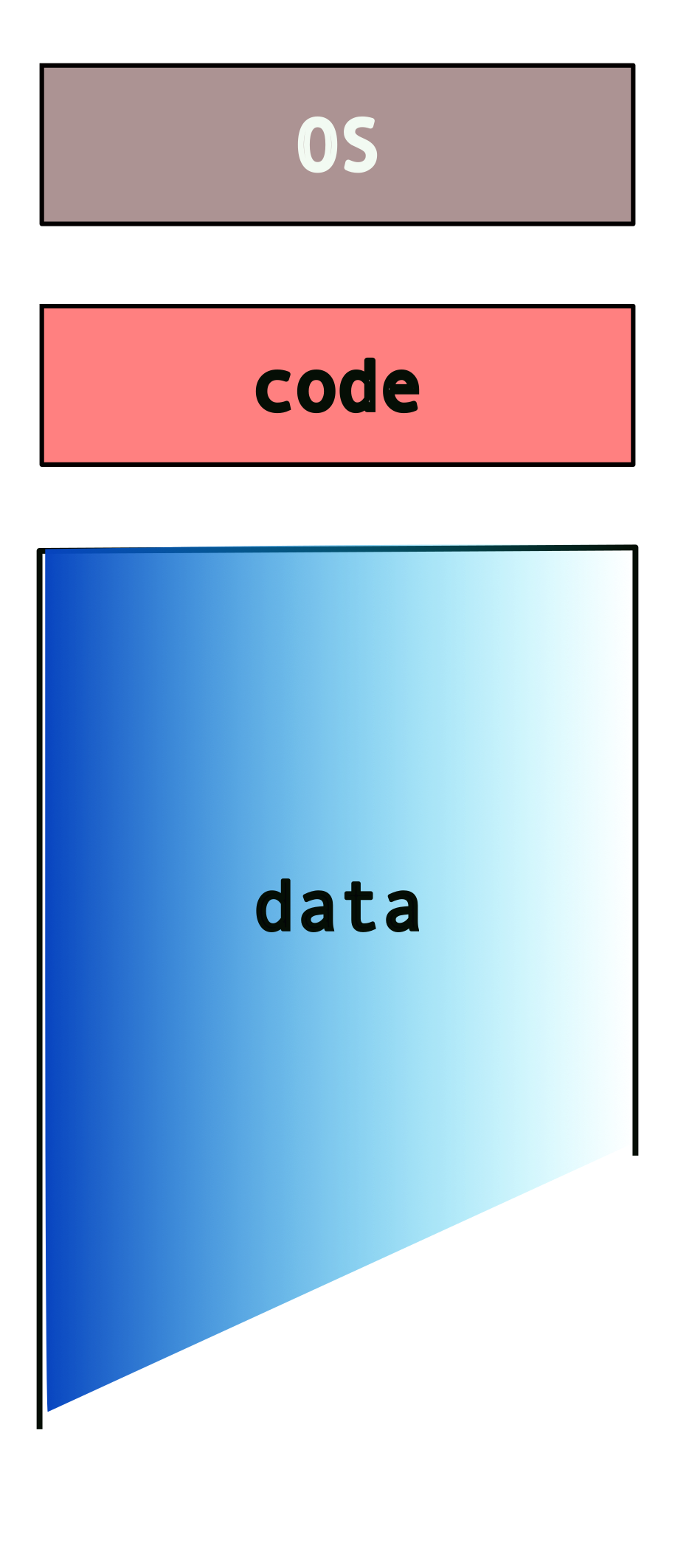

But what goes into this memory?

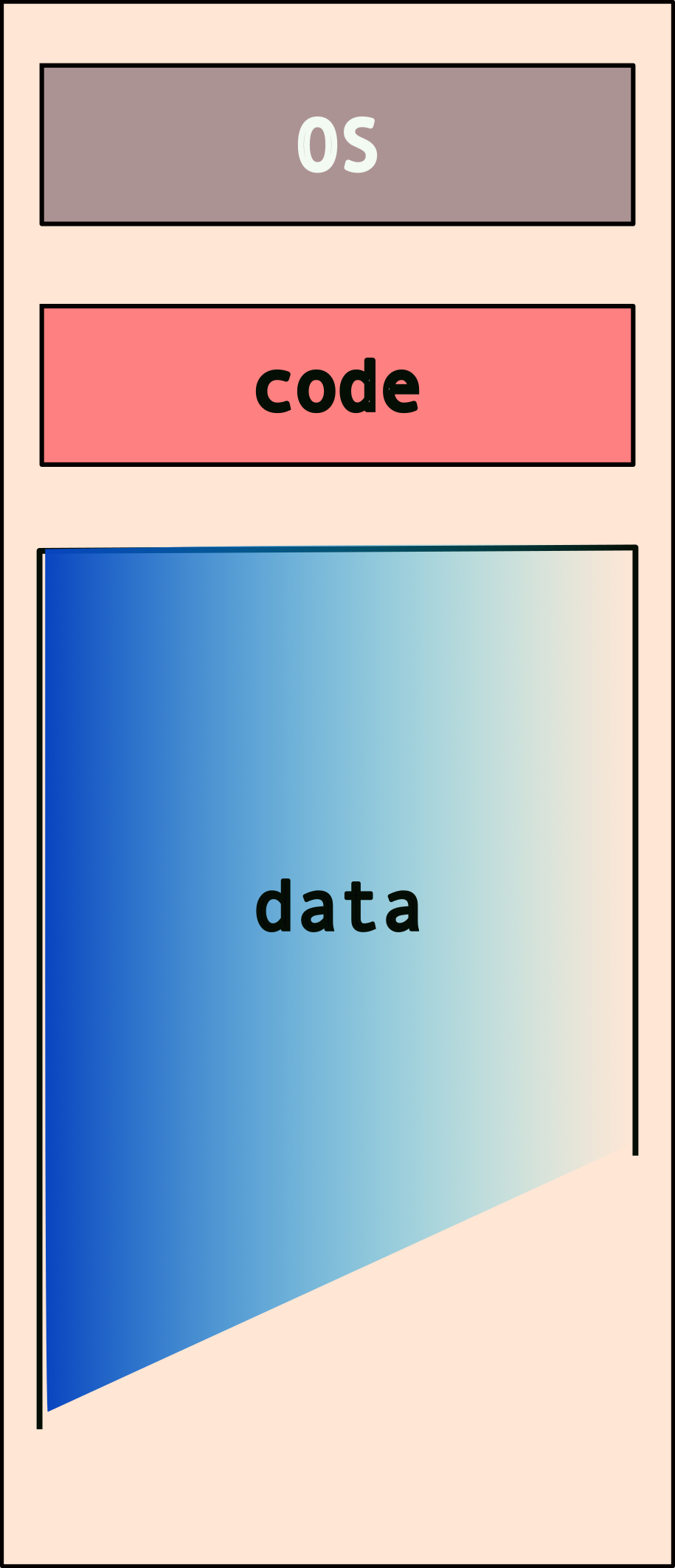

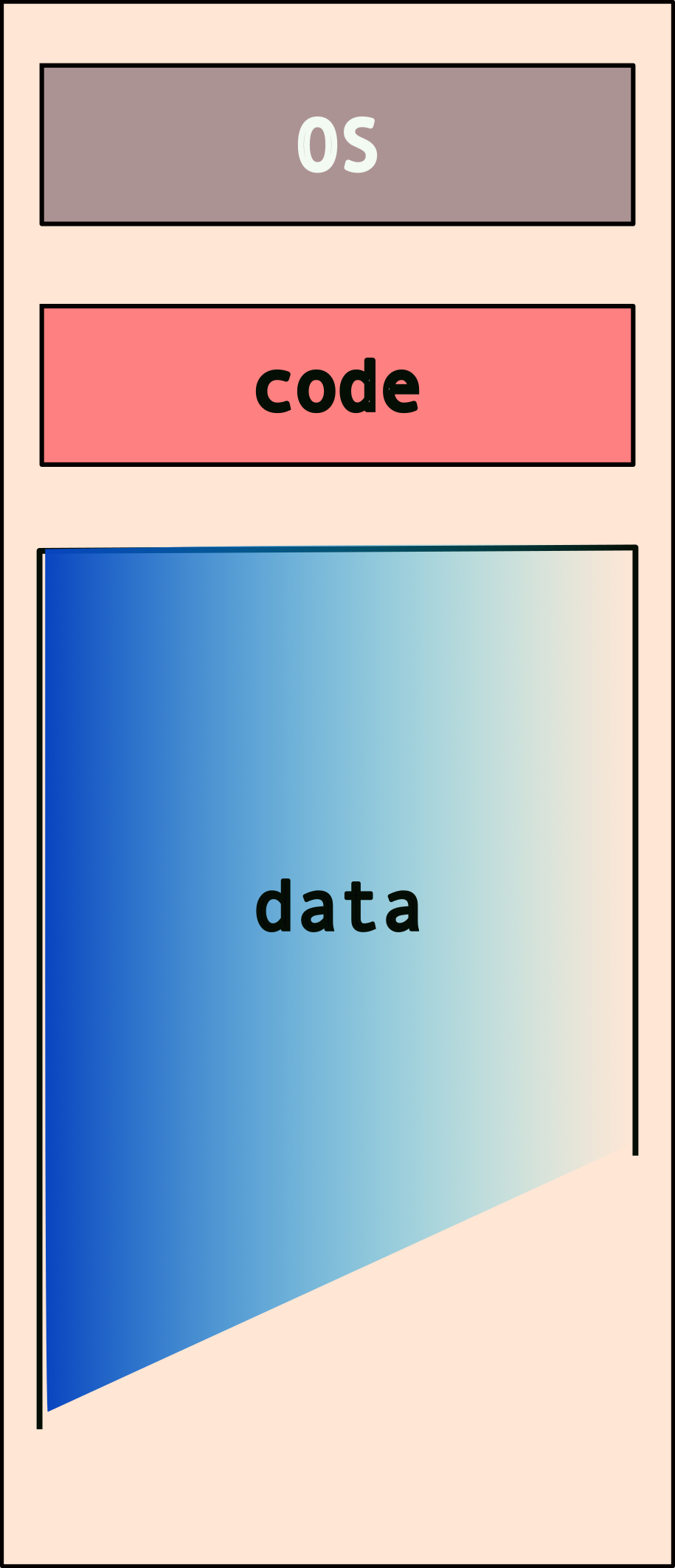

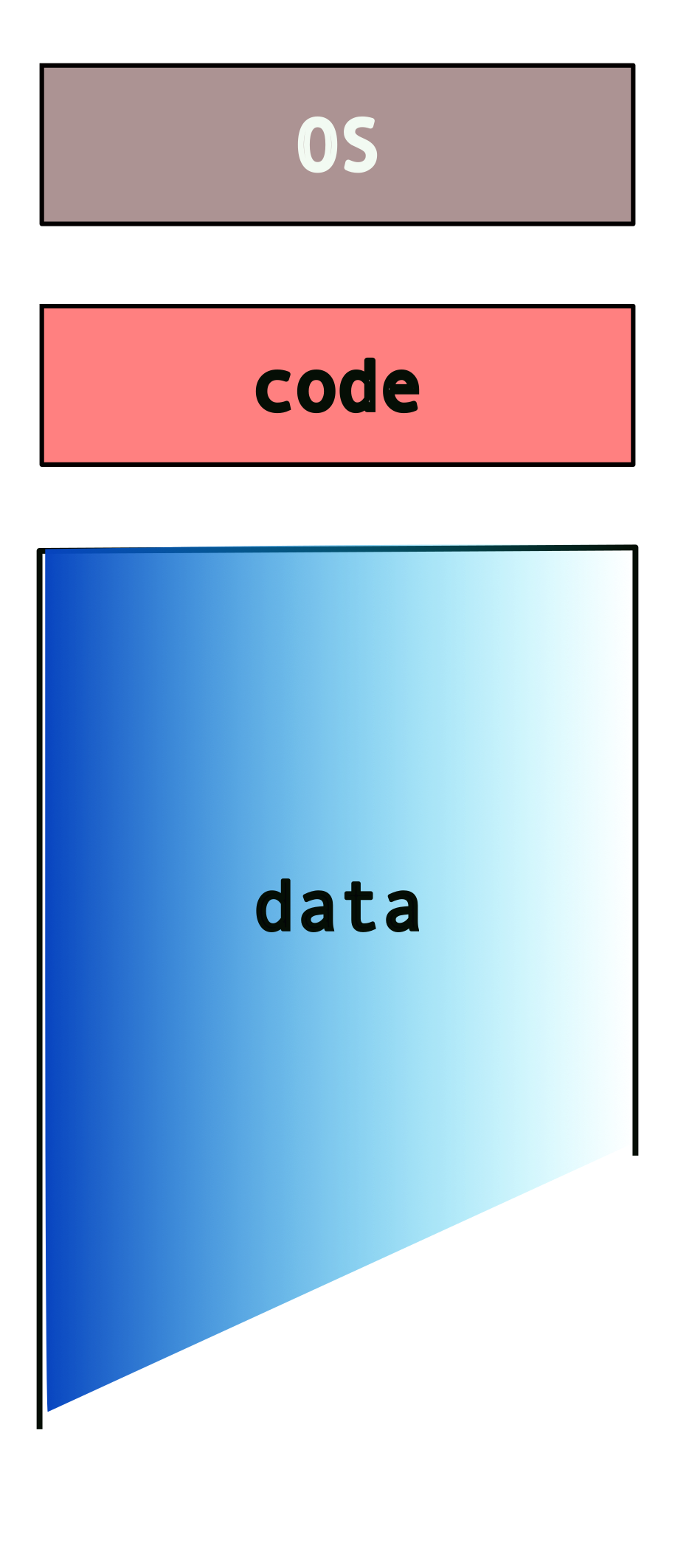

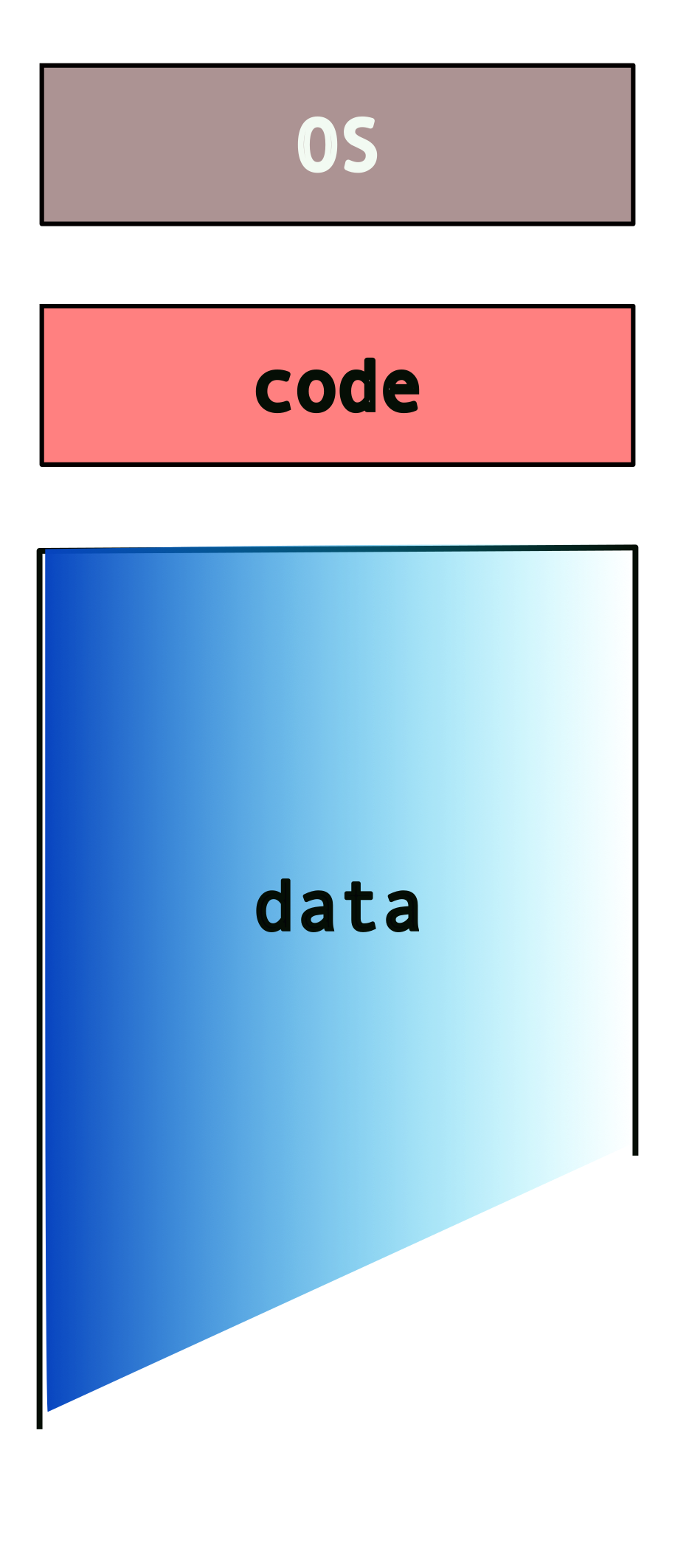

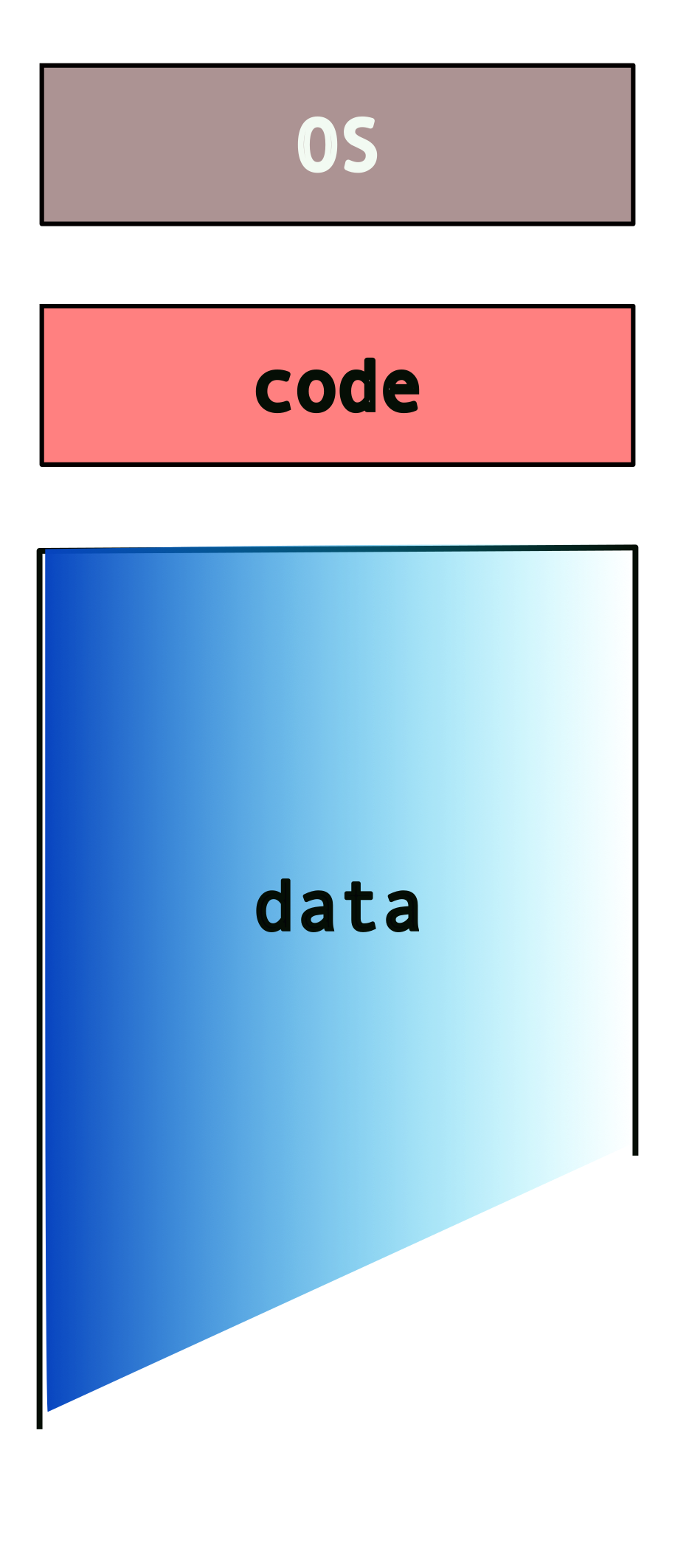

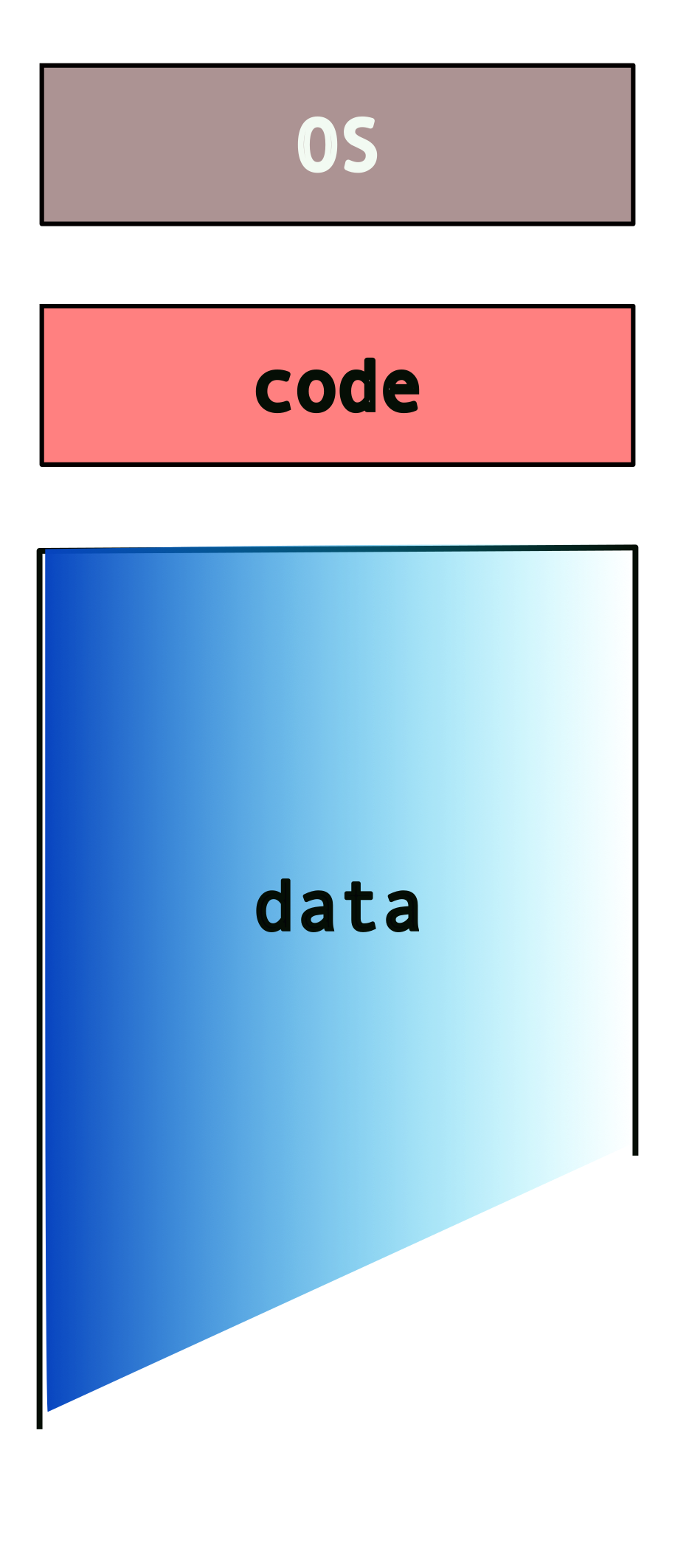

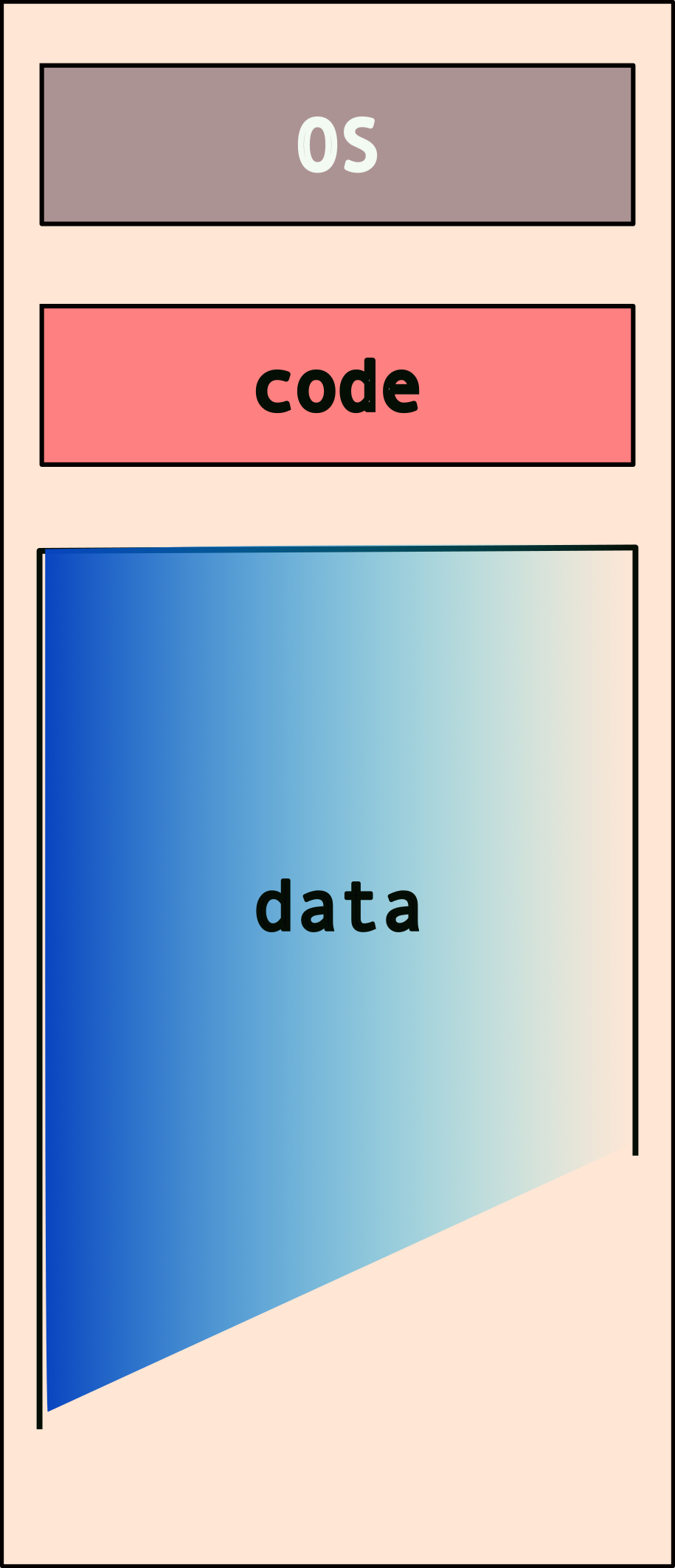

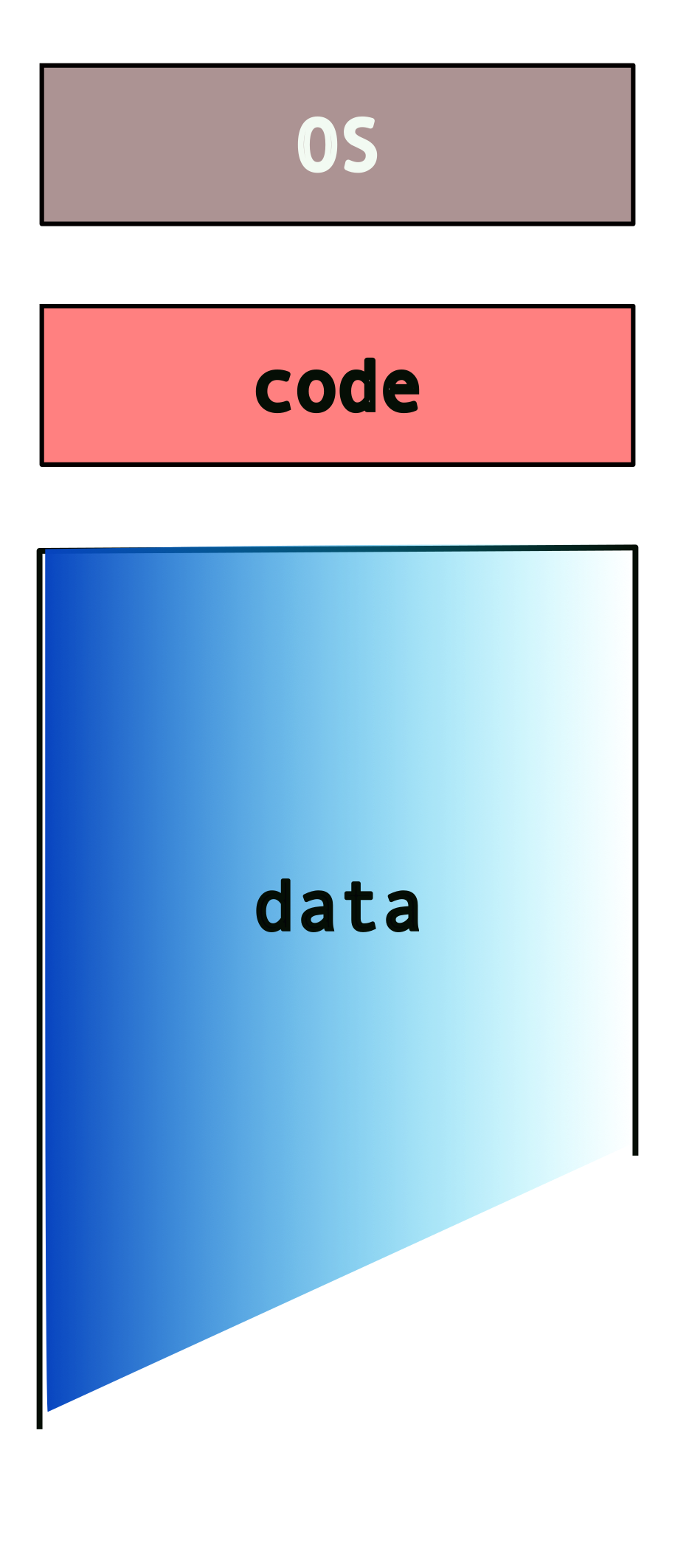

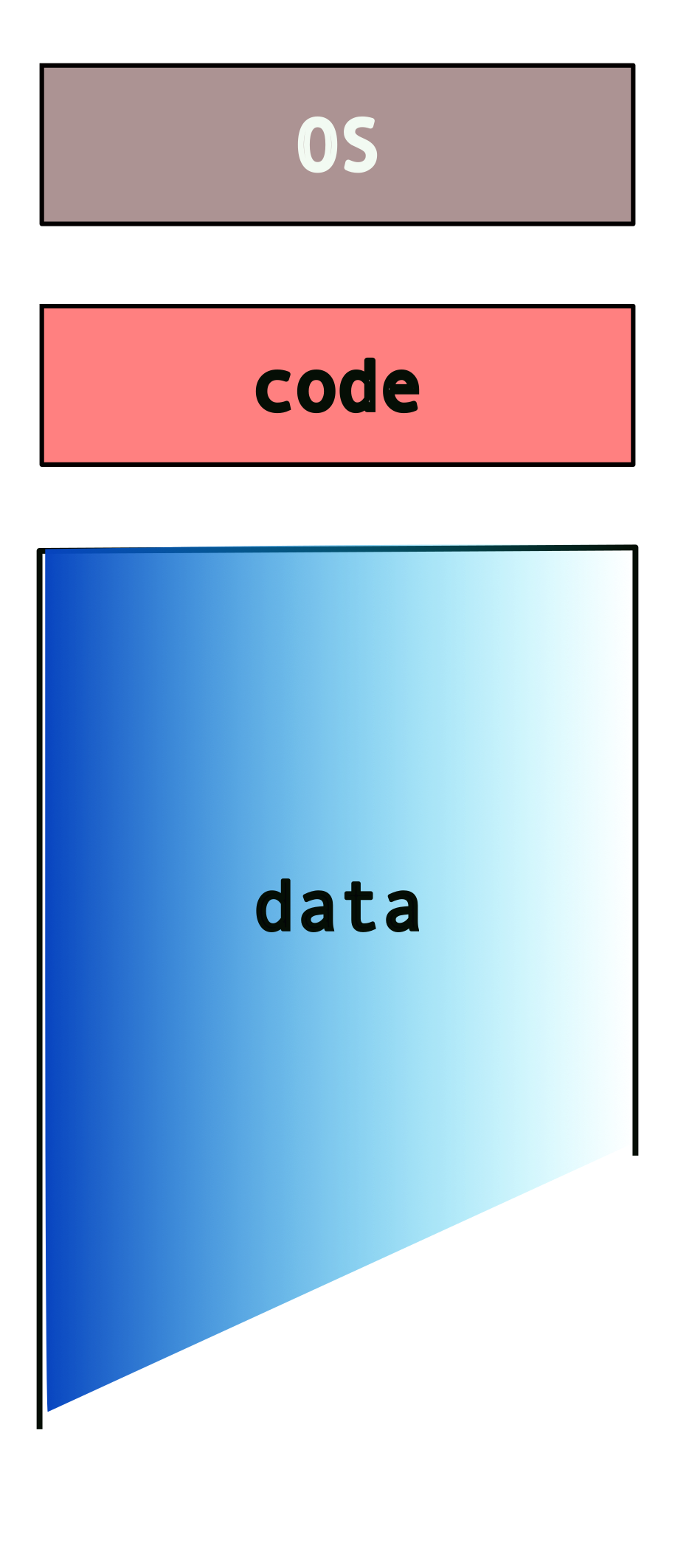

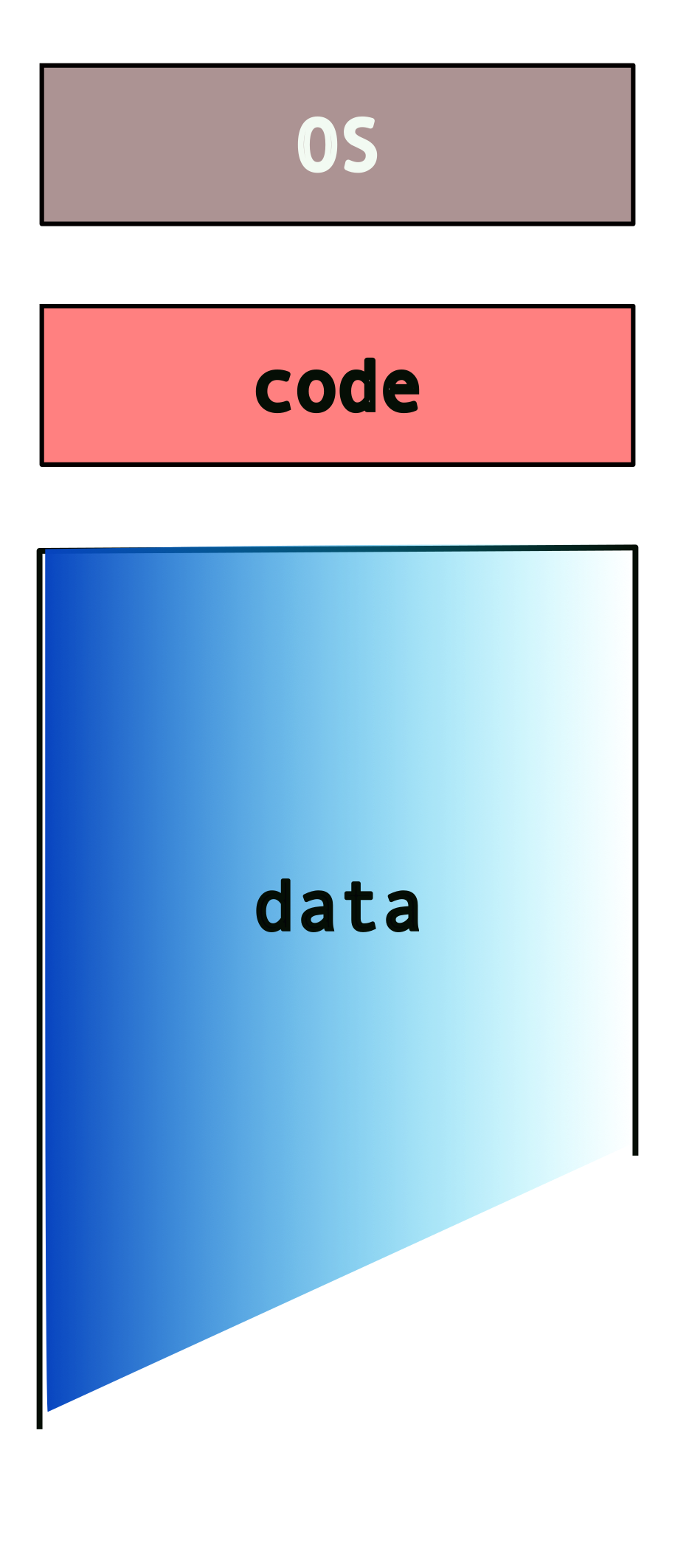

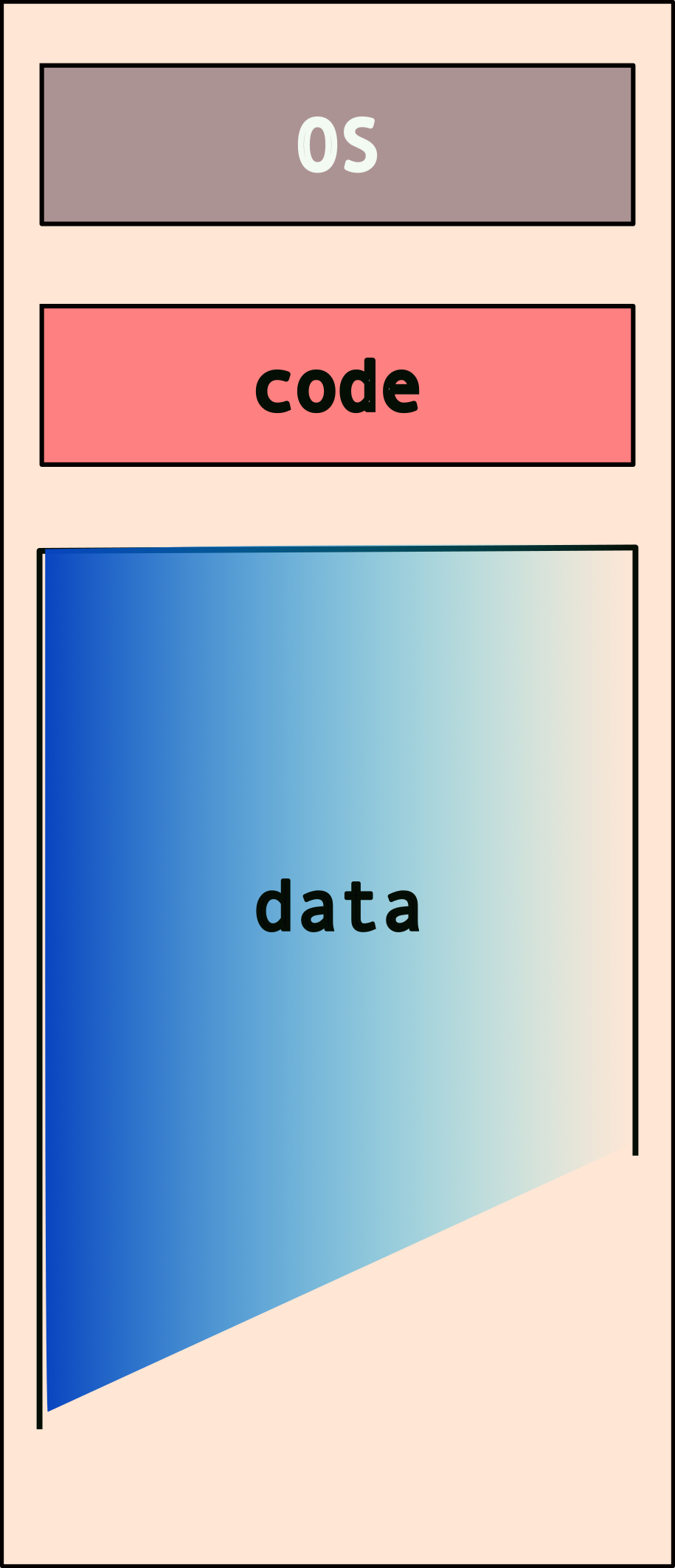

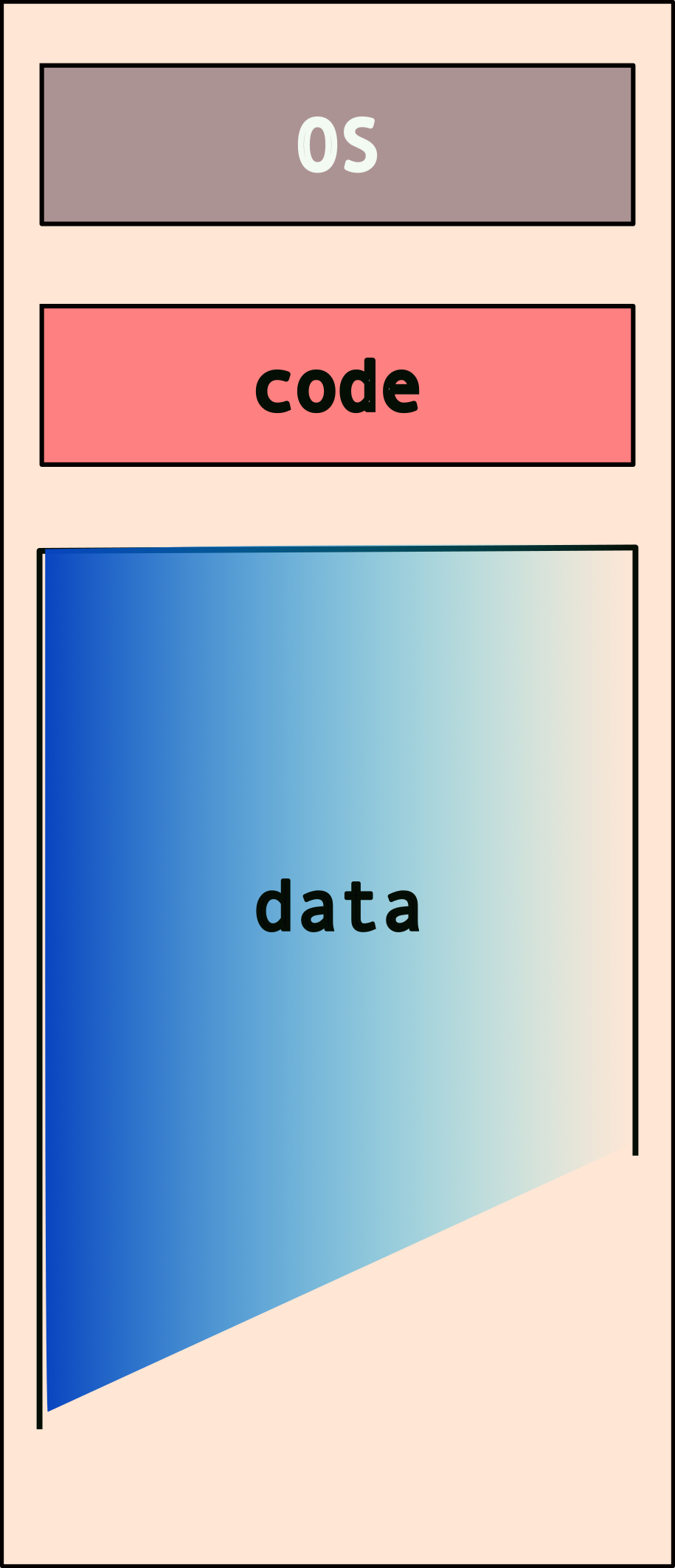

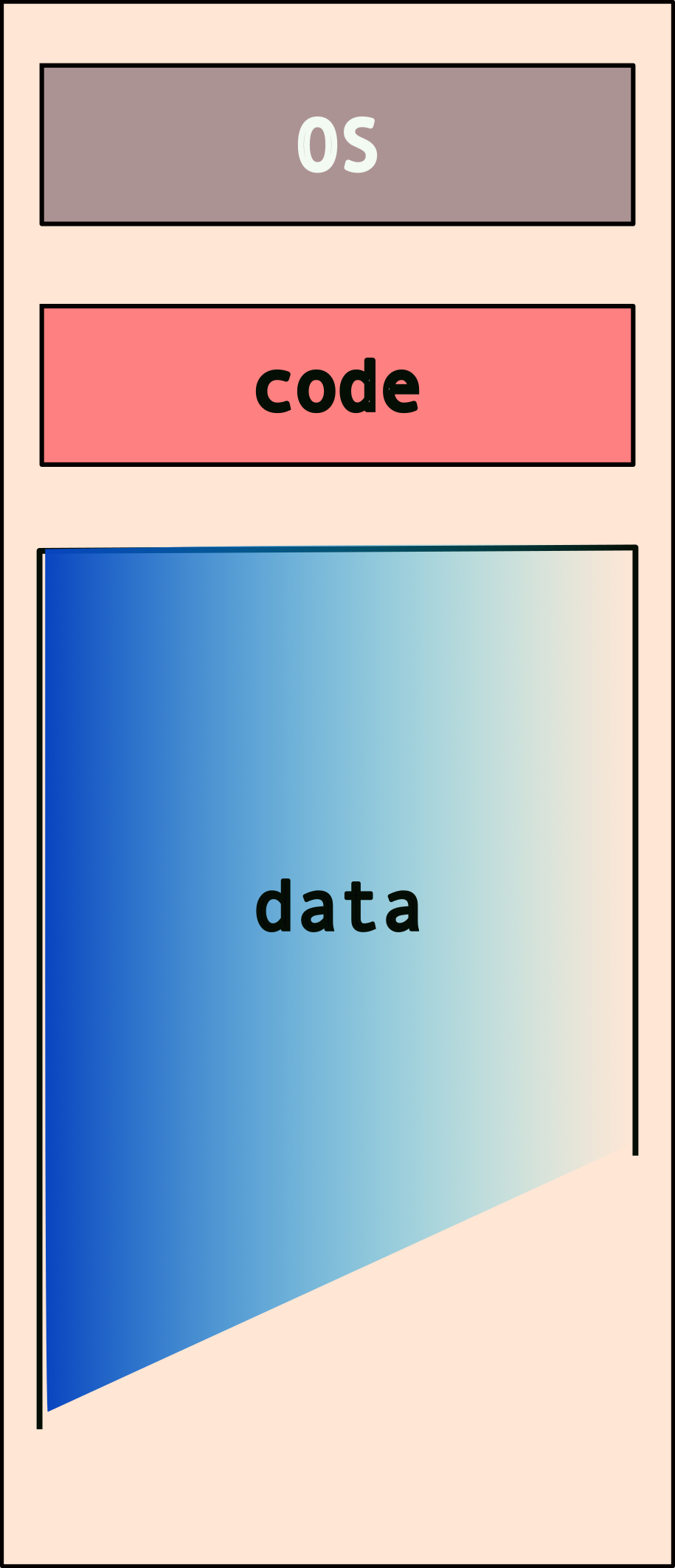

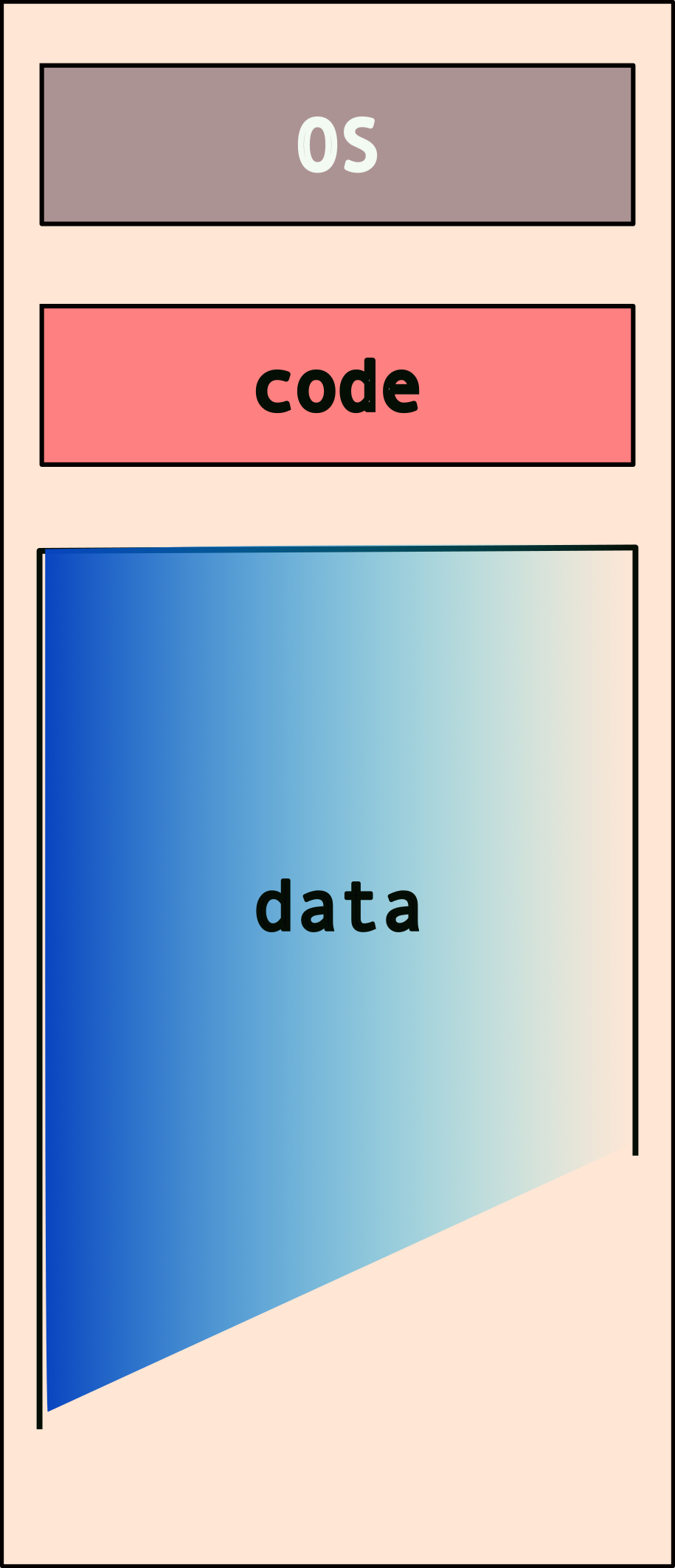

The typical memory layout for a program/system looks like this:

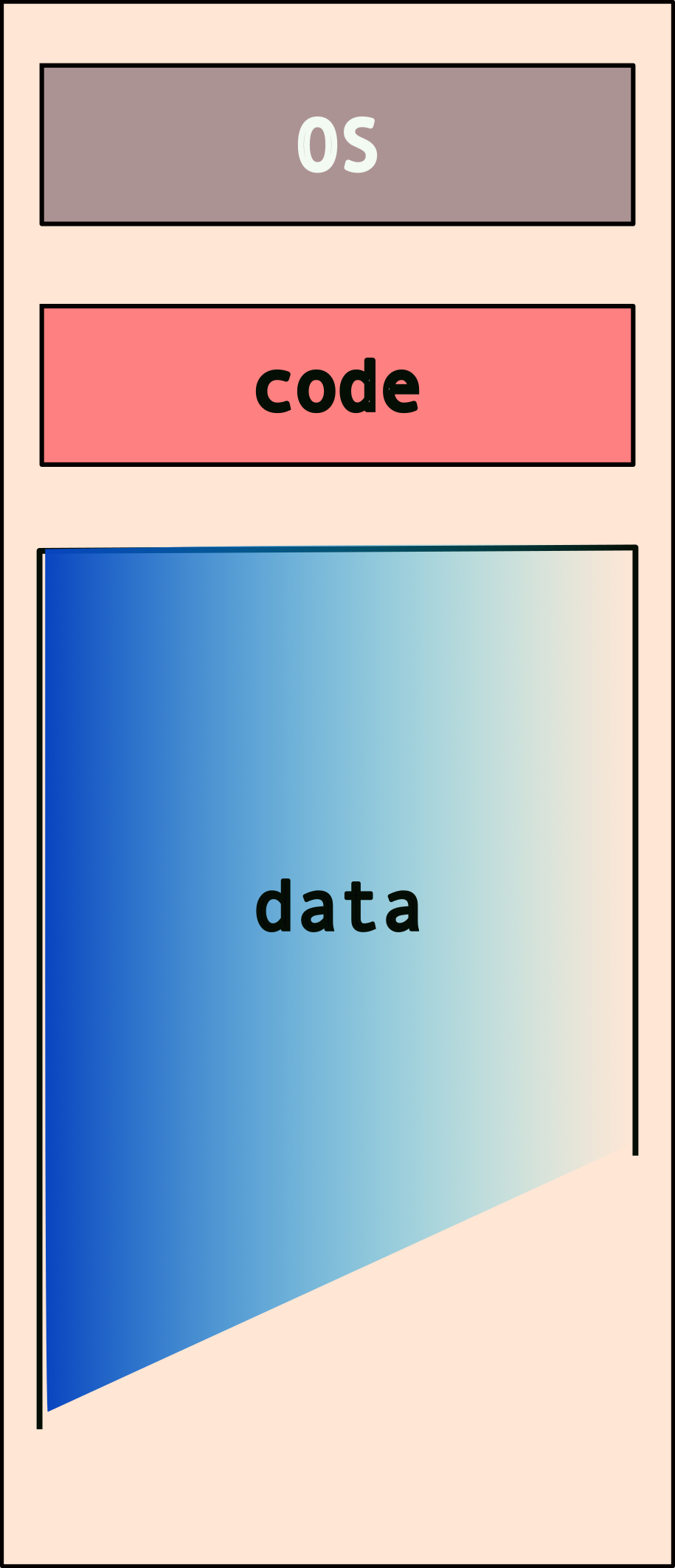

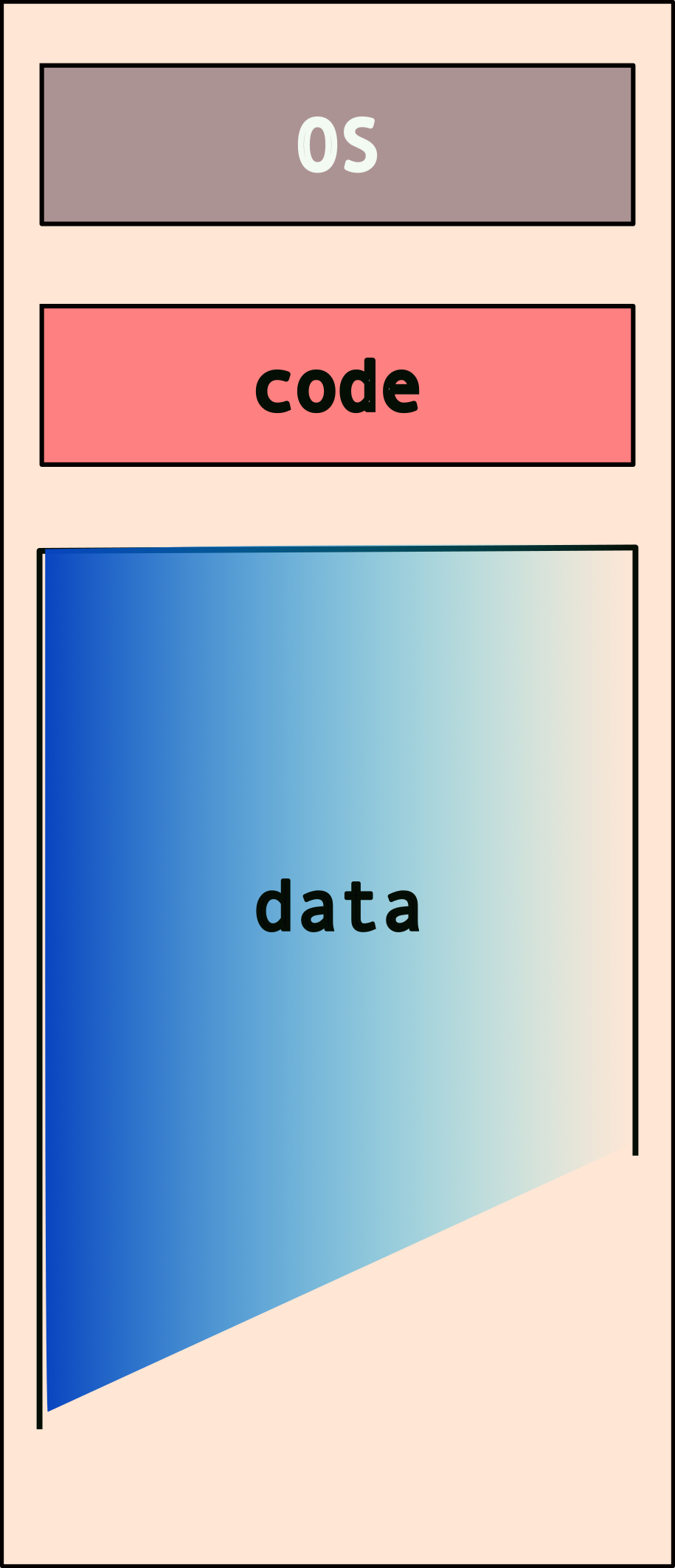

But what happens when you have more than one program? Note that each program needs its own access to the OS services and also has to store/mainpulate its own data!

So, do we have something like this (for two programs)?

What if we have more than two programs?

This can get messy, really quick! Also, note that the system may not have enough memory to fit all of these programs and their data. We nave to deal with additional issues such as programs overwiting other programs’ data/memory!

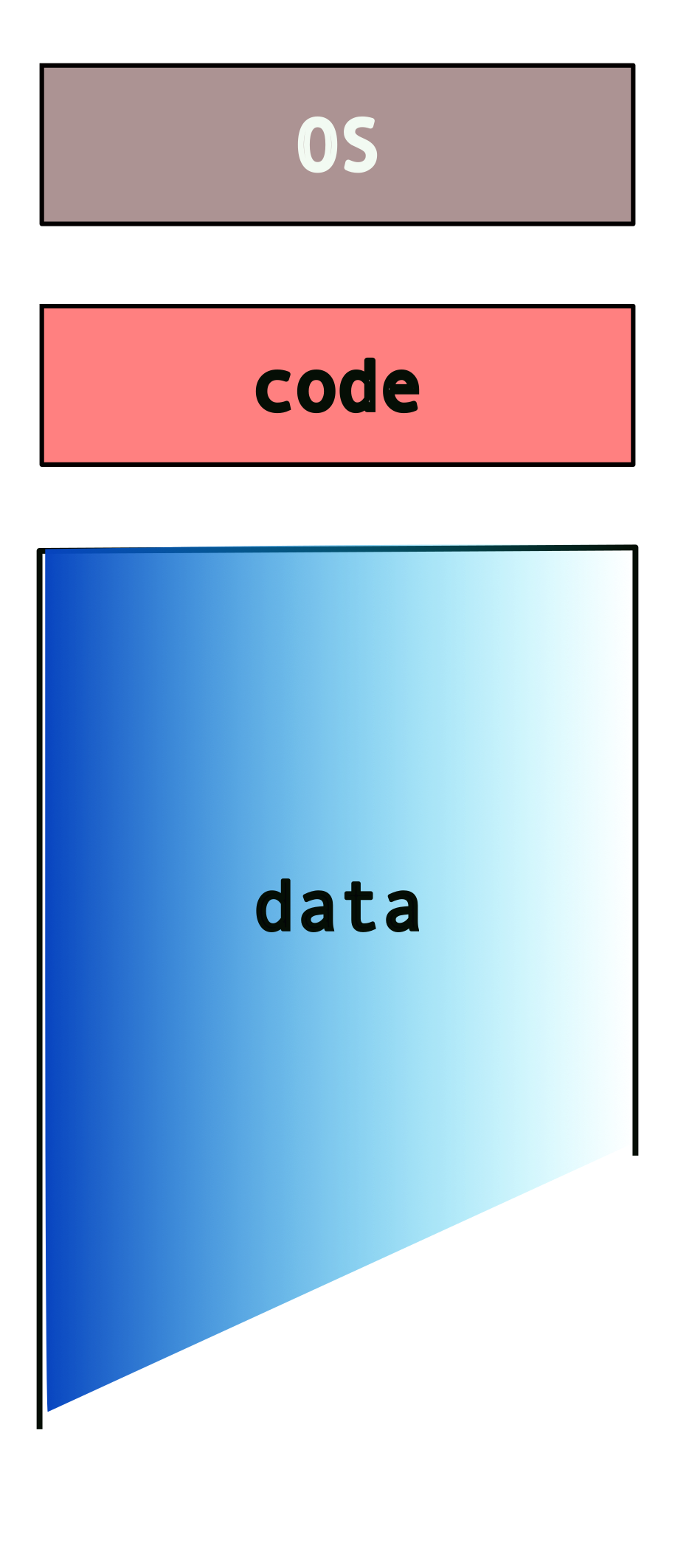

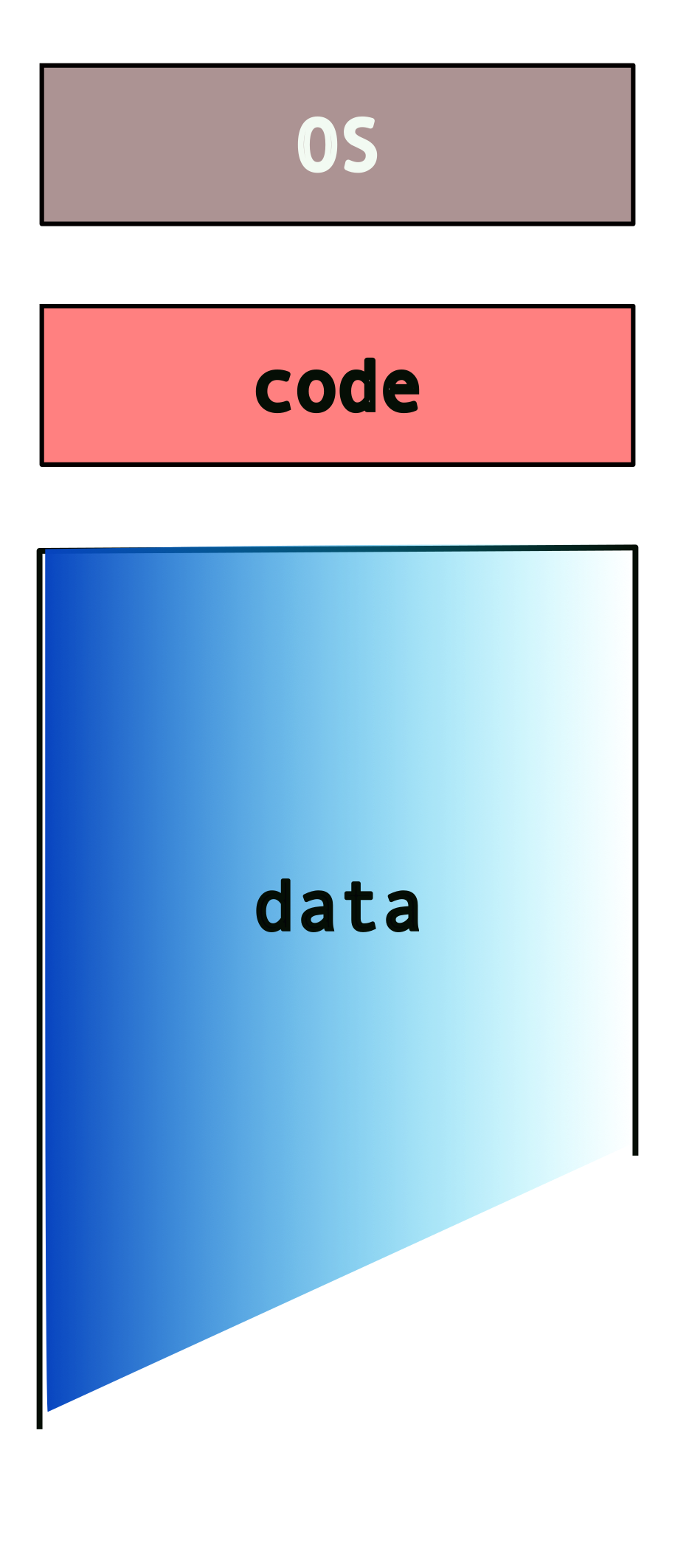

2.2.3 Enter Virtual Memory!

- makes each program “believe”

- it has the entire memory to itself!

- combination of os+hardware

So, instead of this…

we get…

or, at least the programs “think” so!

Hence, programmers need not** worry about other programs!**

3 Intro to C

You already know C!

well, some of it anyways…

3.0.1 C constructs [if you’re familiar with Java, Python, etc.]

| construct | syntax |

|---|---|

| conditionals | if and else |

| loops | while{}, do...while, for |

| basic types | int, float, double, char |

| compound | arrays*, struct, union, enum |

| functions | ret_val func_name (args){} |

* no range checks!!

C will let you access an array beyond the maximum size that you have specified while creating it. The effects of such access are implementation specific – each platform/operating system will handle it differently. Note that on platforms that don’t have memory protection, this can cause some serious problems!

What’s different [from Java]

- no object-oriented programming

- no function overloading

- no classes!

- we have

structinstead- which is similar but very different

- compiled not interpreted

- pointers!!!

3.0.2 Compound types

contiguous memory layouts: objects within these data types are laid out in memory, next to each other. This becomes important when you’re trying to use pointers to access various elements.

| type | usage |

|---|---|

struct |

related variables (like class) |

union |

same, but shared memory |

enum |

enumeration, assign names |

| array | pointer to contiguous memory |

More details in following sections.

no (built-in) boolean!

C does not have a built in boolean data type. We can mimic it by using integer values, e.g.,

0 |

false |

| any non-zero value | true |

3.0.3 Unix Manual (man) pages

- compilation of unix/C knowledge

man \<topic\>

Organized into sections:

| # | contents |

|---|---|

| 1 | general commands |

| 2 | system calls |

| 3 | library funtions (c) |

| 4 | special files |

| 5 | file formats and conventions |

| 6 | games and screensavers |

| 7 | miscellaneous |

| 8 | system administration |

[the highlighted sections are most relevant to us.]

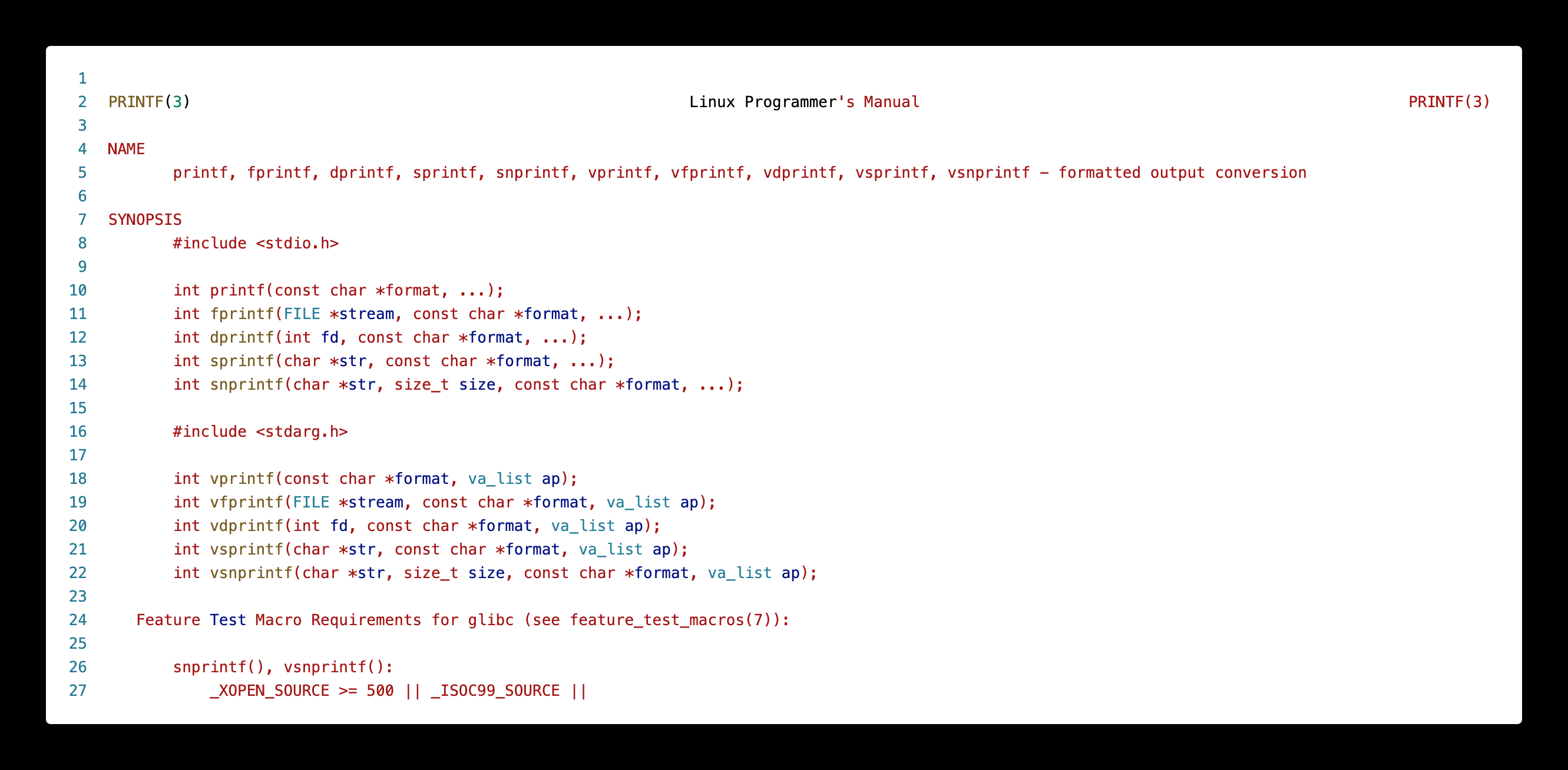

Consider the following example. If we want to see details about the common C standard library function, printf(), we type:

The initial part of the output will look something like this (run the above command in a terminal for the full output):

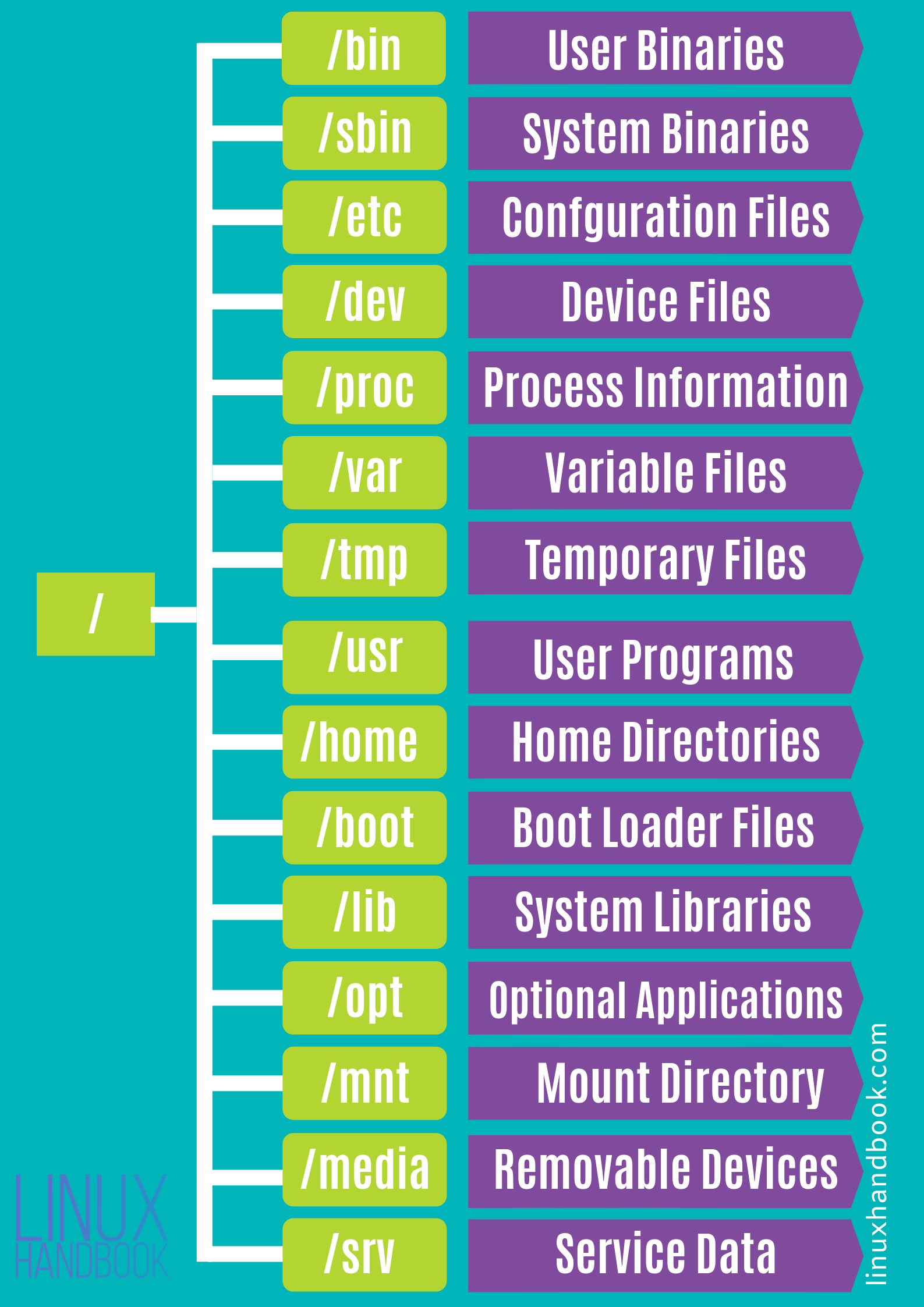

3.0.4 Header Files

- include libraries in your program

- even the C standard library

- similar to

importin java

| method | what is included |

|---|---|

\#include \<stdio.h\> |

common libraries |

\#include \"my_header.h" |

user header file |

Depending on how you invlude your header files, is determined by where they are located on your system:

| method | location |

|---|---|

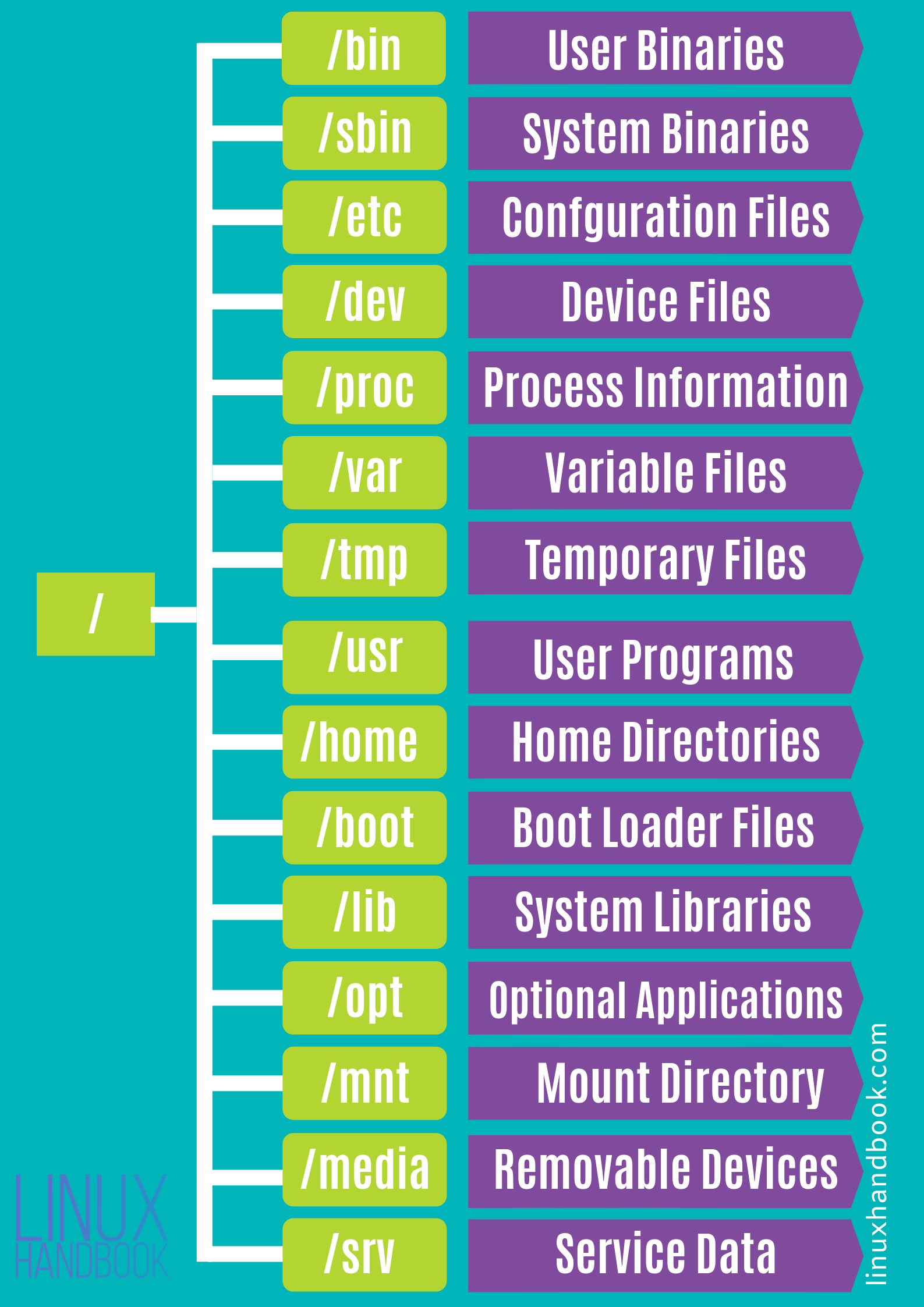

\#include \<...\> |

header path, e.g., \/usr\/include\/* |

\#include \"..." |

current directory |

* not exhaustive

3.0.5 Some Common C libraries

| library | function |

|---|---|

stdio.h |

standard input/ouput |

stdlib.h |

C standard library/utilities |

unistd.h |

unix standard library |

sys/types.h |

system types library |

string.h |

string manipulation |

math.h |

math utility functions |

3.0.6 Basic Types

These are the basic data types defined by the C language:

| type | description/size |

|---|---|

char |

smallest type, one byte |

short int |

two bytes |

int |

four bytes |

long int/long |

larger int, four-eight bytes |

float |

floating point, four bytes* |

double |

double precision, eight bytes* |

void |

lack of a type |

Important Caveats!

- when we say “one byte” → depends on platform

charis one byte, typically on intel- varies based on,

- architectures (ARM, IBM, INTEL)

- 32-bit vs 64-bit

- * Similarly, the size of the

floatanddoubledata types are not defined by C. Different compilers (and platforms) implement them with different sizes.

Code Samples

Now, let’s look at some code!

Program output:

This is a basic C program. Some details to note:

<stdio.h>is the header file for the standard I/O library in C- Every C program requires a

main()function – this is where the execution starts and ends (for the most part – we will look at nuances later) - This function expects a return value as an

int. Hence we return0at the end. This return value from main, is the value returned by your program when it completes execution.

Note that this doesn’t have to be the signature of main() but it is typical. We will explore the “proper” signature for main() later on but let’s stick to this.

// this is a single line comment

#include <stdio.h>

int main()

{

char c = 'a' ;

int i ;

float f ;

double d ;

i = 100 ;

f = 1.0 ;

d = 12398723897.2332 ;

printf( "Memory sizes of variables...\n\n \

size of char: %lu \

size of int: %lu \

size of float: %lu \

size of double: %lu\n",

sizeof(c), sizeof(int), sizeof(f), sizeof(d) ) ;

printf( "\n" ) ; // adding an extra line for nice printing at the end

return 0 ;

}Program output:

Memory sizes of variables...

size of char: 1 size of int: 4 size of float: 4 size of double: 8

sizeof() is a unary operator in the programming languages C and C++. It generates the storage size of an expression or a data type, measured in the number of char-sized units. Consequently, the construct sizeof (char) is guaranteed to be 11.

What happens when you try:

man sizeof?

3.0.7 Compound types | struct, enum, unions

The C standard defines multiple compound data types, viz.,

| type | description | size |

|---|---|---|

struct |

collection of different values | sum of all fields |

union |

one of a set of values | size of largest field |

enum |

an enumeration with “named” values | typically size of int |

Code Samples

Consider the following use case: we want to build a calendar. What information do we need? * date * month * year

// this is a single line comment

#include <stdio.h>

struct calendar{

int _date ;

int _month ;

int _year ;

} ;

int main()

{

struct calendar today ; // creating an object of type "calendar"

printf( "size of struct calendar: %lu\n", sizeof(today) ) ;

// let's initialize the object, "today"

// remember, no "constructors"

today._month = 9 ;

today._date = 5 ;

today._year = 2024 ;

printf( "date: %d/%d/%d\n",

today._month, today._date, today._year ) ;

printf( "\n" ) ; // adding an extra line for nice printing at the end

return 0 ;

}Program output:

size of struct calendar: 12

date: 9/5/2024

Are we missing anything else? * what about the day of the week? * so let’s add a field in the struct for the day of the week

// this is a single line comment

#include <stdio.h>

struct calendar{

int _date ;

int _month ;

int _year ;

int _day_of_week ; // 1 -- sunday, 2 -- monday, etc.

} ;

int main()

{

struct calendar today ; // creating an object of type "calendar"

printf( "size of struct calendar: %lu\n", sizeof(today) ) ;

// let's initialize the object, "today"

// remember, no "constructors"

today._month = 9 ;

today._date = 5 ;

today._year = 2024 ;

today._day_of_week = 5 ;

printf( "date: %d/%d/%d\t day: %d\n",

today._month, today._date, today._year, today._day_of_week ) ;

printf( "\n" ) ; // adding an extra line for nice printing at the end

return 0 ;

}Program output:

size of struct calendar: 16

date: 9/5/2024 day: 5

But this is a little tedious. We need to keep track of the mapping, i.e., “1” → “sunday”, “2” → “monday”, etc. Liable to make a mistake or forget, especially if we’re writing a of code that needs to use this mapping.

3.0.7.1 Enter enum

An enum is a way to create an “enumeration”, i.e., a list of things that are spelled out in natural language, but are really just numbers (typically int).

So, we could define something like,

and use it in the code as follows,

// this is a single line comment

#include <stdio.h>

enum weekdays{ sunday, monday, tuesday, wednesday, thursday, friday, saturday } ;

struct calendar{

int _date ;

int _month ;

int _year ;

// int _day_of_week ; // 1 -- sunday, 2 -- monday, etc.

enum weekdays _day_of_week ;

} ;

int main()

{

struct calendar today ; // creating an object of type "calendar"

printf( "size of struct calendar: %lu\n", sizeof(today) ) ;

// let's initialize the object, "today"

// remember, no "constructors"

today._month = 9 ;

today._date = 5 ;

today._year = 2024 ;

// today._day_of_week = 5 ;

today._day_of_week = thursday ;

printf( "date: %d/%d/%d\t day: %d\n",

today._month, today._date, today._year, today._day_of_week ) ;

printf( "\n" ) ; // adding an extra line for nice printing at the end

return 0 ;

}Program output:

size of struct calendar: 16

date: 9/5/2024 day: 4

You can choose the values in an enum explicitly, e.g..

But, to be honest, this is not very useful. It still prints out a number instead of a string, like “monday”, “tuesday”, etc.

Well, if what we want is a string, then we need to store a string.

// this is a single line comment

#include <stdio.h>

enum weekdays{ sunday, monday, tuesday, wednesday, thursday, friday, saturday } ;

struct calendar{

int _date ;

int _month ;

int _year ;

// int _day_of_week ; // 1 -- sunday, 2 -- monday, etc.

// weekdays _day_of_week ;

char _day_of_week[64] ;

} ;

int main()

{

struct calendar today ; // creating an object of type "calendar"

printf( "size of struct calendar: %lu\n", sizeof(today) ) ;

// let's initialize the object, "today"

// remember, no "constructors"

today._month = 9 ;

today._date = 5 ;

today._year = 2024 ;

// today._day_of_week = 5 ;

// today._day_of_week = thursday ;

today._day_of_week = "thursday" ;

printf( "date: %d/%d/%d\t day: %s\n",

today._month, today._date, today._year, today._day_of_week ) ;

printf( "\n" ) ; // adding an extra line for nice printing at the end

return 0 ;

}Program output:

inline_exec_tmp.c: In function main:

inline_exec_tmp.c:29:24: error: assignment to expression with array type

29 | today._day_of_week = "thursday" ;

| ^

make[1]: *** [Makefile:33: inline_exec_tmp] Error 1Wait, why does this fail?

We cannot assign one array to another! C has no way of knowing how to do this.

One way to bypass this, is to do it at creation time for the today object, as follows:

// all items created and initialized together so this works!

struct calendar today = {9, 5, 2024, "thursday"} ; One alternative is to explicitly set the elements of the array, as follows:

// this is a single line comment

#include <stdio.h>

enum weekdays{ sunday, monday, tuesday, wednesday, thursday, friday, saturday } ;

struct calendar{

int _date ;

int _month ;

int _year ;

// int _day_of_week ; // 1 -- sunday, 2 -- monday, etc.

// weekdays _day_of_week ;

char _day_of_week[10] ;

} ;

int main()

{

struct calendar today ; // creating an object of type "calendar"

printf( "size of struct calendar: %lu\n", sizeof(today) ) ;

// let's initialize the object, "today"

// remember, no "constructors"

today._month = 9 ;

today._date = 5 ;

today._year = 2024 ;

// today._day_of_week = 5 ;

// today._day_of_week = thursday ;

// today._day_of_week = "thursday" ;

today._day_of_week[0] = 't' ;

today._day_of_week[1] = 'h' ;

today._day_of_week[2] = 'u' ;

today._day_of_week[3] = 'r' ;

today._day_of_week[4] = 's' ;

today._day_of_week[5] = 'd' ;

today._day_of_week[6] = 'a' ;

today._day_of_week[7] = 'y' ;

today._day_of_week[8] = '\0' ;

printf( "date: %d/%d/%d\t day: %s\n",

today._month, today._date, today._year, today._day_of_week ) ;

printf( "\n" ) ; // adding an extra line for nice printing at the end

return 0 ;

}Program output:

size of struct calendar: 24

date: 9/5/2024 day: thursday

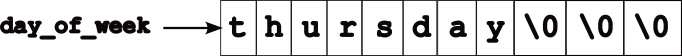

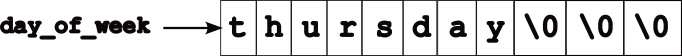

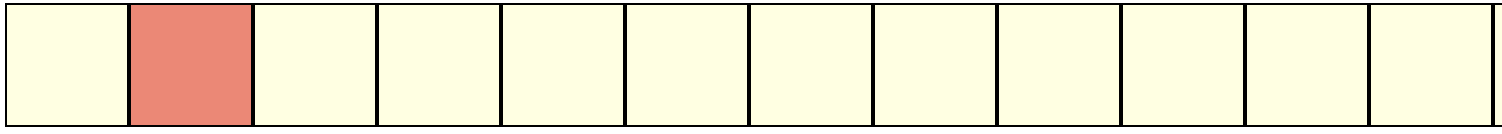

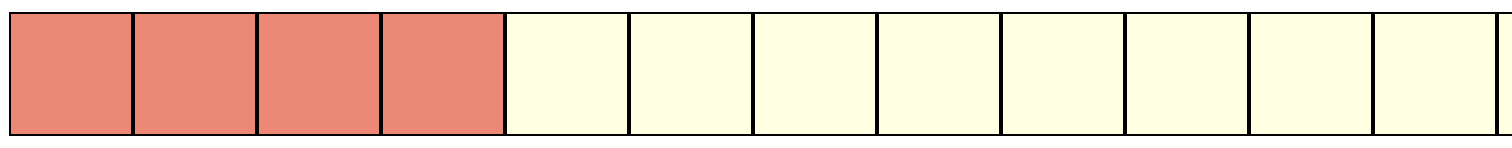

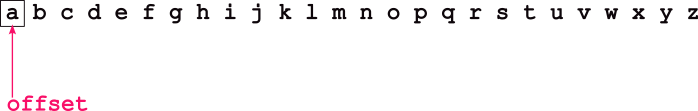

strings in C → an array of characters that are null terminated, i.e., \0. So the `day_of_week’ field looks like this.

There’s a reason I’ve used the arrow in the above image. :wink:

So what is a union?

A union is a value that may have any of multiple representations or formats within the same area of memory; that consists of a variable that may hold such a data structure.2

A union specifies the oermitted data types that may be stored in that region of memory, e.g., an int and a float, but never both. Hence, a union can hold only one data type at a time. Once a new value is assigned, the existing data is overwritten with the new value.

Syntax is similar to struct but the effects are very different.

Code Samples

#include <stdio.h>

struct calendar{

int _date ;

int _month ;

int _year ;

// int _day_of_week ; // 1 -- sunday, 2 -- monday, etc.

// weekdays _day_of_week ;

char _day_of_week[10] ;

} ;

union info{

int _age ;

double _weight ;

} ;

int main()

{

union info my_info ;

struct calendar today ; // creating an object of type "calendar"

// look at the output of this sizeof!

printf( "size of struct = %lu\t size of union = %lu\n",

sizeof(today), sizeof(my_info) ) ;

// now I'm using the "int" part of the union

my_info._age = 23452345 ;

printf( "\n age = %d\t weight = %f\n", my_info._age, my_info._weight ) ;

// now I'm using the "float" part of the union

my_info._weight = 999999 ;

printf( "age = %d\t weight = %f\n", my_info._age, my_info._weight ) ;

printf( "\n" ) ;

return 0 ; // default value

}Program output:

size of struct = 24 size of union = 8

age = 23452345 weight = 0.000000

age = 0 weight = 999999.000000

As we see, we can only use one of the fields at any point in time. Unions aren’t very common today bit still do find use in may systems with limited memory, e.g., embedded systems.

3.1 Intermediate C

3.2 Objectives

- diving into some details about C

- format strings

- variable modifiers

- nuances about compound types

3.3 Types

Modifiers

Most C types can have modifiers attached to them, i.e., one of,

unsigned- variables that cannot be negative. Given that variables have a fixed bit-width, they can use the extra bit (“negative” no longer needs to be tracked) to instead represent numbers twice the size ofsignedvariants.signed- signed variables. You don’t see this modifier as much becausechar,int,longall default tosigned.long- Used to modify another type to make it larger in some cases.long intcan represent larger numbers and is synonymous withlong.long long int(orlong long) is an even larger value!static- this variable should not be accessible outside of the .c file in which it is defined.const- an immutable value. We won’t focus much on this modifier.volatile- this variable should be “read from memory” every time it is accessed. Confusing now, relevant later, but not a focus.

3.3.0.1 Examples

int

main(void)

{

char a;

signed char a_signed; /* same type as `a` */

char b; /* values between [-128, 127] */

unsigned char b_unsigned; /* values between [0, 256] */

int c;

short int c_shortint;

short c_short; /* same as `c_shortint` */

long int c_longint;

long c_long; /* same type as `c_longint` */

return 0;

}Program output:

You might see all of these, but the common primitives, and their sizes:

#include <stdio.h>

/* Commonly used types that you practically see in a lot of C */

int

main(void)

{

char c;

unsigned char uc;

short s;

unsigned short us;

int i;

unsigned int ui;

long l;

unsigned long ul;

printf("char:\t%ld\nshort:\t%ld\nint:\t%ld\nlong:\t%ld\n",

sizeof(c), sizeof(s), sizeof(i), sizeof(l));

return 0;

}Program output:

char: 1

short: 2

int: 4

long: 83.3.1 Common POSIX types and values

stddef.h3:size_t,usize_t,ssize_t- types for variables that correspond to sizes. These include the size of the memory request tomalloc, the return value fromsizeof, and the arguments and return values fromread/write/…ssize_tis signed (allows negative values), while the others are unsigned.NULL- is just#define NULL ((void *)0)

limits.h4:INT_MAX,INT_MIN,UINT_MAX- maximum and minimum values for a signed integer, and the maximum value for an unsigned integer.LONG_MAX,LONG_MIN,ULONG_MAX- minimum and maximum numerical values forlongs andunsigned longs.- Same for

short ints(SHRT_MAX, etc…) andchars (CHAR_MAX, etc…).

3.3.2 Format Strings

Many standard library calls take “format strings”. You’ve seen these in printf. The following format specifiers should be used:

%d-int%ld-long int%u-unsigned int%c-char%x-unsigned intprinted as hexadecimal%lx-long unsigned intprinted as hexadecimal%p- prints out any pointer value,void *%s- prints out a string,char *

Format strings are also used in scanf functions to read and parse input.

You can control the spacing of the printouts using %NX where N is the number of characters you want printed out (as spaces), and X is the format specifier above. For example, "%10ld"would print a long integer in a 10 character slot. Adding \n and \t add in the newlines and the tabs. If you need to print out a “\”, use \\.

3.3.2.1 Example

#include <stdio.h>

#include <limits.h>

int

main(void)

{

printf("Integers: %d, %ld, %u, %c\n"

"Hex and pointers: %lx, %p\n"

"Strings: %s\n",

INT_MAX, LONG_MAX, UINT_MAX, '*',

LONG_MAX, &main,

"hello world");

return 0;

}Program output:

Integers: 2147483647, 9223372036854775807, 4294967295, *

Hex and pointers: 7fffffffffffffff, 0x555555555149

Strings: hello world3.3.3 More about Compound types (struct and union)

Consider the following exmaple:

#include <stdio.h>

struct hamburger {

int num_burgers;

int cheese;

int num_patties;

};

union food {

int num_eggs;

struct hamburger burger;

};

/* Same contents as the union. */

struct all_food {

int num_eggs;

struct hamburger burger;

};

int

main(void)

{

union food f_eggs, f_burger;

/* now I shouldn't access `.burger` in `f_eggs` */

f_eggs.num_eggs = 10;

/* This is just syntax for structure initialization. */

f_burger.burger = (struct hamburger) {

.num_burgers = 5,

.cheese = 1,

.num_patties = 1

};

/* now shouldn't access `.num_eggs` in `f_burger` */

printf("Size of union: %ld\nSize of struct: %ld\n",

sizeof(union food), sizeof(struct all_food));

return 0;

}Program output:

Size of union: 12

Size of struct: 16We can see the effect of the union: The size is max(fields) rather than sum(fields). What other examples can you think of where you might want unions?

An aside on syntax: The structure initialization syntax in this example is simply a shorthand. The

struct hamburgerinitialization above is equivalent to:Though since there are so many

.s, this is a little confusing. We’d typically want to simply as:More on

->in the next section.

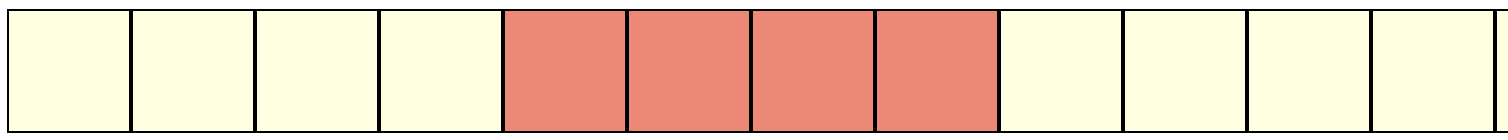

Arrays are simple contiguous data items, all of the same type. int a[4] = {6, 7, 8, 9} should be imagined as:

a -> +---+---+---+---+

| 6 | 7 | 8 | 9 |

+---+---+---+---+When you access an array item, a[2] == 8, C is really treating a as a pointer, doing pointer arithmetic, and dereferences to find offset 2.

#include <stdio.h>

int main(void) {

int a[] = {6, 7, 8, 9};

int n = 1;

printf("0th index: %p == %p; %d == %d\n", a, &a[0], *a, a[0]);

printf("nth index: %p == %p; %d == %d\n", a + n, &a[n], *(a + n), a[n]);

return 0;

}Program output:

0th index: 0x7fffffffe290 == 0x7fffffffe290; 6 == 6

nth index: 0x7fffffffe294 == 0x7fffffffe294; 7 == 7Making this a little more clear, lets understand how C accesses the nth item. Lets make a pointer int *p = a + 1 (we’ll just simply and assume that n == 1 here), we should have this:

p ---------+

|

V

a ---> +---+---+---+---+

| 6 | 7 | 8 | 9 |

+---+---+---+---+Thus if we dereference p, we access the 1st index, and access the value 7.

#include <stdio.h>

int

main(void)

{

int a[] = {6, 7, 8, 9};

/* same thing as the previous example, just making the pointer explicit */

int *p = a + 1;

printf("nth index: %p == %p; %d == %d\n", p, &a[1], *p, a[1]);

return 0;

}Program output:

nth index: 0x7fffffffe294 == 0x7fffffffe294; 7 == 7We can see that pointer arithmetic (i.e. doing addition/subtraction on pointers) does the same thing as array indexing plus a dereference. That is, *(a + 1) == a[1]. For the most part, arrays and pointers can be viewed as very similar, with only a few exceptions5.

Pointer arithmetic should generally be avoided in favor of using the array syntax. One complication for pointer arithmetic is that it does not fit our intuition for addition:

#include <stdio.h>

int

main(void)

{

int a[] = {6, 7, 8, 9};

char b[] = {'a', 'b', 'c', 'd'};

/*

* Calculation: How big is the array?

* How big is each item? The division is the number of items.

*/

int num_items = sizeof(a) / sizeof(a[0]);

int i;

for (i = 0; i < num_items; i++) {

printf("idx %d @ %p & %p\n", i, a + i, b + i);

}

return 0;

}Program output:

idx 0 @ 0x7fffffffe290 & 0x7fffffffe2a4

idx 1 @ 0x7fffffffe294 & 0x7fffffffe2a5

idx 2 @ 0x7fffffffe298 & 0x7fffffffe2a6

idx 3 @ 0x7fffffffe29c & 0x7fffffffe2a7Note that the pointer for the integer array (a) is being incremented by 4, while the character array (b) by 1. Focusing on the key part:

idx 0 @ ...0 & ...4

idx 1 @ ...4 & ...5

idx 2 @ ...8 & ...6

idx 3 @ ...c & ...7

^ ^

| |

Adds 4 ----+ |

Adds 1 -----------+Thus, pointer arithmetic depends on the size of the types within the array. There are types when one wants to iterate through each byte of an array, even if the array contains larger values such as integers. For example, the memset and memcmp functions set each byte in an range of memory, and byte-wise compare two ranges of memory. In such cases, casts can be used to manipulate the pointer type (e.g. (char *)a enables a to not be referenced with pointer arithmetic that iterates through bytes).

3.3.3.1 Example

#include <stdio.h>

/* a simple linked list */

struct student {

char *name;

struct student *next;

};

struct student students[] = {

{.name = "Penny", .next = &students[1]}, /* or `students + 1` */

{.name = "Gabe", .next = NULL}

};

struct student *head = students;

/*

* head --> students+------+

* | Penny | Gabe |

* | next | next |

* +-|-----+---|--+

* | ^ +----->NULL

* | |

* +-----+

*/

int

main(void)

{

struct student *i;

for (i = head; i != NULL; i = i->next) {

printf("%s\n", i->name);

}

return 0;

}Program output:

Penny

Gabe3.3.3.2 Generic Pointer Types

Generally, if you want to treat a pointer type as another, you need to use a cast. You rarely want to do this (see the memset example below to see an example where you might want it). However, there is a need in C to have a “generic pointer type” that can be implicitly cast into any other pointer type. To get a sense of why this is, two simple examples:

- What should the type of

NULLbe?

NULL is used as a valid value for any pointer (of any type), thus NULL must have a generic type that can be used in the code as a value for any pointer. Thus, the type of NULL must be void *.

mallocreturns a pointer to newly-allocated memory. What should the type of the return value be?

C solves this with the void * pointer type. Recall that void is not a valid type for a variable, but a void * is different. It is a "generic pointer that cannot be dereferenced*. Note that dereferencing a void * pointer shouldn’t work as void is not a valid variable type (e.g. void *a; *a = 10; doesn’t make much sense because *a is type void).

#include <stdlib.h>

int

main(void)

{

int *intptr = malloc(sizeof(int)); /* malloc returns `void *`! */

*intptr = 0;

return *intptr;

}Program output:

Data-structures often aim to store data of any type (think: a linked list of anything). Thus, in C, you often see void *s to reference the data they store.

3.3.3.3 Relationship between Pointers, Arrays, and Arrows

Indexing into arrays (a[b]) and arrows (a->b) are redundant syntactic features, but they are very convenient.

&a[b]is equivalent toa + bwhereais a pointer andbis an index.a[b]is equivalent to*(a + b)whereais a pointer andbis an index.a->bis equivalent to(*a).bwhereais a pointer to a variable with a structure type that hasbas a field.

Generally, you should always try and stick to the array and arrow syntax were possible, as it makes your intention much more clear when coding than the pointer arithmetic and dereferences.

4 Pointers and Arrays

what is a “pointer”?

“Pointing dogs, sometimes called bird dogs,

are a type of gundog typically used in finding game.”

4.0.1 Remember this?

ok…hold on to that…

4.0.2 What happens when we declare a variable?

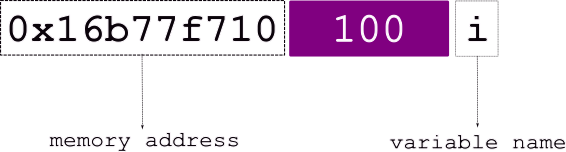

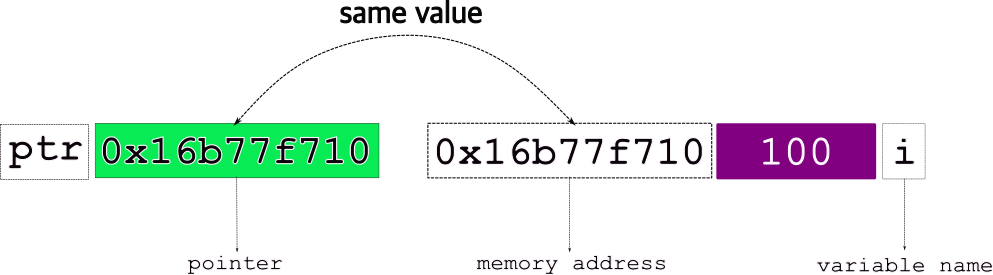

A visual representation of the above:

Let’s break it down a bit,

The various elements from the above figure:

| variable name | i |

| stored at address | 0x16677f710 |

| value | 100 |

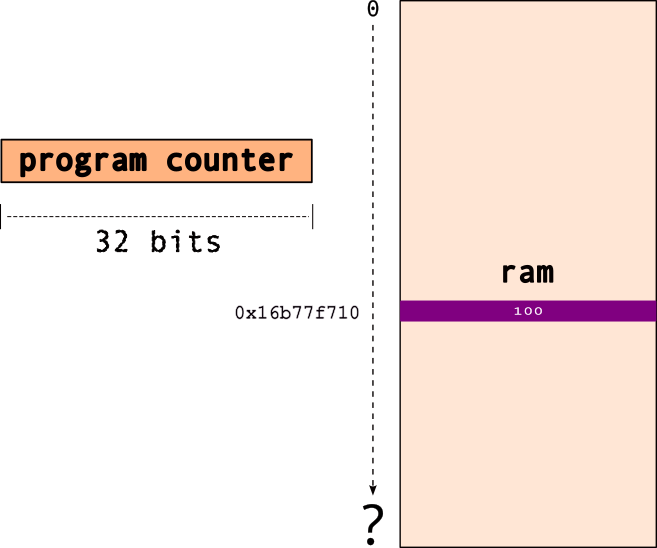

Let’s revisit this…

so it could look something like,

note that 0x16677f710 is the address i.e., the location in memory for the variable , i.

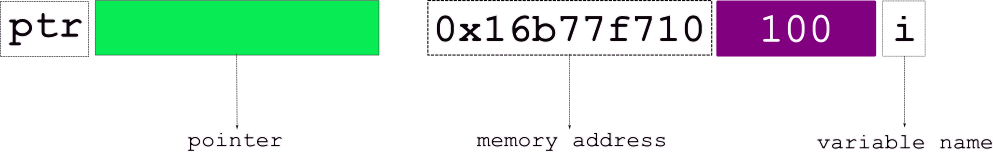

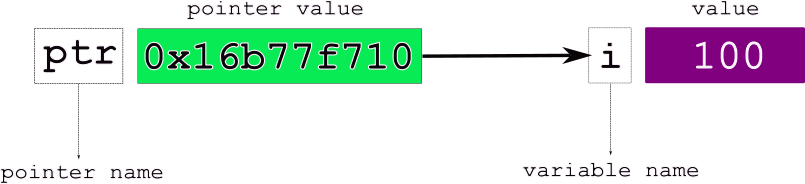

Now, what is a pointer, say ptr?

Well, a pointer “points to” → another object..in effect, points to a memory location!`

So, the pointer, e.g., ptr,

stores the address of the object it points to!

Final view of a pointer, ptr pointing to a variable, i,

The various elements from the above figure:

| variable name | i |

| stored at address | 0x16677f710 |

| value | 100 |

| pointer name | ptr |

| pointer value | 0x16677f710, i.e., i |

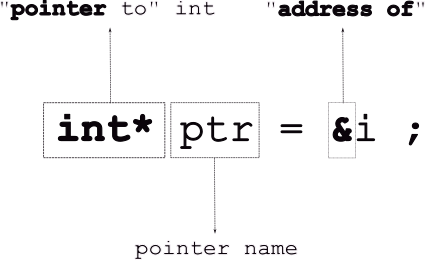

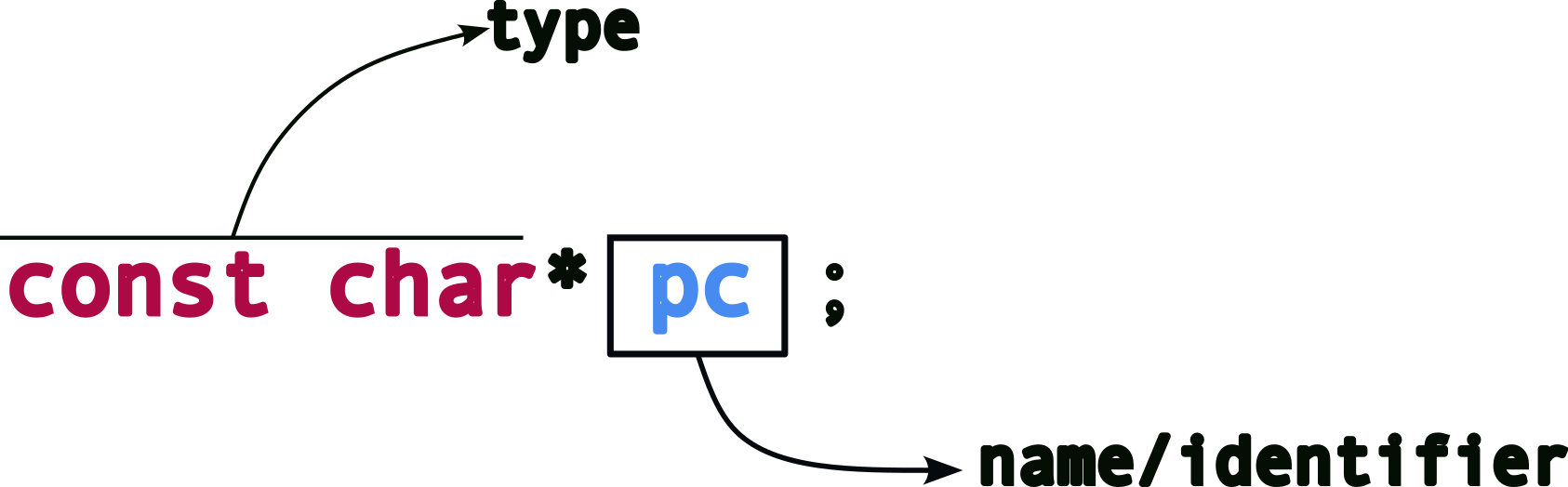

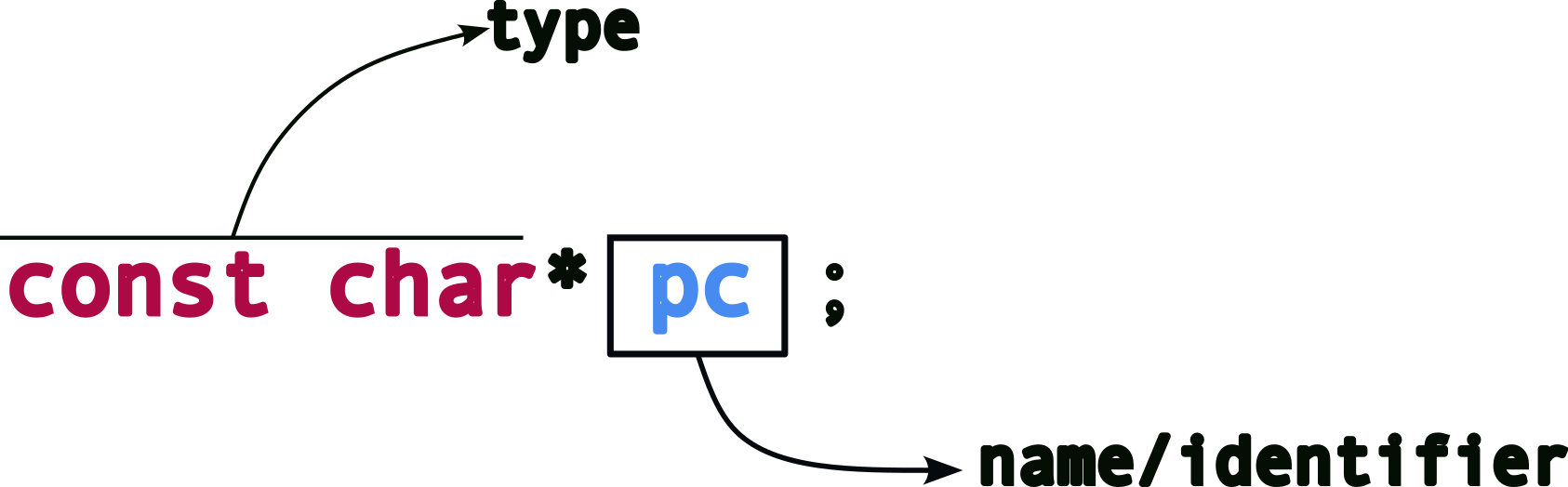

4.0.3 How do we declare/use pointers?

where,

Note, that the “address of”, & operator takes a variable and returns its address.

If you print out the pointer, printf("%p", ptr), you’ll get the address (i.e., the arrow).

Consider the following code example:

#include <stdio.h>

int main()

{

int i = 100 ;

int* p_int; // declare a pointer, NOT initialized

printf( "i = %d\t p_int = %p\n", i, p_int ) ;

p_int = &i ; //initialize pointer to point to address of 'i'

printf( "i = %d\t p_int = %p\t address of i = %p\n\n", i, p_int, &i ) ;

printf( "\n" ) ;

return 0 ;

}Program output:

inline_exec_tmp.c: In function main:

inline_exec_tmp.c:8:5: warning: p_int is used uninitialized in this function [-Wuninitialized]

8 | printf( "i = %d\t p_int = %p\n", i, p_int ) ;

| ^~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

i = 100 p_int = 0x7fffffffe3a0

i = 100 p_int = 0x7fffffffe29c address of i = 0x7fffffffe29c

To follow the arrow, i.e., get the value in the location pointed to by it (or to modify the actual value), you must dereference the pointer as follows: *ptr.

For the above code, if we change the following line,

the output changes to:

i = 100 p = 0x7fffffffe338 address of i = 0x7fffffffe338 value at i = 100What happens when you run the following?

#include <stdio.h>

int main()

{

int i = 100 ;

int* p_int; // declare a pointer, NOT initialized

printf( "i = %d\t p_int = %p\n", i, p_int ) ;

p_int = &i ; //initialize pointer to point to address of 'i'

printf( "i = %d\t p = %p\t address of i = %p\t value at i = %d\n\n", i, p_int, &i, *p_int ) ;

int j = 200 ;

p_int = j ; // works but NOT what we want

printf( "i = %d\t p_int = %p\t address of i = %p\n\n", i, p_int, &i ) ;

printf( "value at p_int = %d\n", *p_int ) ;

printf( "\n" ) ;

return 0 ;

}What can you do to fix the above code to make it compile?

Now, if we want to change the value of i from 100 to 200, using the pointer, we do:

#include <stdio.h>

int main()

{

int i = 100 ;

int* p_int; // declare a pointer, NOT initialized

printf( "i = %d\t p_int = %p\n", i, p_int ) ;

p_int = &i ; //initialize pointer to point to address of 'i'

printf( "i = %d\t p = %p\t address of i = %p\t value at i = %d\n\n", i, p_int, &i, *p_int ) ;

*p_int = 200 ;

printf( "i = %d\t p_int = %p\t address of i = %p\t value at i = %d\n\n", i, p_int, &i, *p_int ) ;

printf( "\n" ) ;

return 0 ;

}Pointers are necessary as they enable us to build linked data-structures (linked-lists, binary trees, etc…). Languages such as Java assume that every single object variable is a pointer, and since all object variables are pointers, they don’t need special syntax for them.

4.1 Pointers and Modifiers

We can use modifiers in front of types, e.g., const double pi = 3.14 ; which means that the variable pi cannot be modified in the program. Try the following program:

But, we can declare a pointer to it! We can even dereference it and access the value.

#include <stdio.h>

int main()

{

const double pi = 3.14 ;

// pi = 728.0 ; BAD!

const double* p_double = &pi ;

printf( "p_double points to the value = %f\n", *p_double ) ;

printf( "\n" ) ;

return 0 ;

}Will this work?

The modifiers can be applied to the pointers themselves and not only the variables they point to. So, the following are all valid C statements:

double d ; // a regular 'double'

double* p_d ; // a pointer to a 'double'

const double cd ; // a 'constant' double

const double* p_cd ; // a pointer to double that is constant

double* const c_pd ; // a 'constant pointer' to a double

const double* const c_p_cd ; // a constant pointer to a double that is a constant4.2 Arrays

We have seen arrays before…in the form of strings!

Arrays are a contiguous collection of data items, all of the same type.

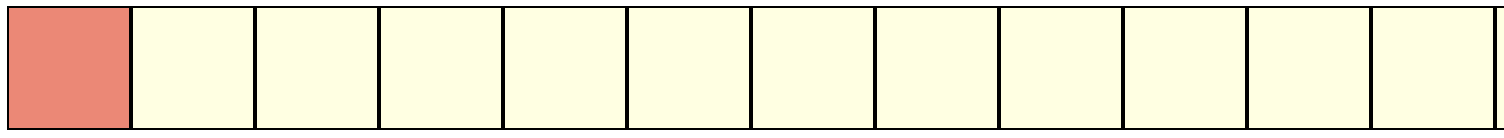

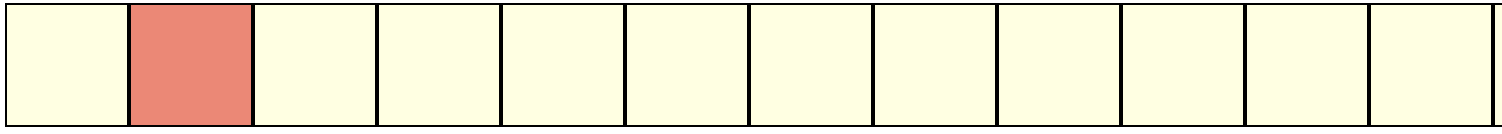

so, if we declare an integer array,

this is what it looks like in memory:

a →

To access individual elements of the array, use the array access operator, []

e.g., a[0]:

e.g., a[2]:

Now, there’s a reason why I drew it like this:

a →

because, a is actually a…pointer…to the start of the array, i.e., the first element.

When we access an array item, e.g., a[2]…C is basically doing pointer arithmetic. So,

a[2] → *(a+2):

a |

pointer to start of array |

a+2 |

add 2 to the pointer, i.e., to the address a |

*(a+2) |

dereference address a+2, i.e., get data from location a+2 |

A simple example of using pointers vs array name:

#include <stdio.h>

int main()

{

int a[5] = { 100, 200, 300, 400, 500 } ;

int* p_a = a ;

printf( "%d\n", *(a+2) ) ;

printf( "%d\n", *(p_a++) ) ;

printf( "\n" ) ;

return 0 ;

}Program output:

300

100

4.3 Pointers | Memory Allocation

Typically, in C, there are two types of memory allocations:

- static

- dynamic

4.3.1 Static Memory Allocation

- most definitions we encounter

- all local, global, file scope variables

| Examples |

|---|

int i ; |

struct student st ; |

char name[128] ; |

const double* pd ; |

| … |

- memory allocated at compile time

- compiler needs to to exactly how much memory

the following is illegal: char array[n] ;

- the value of n can change at run time

- e.g., we can do

n = 200 ; before the array is defined

[caveat: newer C compilers may allow it. Avoid doing this.]

4.3.2 Dynamic Memory Allocation

- everything allocated using

malloc[and other calls as we shall see] memory allocated at run time

char* pc = (char*) malloc( 128*sizeof(char) ) ;128 bytesof memory allocated dynamically- this is completely legal: (char) malloc( nsizeof(char) )`

value of n can change at run time

4.3.3 C Standard Library Functions for Memory Allocation

| function name | bytes allocated | inititalize? |

|---|---|---|

malloc(size) |

size |

no |

calloc<br>(nmemb,size) |

nmemb*size |

0 |

realloc<br>(*ptr,size) |

grow/shrink*ptr to size<Scb>|origptr| |free(ptr)` |

n/a |

all defined in <stdlib.h>.

4.3.3.1 size_t

- unsigned integer data type

- lots of system calls use

size_t - not a real data type

typedefis used → depends on platform!

| platform | size_t |

|---|---|

| 32 bit | unsigned int |

| 64 bit | unsigned long long int |

4.3.3.2 malloc

Signature: void *malloc(size_t size);

- allocate raw memory of

size_t sizebytes - no initialization

- “data” is whatever is in memory

- use

memset()to set memory to0 - returns → pointer to newly allocated memory

#include <stdio.h>

#include <stdlib.h>

#include <assert.h>

// ALWAYS good to define array sizes as such constants

#define ARRAY_SIZE 16

int main()

{

// random size

int* temp = (int*) malloc( 12323445 ) ;

int* pi = (int*) malloc( sizeof(int) * ARRAY_SIZE ) ;

assert(pi) ; // check that a valid address was returned

pi[0] = 233 ;

printf( "After malloc\n") ;

for( unsigned int i = 0 ; i < ARRAY_SIZE ; ++i )

printf( "pi[%d] = %d\t", i, pi[i] ) ;

printf( "\n" ) ;

// remember to release the memory!

free(pi) ;

free(temp) ;

printf("\n") ;

return 0 ;

}Let’s look at another example:

#include <stdio.h>

#include <stdlib.h>

#include <assert.h>

int main()

{

// Create a new string to store a Haiku

char haiku[] = "'You Laughed While I Slept'\n\

- by Bertram Dobell\n\

\n\

You laughed while I wept,\n\

Yet my tears and your laughter\n\

Had only one source." ;

char* new_haiku = (char*)malloc( sizeof(char)*128 ) ;

assert(new_haiku) ; // check if we got a valid pointer

// copy from one to the other?

new_haiku = haiku ;

printf( "haiku = %s\n\n", haiku ) ;

printf( "new_haiku = %s\n\n", new_haiku ) ;

// Exactly the same, so all ok?

// But, what if we do this?

haiku [1] = '#' ;

// new_haiku has changed!

printf( "new_haiku = %s\n\n", new_haiku ) ;

printf("\n") ;

return 0 ;

}The above change happens, because the copy new_haiku = haiku was a shallow copy, i.e., it only copied the pointers and not the underlying string!

To fix this problem, do a deep copy instead.

#include <stdio.h>

#include <stdlib.h>

#include <assert.h>

int main()

{

// Create a new string to store a Haiku

char haiku[] = "'You Laughed While I Slept'\n\

- by Bertram Dobell\n\

\n\

You laughed while I wept,\n\

Yet my tears and your laughter\n\

Had only one source." ;

char* new_haiku = (char*)malloc( sizeof(char)*128 ) ;

assert(new_haiku) ; // check if we got a valid pointer

// copy from one to the other?

// SHALLOW COPY

// new_haiku = haiku ;

// Deep Copy

unsigned int i ;

for( i = 0 ; i < sizeof(haiku) ; ++i )

new_haiku[i] = haiku[i] ;

// modify original Haiku

haiku [1] = '#' ;

printf( "haiku = %s\n\n", haiku ) ;

printf( "new_haiku = %s\n\n", new_haiku ) ; //unchanged

printf("\n") ;

return 0 ;

}Note: we can use a C standard library function, strcpy() (defined in <string.h>) to do the copy:

#include <stdio.h>

#include <stdlib.h>

#include <assert.h>

#include <string.h>

int main()

{

// Create a new string to store a Haiku

char haiku[] = "'You Laughed While I Slept'\n\

- by Bertram Dobell\n\

\n\

You laughed while I wept,\n\

Yet my tears and your laughter\n\

Had only one source." ;

char* new_haiku = (char*)malloc( sizeof(char)*128 ) ;

assert(new_haiku) ; // check if we got a valid pointer

// Deep Copy

strcpy( new_haiku, haiku ) ;

// modify original Haiku

haiku [1] = '#' ;

printf( "haiku = %s\n\n", haiku ) ;

printf( "new_haiku = %s\n\n", new_haiku ) ; //unchanged

printf("\n") ;

return 0 ;

}What happens if I do,

4.3.3.3 calloc

Signature: void* calloc(size_t nmemb, size_t size);

- allocate raw memory of

nmemb*sizebytes - guaranteed to initialize memory to

0 - e.g.,

calloc(10, sizeof(int)) ;- creates memory for

10integers - each one set to

0

- creates memory for

- returns → pointer to newly allocated memory

#include <stdio.h>

#include <stdlib.h>

#include <assert.h>

// ALWAYS good to define array sizes as such constants

#define ARRAY_SIZE 16

int main()

{

// replace with calloc()

// int* pi = (int*) malloc( sizeof(int) * ARRAY_SIZE ) ;

int* pi = (int*) calloc( ARRAY_SIZE, sizeof(int) ) ; // notice the difference in args

assert(pi) ; // check that a valid address was returned

pi[0] = 233 ;

printf( "After malloc\n") ;

for( unsigned int i = 0 ; i < ARRAY_SIZE ; ++i )

printf( "pi[%d] = %d\t", i, pi[i] ) ;

printf( "\n" ) ;

printf("\n") ;

return 0 ;

}Compare the differences, if any, in the outputs of the above two pieces of code.

4.3.3.4 realloc

Signature: void* realloc(void *ptr, size_t size);

- reallocate the memory pointed to by

ptr - i.e., grow/shrink it to the new

size - grows/shrink in place if possible

- if not enough space to grow,

- allocate new memory of

size(create a new pointer,ptr2) - copy as much of old data as possible, i.e., from

ptr→ptr2 - free old pointer, i.e.,

ptr - returns → one of,

*ptrif the new size fits*ptr2, i.e., pointer to new allocation

There are some “oddities” you need to be aware of while using realloc():

- if original allocation was using

calloc()→ remember it sets the memory to0 reallocwill not set extended memory to0- e.g.,

- if

calloccreated a 10 byte array,pa - initialized to

0 realloc(pa, 20)- last

10bytes not set to0

- if

Adapting the code example from earlier, let’s assume we now have a much longer poem to copy,

#include <stdio.h>

#include <stdlib.h>

#include <assert.h>

#include <string.h>

// ALWAYS good to define array sizes as such constants

#define HAIKU_SIZE 128

int main()

{

// Create a new string to store the Haiku

char haiku[] = "'You Laughed While I Slept'\n\

- by Bertram Dobell\n\

\n\

You laughed while I wept,\n\

Yet my tears and your laughter\n\

Had only one source." ;

// get space to store it

char* new_haiku = (char*)malloc( sizeof(char)*128 ) ;

// Deep Copy

strcpy( new_haiku, haiku ) ;

// ... same code as before

// Now we have a new, LONGER, poem

char twain_poem[] = "'These Annual Bills'\n\

- Mark Twain\n\

\n\

These annual bills! these annual bills!\n\

How many a song their discord trills \n\

Of 'truck' consumed, enjoyed, forgot,\n\

Since I was skinned by last year's lot!\n\

Those joyous beans are passed away;\n\

\n\

Those onions blithe, O where are they?\n\

Once loved, lost, mourned-now vexing ILLS\n\

Your shades troop back in annual bills! \n\

\n\

And so 'twill be when I'm aground \n\

These yearly duns will still go round, \n\

While other bards, with frantic quills,\n\

\n\

Shall damn and damn these annual bills!" ;

// Deep Copy?

strcpy( new_haiku, twain_poem ) ;

printf( "\n---------------\n" ) ;

printf( "%s\n\n", twain_poem ) ;

printf( "new_haiku = %s\n\n", new_haiku ) ;

printf( "new_haiku size = %lu \t twain size = %lu", sizeof(new_haiku), sizeof(twain_poem) ) ;

printf("\n") ;

return 0 ;

}We see some random behaviors. Program can crash!

Some important issues: 1. strcpy() does not do a bounds check while copying! Use strncpy() instead. 2. we need to increase the space for new_haiku, so we use realloc().

#include <stdio.h>

#include <stdlib.h>

#include <assert.h>

#include <string.h>

// ALWAYS good to define array sizes as such constants

#define HAIKU_SIZE 128

#define POEM_SIZE 1024

int main()

{

// Create a new string to store the Haiku

char haiku[] = "'You Laughed While I Slept'\n\

- by Bertram Dobell\n\

\n\

You laughed while I wept,\n\

Yet my tears and your laughter\n\

Had only one source." ;

// get space to store it

char* new_haiku = (char*)malloc( sizeof(char)*128 ) ;

// Deep Copy

strcpy( new_haiku, haiku ) ;

// ... same code as before

// Now we have a new, LONGER, poem

char twain_poem[] = "'These Annual Bills'\n\

- Mark Twain\n\

\n\

These annual bills! these annual bills!\n\

How many a song their discord trills \n\

Of 'truck' consumed, enjoyed, forgot,\n\

Since I was skinned by last year's lot!\n\

Those joyous beans are passed away;\n\

\n\

Those onions blithe, O where are they?\n\

Once loved, lost, mourned-now vexing ILLS\n\

Your shades troop back in annual bills! \n\

\n\

And so 'twill be when I'm aground \n\

These yearly duns will still go round, \n\

While other bards, with frantic quills,\n\

\n\

Shall damn and damn these annual bills!" ;

// realloc for more space

new_haiku = (char*) realloc( new_haiku, POEM_SIZE ) ;

assert(new_haiku) ; // ALWAYS CHECK!

// Deep Copy?

strcpy( new_haiku, twain_poem ) ;

printf( "\n---------------\n" ) ;

printf( "%s\n\n", twain_poem ) ;

printf( "new_haiku = %s\n\n", new_haiku ) ;

printf( "new_haiku size = %lu \t twain size = %lu", sizeof(new_haiku), sizeof(twain_poem) ) ;

printf("\n") ;

return 0 ;

}4.3.3.5 free()

Signature: void free(void *ptr);

- releases memory block referenced by

ptr- other processes/OS can now use it

- only works for dynamically allocated memory

- do not try for static variables

- “undefined” behavior

- no action if

ptrisNULL - does not return anything

Modifying the above code block:

#include <stdio.h>

#include <stdlib.h>

#include <assert.h>

// ALWAYS good to define array sizes as such constants

#define ARRAY_SIZE 16

int main()

{

// replace with calloc()

// int* pi = (int*) malloc( sizeof(int) * ARRAY_SIZE ) ;

int* pi = (int*) calloc( ARRAY_SIZE, sizeof(int) ) ; // notice the difference in args

assert(pi) ; // check that a valid address was returned

pi[0] = 233 ;

printf( "After malloc\n") ;

for( unsigned int i = 0 ; i < ARRAY_SIZE ; ++i )

printf( "pi[%d] = %d\t", i, pi[i] ) ;

printf( "\n" ) ;

// double the size o

realloc( pi, ARRAY_SIZE*2 ) ;

printf( "After realloc\n") ;

for( unsigned int i = 0 ; i < ARRAY_SIZE*2 ; ++i )

printf( "pi[%d] = %d\t", i, pi[i] ) ;

printf( "\n" ) ;

// I WANT TO BREAK FREE!

free(pi) ;

printf("\n") ;

return 0 ;

}A few things to keep in mind w.r.t. memory allocation in C:

- If you want to allocate an array, then you have to do the math yourself for the array size. For example,

int *arr = malloc(sizeof(int) * n);to allocate an array ofints with a length ofn. mallocis not guaranteed to initialize its memory to0. You must make sure that your array gets initialized. It is not uncommon to do amemset(arr, 0, sizeof(int) * n);to set the memory0.callocis guaranteed to initialize all its allocated memory to0.

4.3.4 Common Memory Allocation Errors

Be aware of these issues as you use pointers and memory allocation/free.

Note: valgrind will help you debug the last three of these issues.

- allocation error → memory not allocated

- maybe not enough memory in system

- returns

NULL - check for the return value before use!

#include <stdlib.h>

int

main(void)

{

int *a = malloc(sizeof(int));

/* Error: did not check return value! */

*a = 1;

free(a);

return 0;

}Program output:

- dangling pointer → accessing free’d pointer

- mem may have been reallocated → someone else did a

malloc()maybe - bad things happen (crashes, etc.)

- avoid

free()until all references are done

- mem may have been reallocated → someone else did a

#include <stdlib.h>

int

main(void)

{

int *a = malloc(sizeof(int));

if (a == NULL) return -1;

free(a);

/* Error: accessing what `a` points to after `free`! */

return *a;

}Program output:

- memory leaks → allocate but forget to

free()!- memory never get freed/released

- over time, less memory available

- can slow down/crash entire system!

- always pair a

free()with an allocation

#include <stdlib.h>

int

main(void)

{

int *a = malloc(sizeof(int));

if (!a) return -1;

a = NULL;

/* Error: never `free`d `a` and no references to it remain! */

return 0;

}Program output:

- double free →

freememory twice- bad (unpredictable) things happen!

- accidentally free memory used elsewhere

- memory allocation logic can crash!

#include <stdlib.h>

int

main(void)

{

int *a = malloc(sizeof(int));

if (!a) return -1;

free(a);

free(a);

/* Error: yeah, don't do that! */

return 0;

}Program output:

free(): double free detected in tcache 2

make[1]: *** [Makefile:30: inline_exec] Aborted4.4 Exercises

4.4.1 C is a Thin Language Layer on Top of Memory

We’re going to look at a set of variables as memory. When variables are created globally, they are simply allocated into subsequent addresses.

#include <stdio.h>

#include <string.h>

void print_values(void);

unsigned char a = 1;

int b = 2;

struct foo {

long c_a, c_b;

int *c_c;

};

struct foo c = (struct foo) { .c_a = 3, .c_c = &b };

unsigned char end;

int

main(void)

{

size_t vars_size;

unsigned int i;

unsigned char *mem;

/* Q1: What would you predict the output of &end - &a is? */

printf("Addresses:\na @ %p\nb @ %p\nc @ %p\nend @ %p\n"

"&end - &a = %ld\n", /* Note: you can split strings! */

&a, &b, &c, &end, &end - &a);

printf("\nInitial values:\n");

print_values();

/* Q2: Describe what these next two lines are doing. */

vars_size = &end - &a;

mem = &a;

/* Q3: What would you expect in the following printout (with the print uncommented)? */

printf("\nPrint out the variables as raw memory\n");

for (i = 0; i < vars_size; i++) {

unsigned char c = mem[i];

// printf("%x ", c);

}

/* Q4: What would you expect in the following printout (with the print uncommented)? */

memset(mem, 0, vars_size);

/* memset(a, b, c): set the memory starting at `a` of size `c` equal `b` */

printf("\n\nPost-`memset` values:\n");

// print_values();

return 0;

}

void

print_values(void)

{

printf("a = %d\nb = %d\nc.c_a = %ld\nc.c_b = %ld\nc.c_c = %p\n",

a, b, c.c_a, c.c_b, c.c_c);

}Program output:

Addresses:

a @ 0x555555558010

b @ 0x555555558014

c @ 0x555555558020

end @ 0x555555558039

&end - &a = 41

Initial values:

a = 1

b = 2

c.c_a = 3

c.c_b = 0

c.c_c = 0x555555558014

Print out the variables as raw memory

Post-`memset` values:Question Answer Q1-4 in the code, uncommenting and modifying where appropriate.

4.4.1.1 Takeaways

- Each variable in C (including fields in structs) want to be aligned on a boundary equal to the variable’s type’s size. This means that a variable (

b) with an integer type (sizeof(int) == 4) should always have an address that is a multiple of its size (&b % sizeof(b) == 0, so anint’s address is always divisible by4, along’s by8). - The operation to figure out the size of all the variables,

&end - &a, is crazy. We’re used to performing math operations values on things of the same type, but not on pointers. This is only possible because C sees the variables are chunks of memory that happen to be laid out in memory, one after the other. - The crazy increases with

mem = &a, and our iteration throughmem[i]. We’re able to completely ignore the types in C, and access memory directly!

Question: What would break if we changed char a; into int a;? C doesn’t let us do math on variables of any type. If you fixed compilation problems, would you still get the same output?

4.4.2 Quick-and-dirty Key-Value Store

Please read the man pages for lsearch and lfind. man pages can be pretty cryptic, and you are aiming to get some idea where to start with an implementation. An simplistic, and incomplete initial implementation:

#include <stdio.h>

#include <assert.h>

#include <search.h>

#define NUM_ENTRIES 8

struct kv_entry {

int key; /* only support keys for now... */

};

/* global values are initialized to `0` */

struct kv_entry entries[NUM_ENTRIES];

size_t num_items = 0;

/**

* Insert into the key-value store the `key` and `value`.

* Return `0` on successful insertion of the value, or `-1`

* if the value couldn't be inserted.

*/

int

put(int key, int value)

{

return 0;

}

/**

* Attempt to get a value associated with a `key`.

* Return the value, or `0` if the `key` isn't in the store.

*/

int

get(int key)

{

return 0;

}

int

compare(const void *a, const void *b)

{

/* We know these are `int`s, so treat them as such! */

const struct kv_entry *a_ent = a, *b_ent = b;

if (a_ent->key == b_ent->key) return 0;

return -1;

}

int

main(void)

{

struct kv_entry keys[] = {

{.key = 1},

{.key = 2},

{.key = 4},

{.key = 3}

};

int num_kv = sizeof(keys) / sizeof(keys[0]);

int queries[] = {4, 2, 5};

int num_queries = sizeof(queries) / sizeof(queries[0]);

int i;

/* Insert the keys. */

for (i = 0; i < num_kv; i++) {

lsearch(&keys[i], entries, &num_items, sizeof(entries) / sizeof(entries[0]), compare);

}

/* Now lets lookup the keys. */

for (i = 0; i < num_queries; i++) {

struct kv_entry *ent;

int val = 0;

ent = lfind(&queries[i], entries, &num_items, sizeof(entries[0]), compare);

if (ent != NULL) {

val = ent->key;

}

printf("%d: %d @ %p\n", i, val, ent);

}

return 0;

}Program output:

0: 4 @ 0x55555555804c

1: 2 @ 0x555555558044

2: 0 @ (nil)You want to implement a simple “key-value” store that is very similar in API to a hash-table (many key-value stores are implemented using hash-tables!).

Questions/Tasks:

- Q1: What is the difference between

lsearchandlfind? Themanpages should help here (man 3 lsearch) (you can exit from amanpage using ‘q’). What operations would you want to perform with each (and why)? - Q2: The current implementation doesn’t include values at all. It returns the keys instead of values. Expand the implementation to properly track values.

- Q3: Encapsulate the key-value store behind the

putandgetfunctions. - Q4: Add testing into the

mainfor the relevant conditions and failures ingetandput.

4.5 Pointers | Memory Layouts and Interfaces

4.5.1 Basic Memory Layouts

It is important to understand how memory in C (e.g., variables, dynamic memory such from malloc(), .), once allocated, is laid out in memory. This particularly important when dealing with pointers, arrays, complex types built using struct, etc.

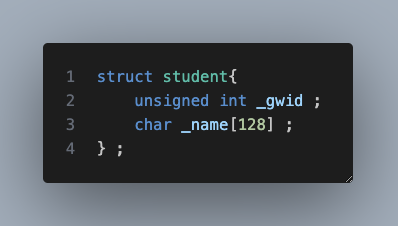

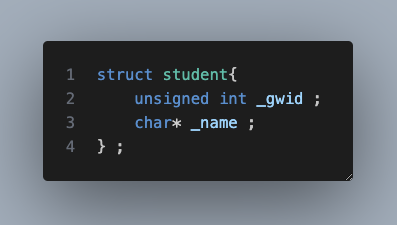

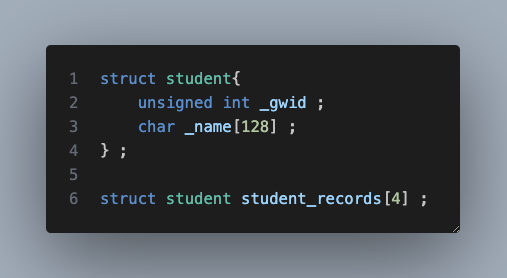

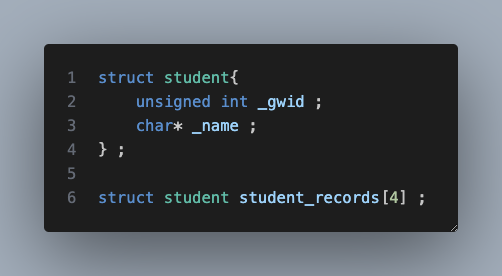

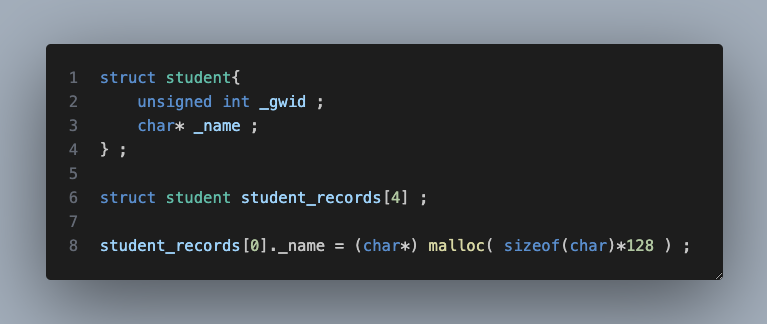

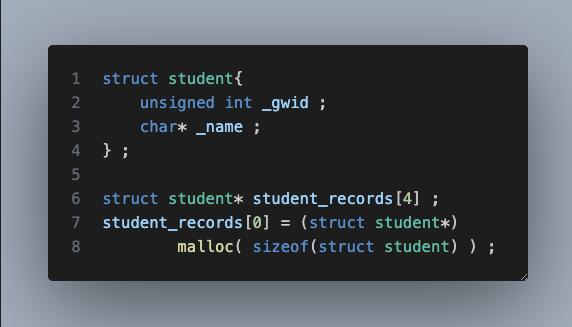

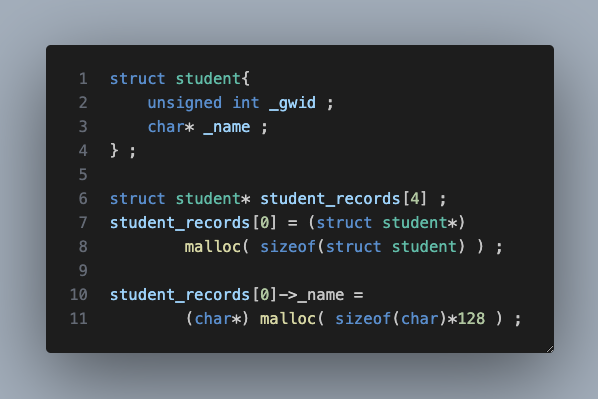

Consider the following example: suppose we need to create a database of student records. We can, perhaps, create a simle struct as follows:

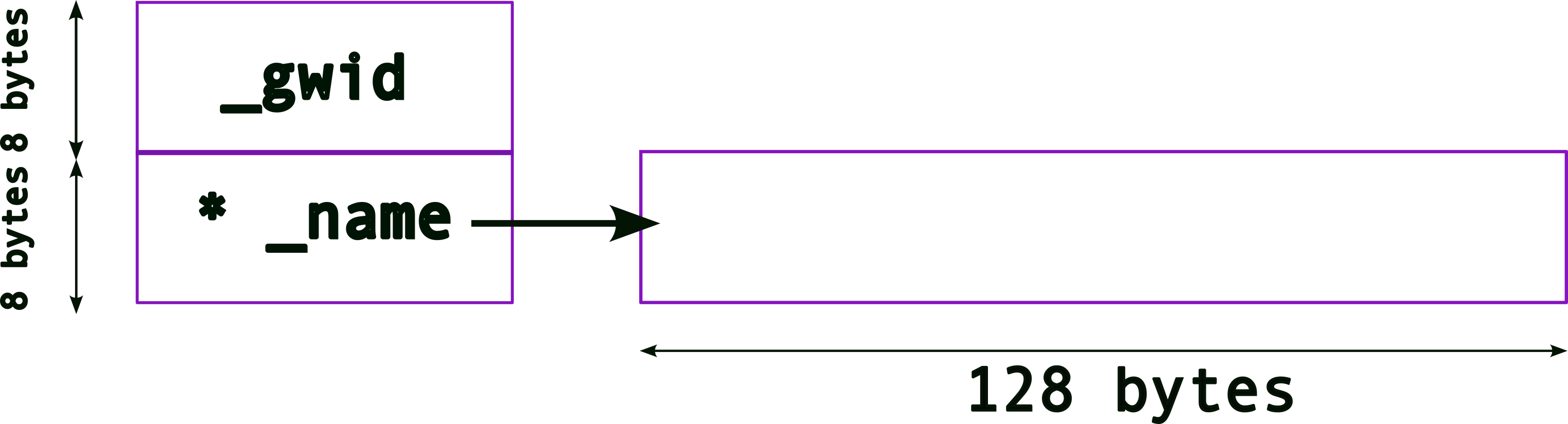

The memory layout for this struct will look like:

where the memory for the two variables, _gwid and name are (likely) consecutively laid out in memory (note: unsigned int is typically 4 bytes for 32-bit architectures but can be 8 bytes in 64-bit architectures.).

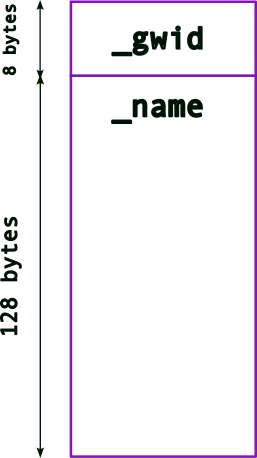

In the above example, the array, _name[128] has a fixed size, 128 bytes. While this may be enough for most names, there’s a likelihood that names can be longer. Hence, 128 bytes may not be enough. On the other hand, if most names in the database are much smaller, then we will end up wasting a lot of memory (especially as the number of student records grows). For instance, if most names take up only 64 bytes and if we have a 100, 000 records, then we’re wasting 6.4 million bytes! In some systems this can be prohibitive. Even otherwise, this is memory that other applications (or the OS) can use.

What is the solution then?

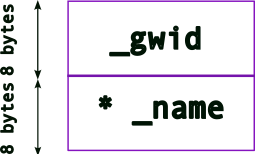

We can dynamically allocate memory for _name (based on how much memory is needed at runtime), i.e., make it a pointer!

So, once we update the struct, we get the following memory layout:

Note: a pointer’s size depends on the architecture:

architecture size (bytes) 32-bit 4 bytes 64-bit 8 bytes

So, now we must allocate memory for us to store names, .e.g.,

new_student._name = (char*) malloc( sizeof(char) * 128 ) ;

The memory layout will now look like,

4.5.2 Complex Memory Layouts

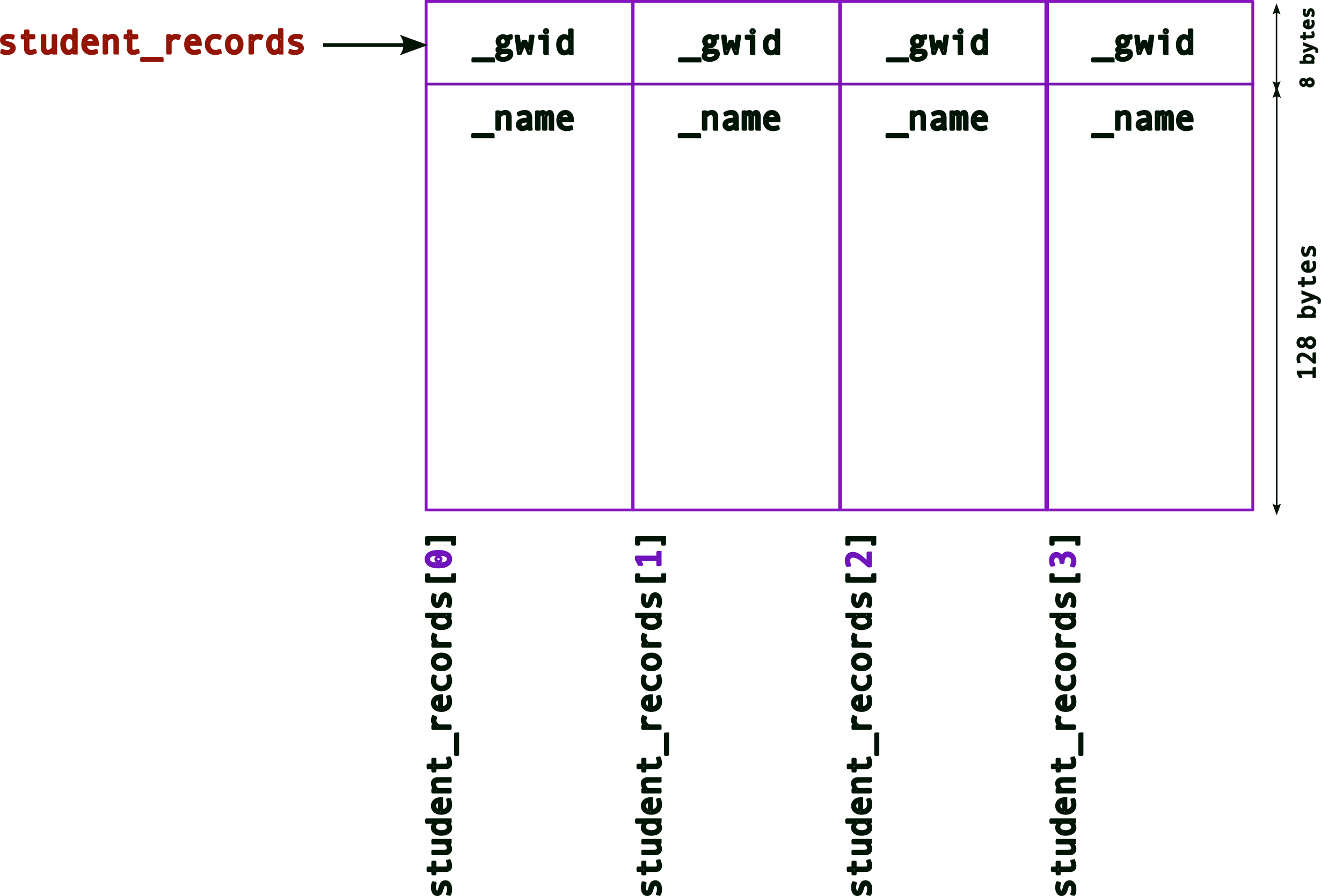

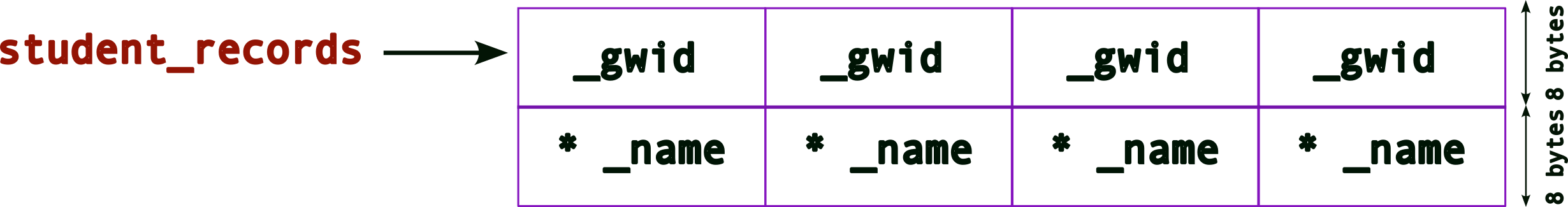

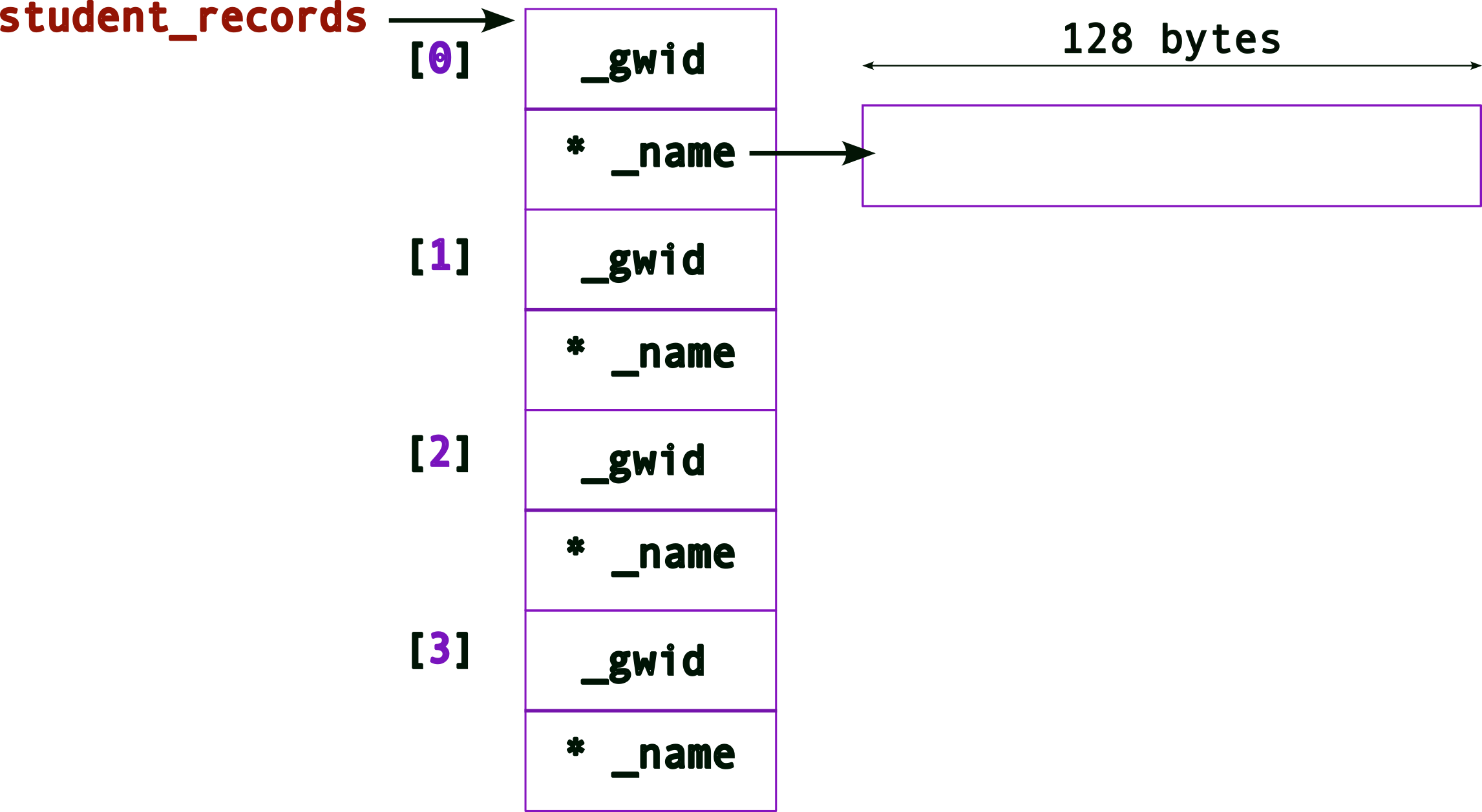

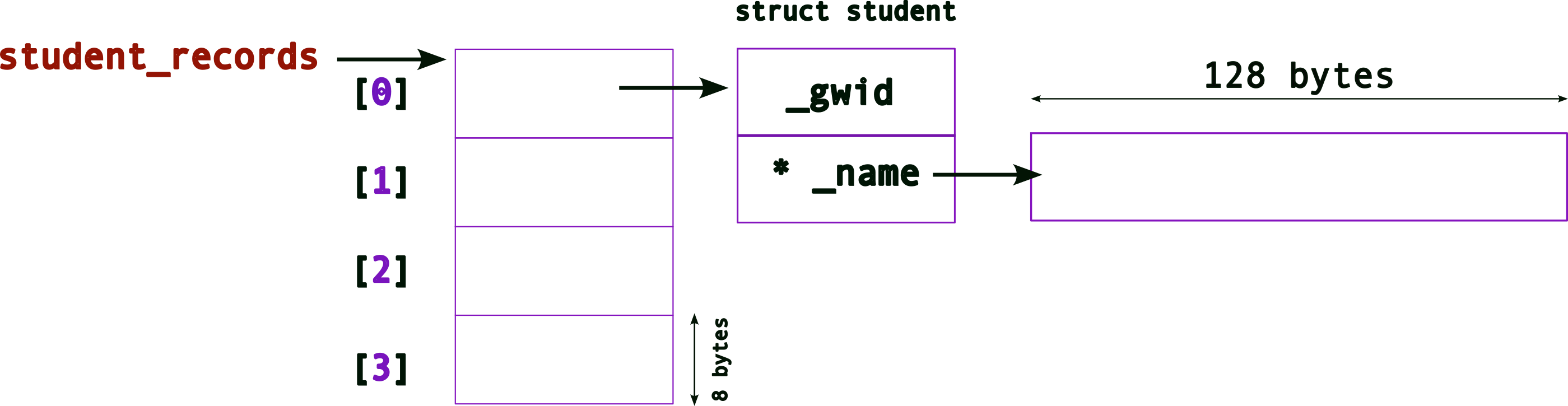

Since we want to create a database of student records, we need to story more than one. One way could be to create an array of struct student,

Recall (from above) what the memory layout for one struct (still with the hardcoded array for _name, i.e., an array of 128 bytes) looks like. Now, the memory layout for an array will look like,

Remember that,

- an array is contiguous memory of the same type

_student_records, the array name is a pointer to the start of the array memory address.

Hence, when you access each element of the array, e.g., `student_records[n]

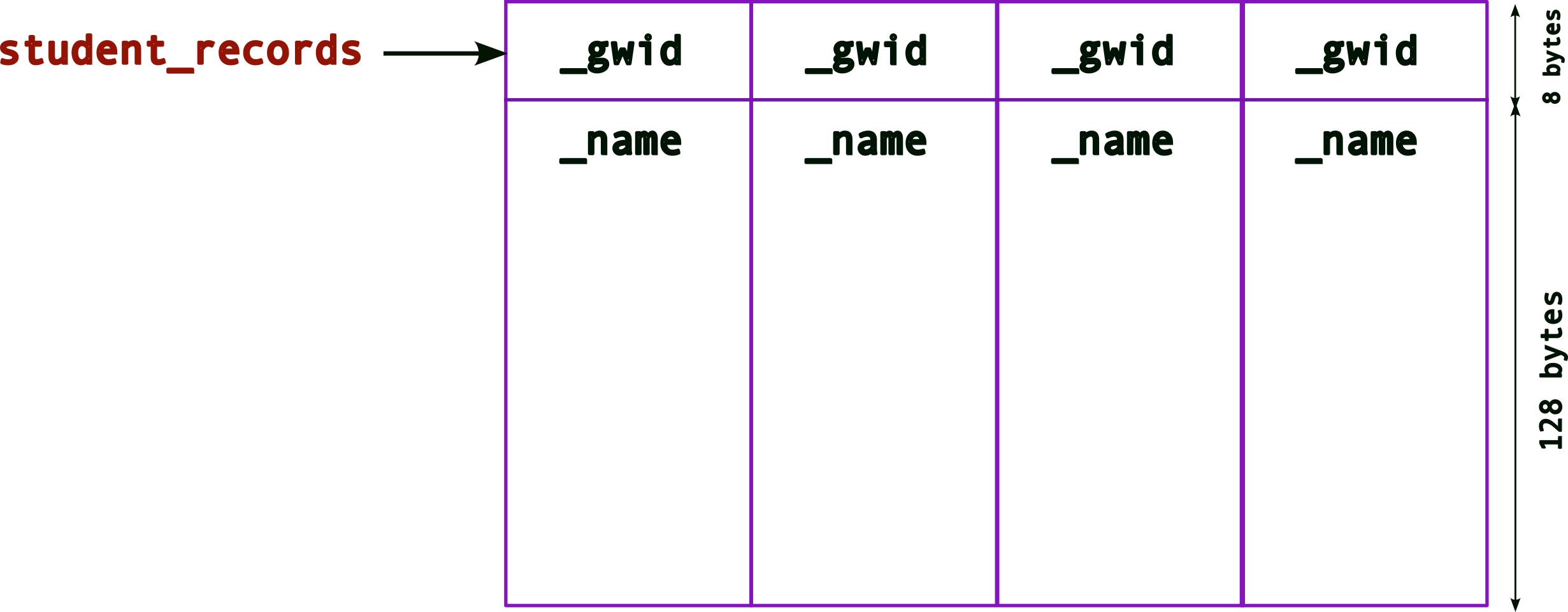

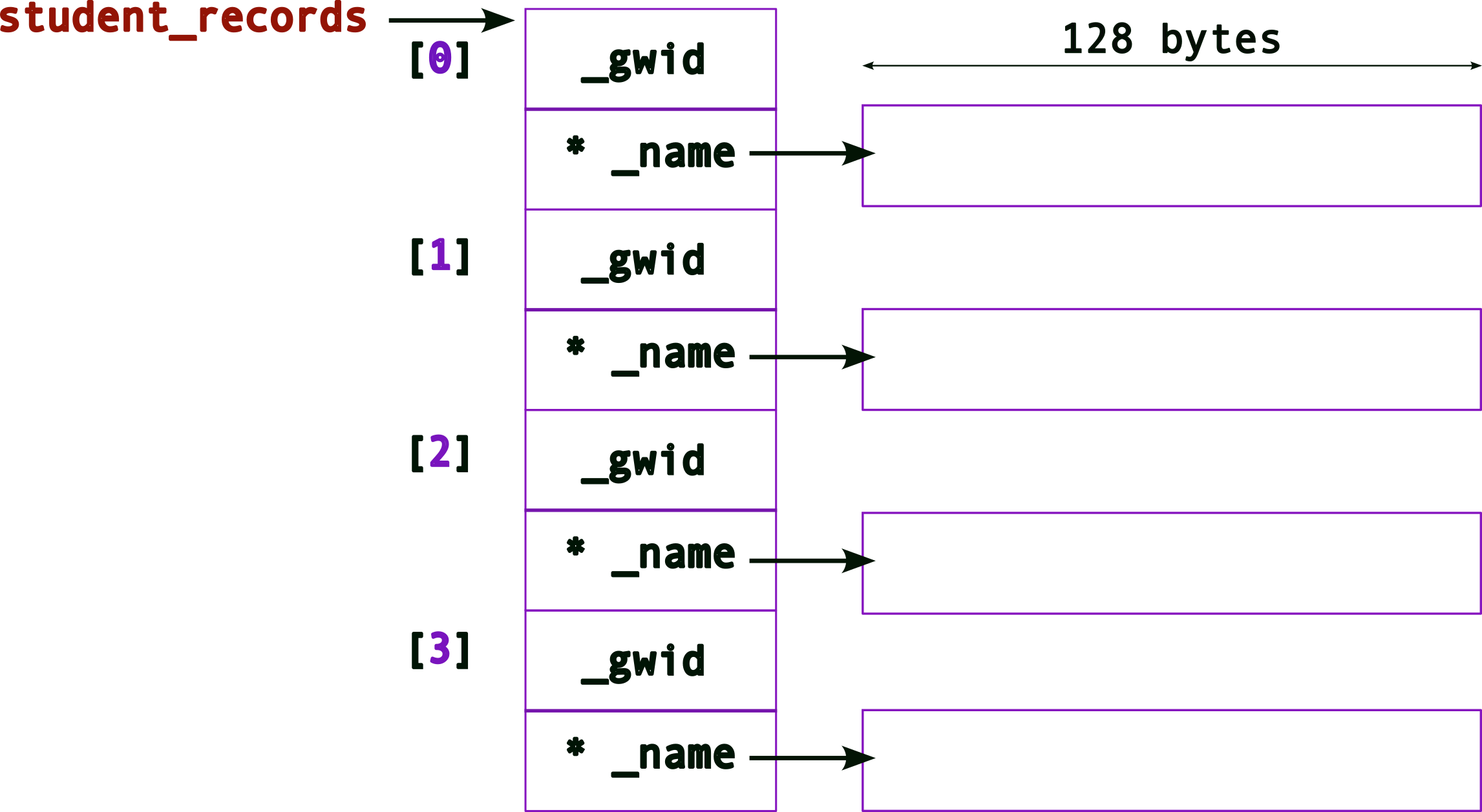

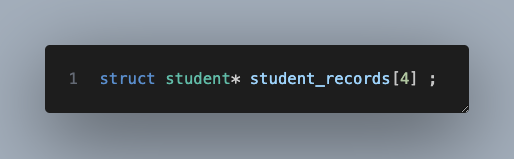

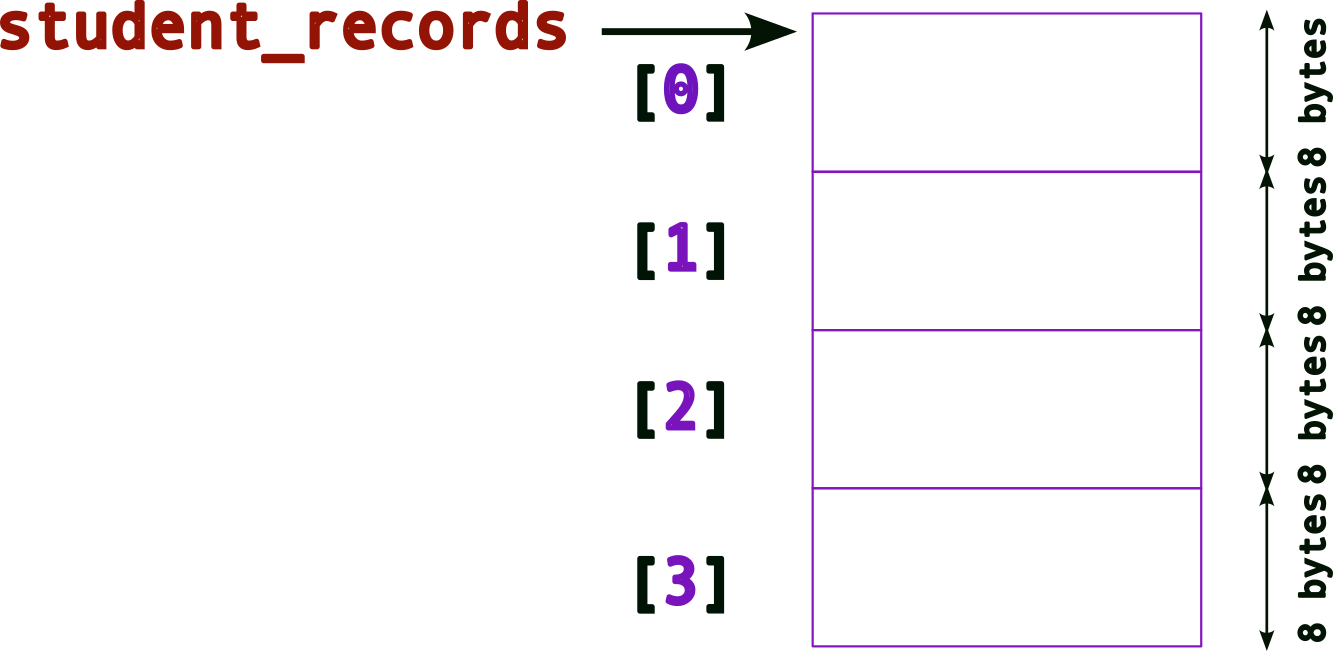

Of course, this still suffers from the earlier issue with the fixed value for the size of _name. Hence, we want to use the pointer version of the struct. So, if we create an array of struct student as follows,

struct student{

unsigned int _gwid ;

char* _name ; // this is a pointer now

} ;

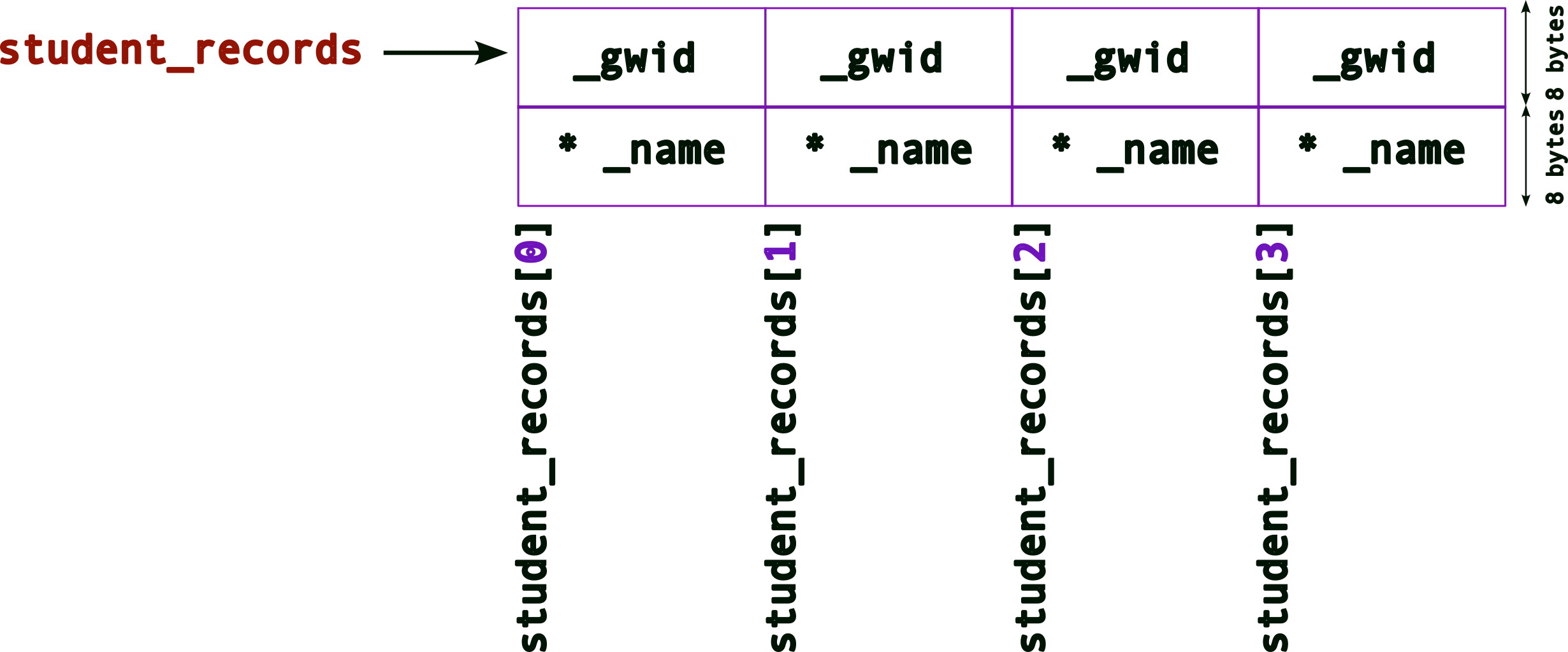

struct student student_records[4] ;The memory layout for the student_records array will look like (again recall what the memory layout for one struct will look like from above),

As before, to access each element of this array, we can use the [] operator,

Note, we need to be careful with how we access/allocate/use the memory now. More of that later.

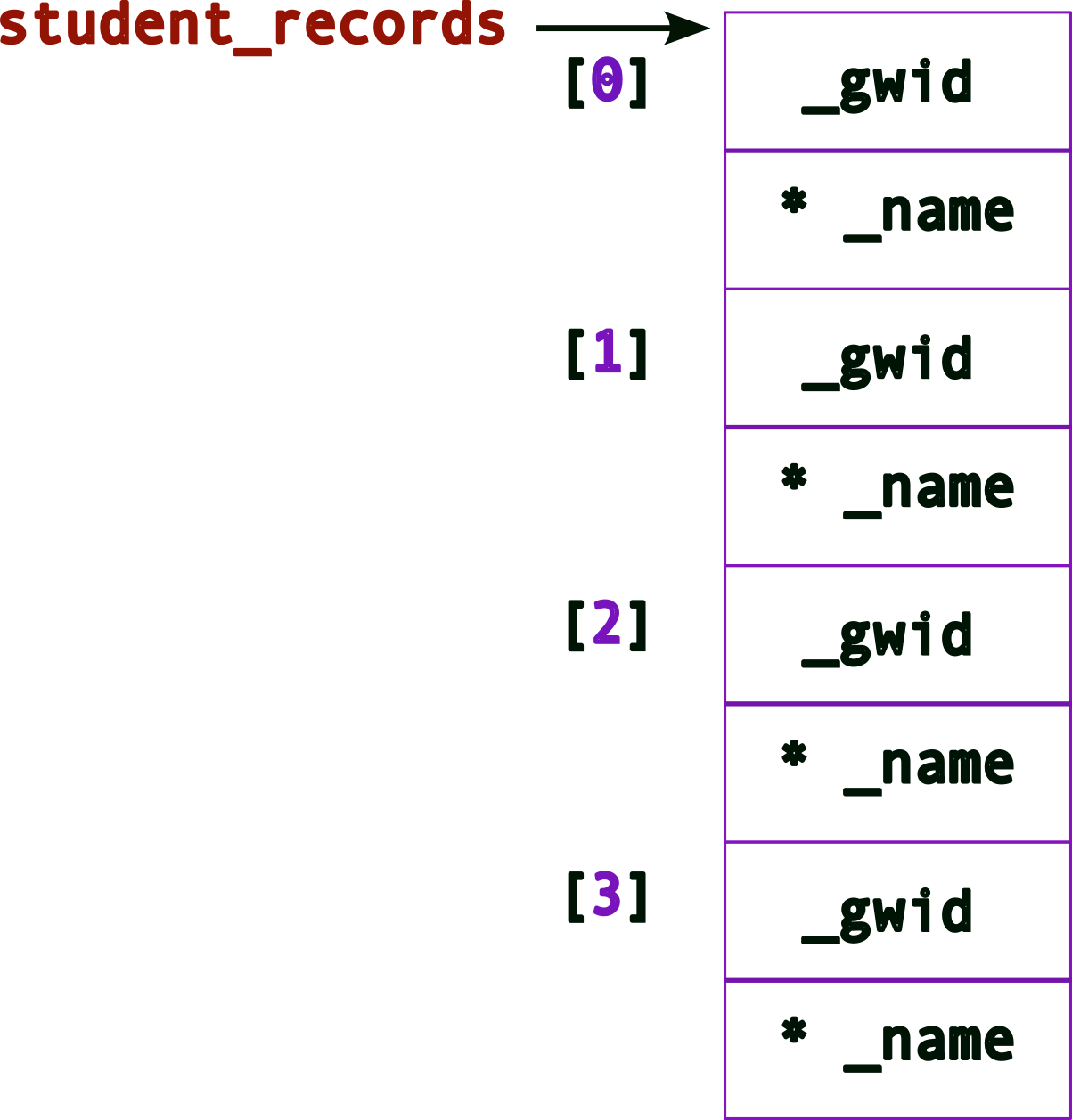

Let’s draw the same figure this way for convenience:

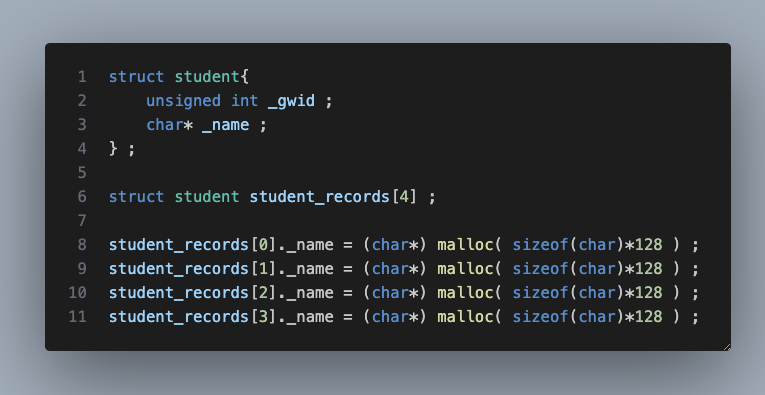

Remember that _name still needs memory! So we can allocate it, say using malloc(). Hence, allocating memory for the first _name may look like this:

struct student{

unsigned int _gwid ;

char* _name ; // this is a pointer now

} ;

struct student student_records[4] ;

student_records[0]._name = (char*) malloc( sizeof(char)*128 ) ; // allocate memory for first nameThe memory layout, after one _name allocation will look like:

Updated memory layout after all four _name allocations

and the corresponding code will look like,

struct student{

unsigned int _gwid ;

char* _name ; // this is a pointer now

} ;

struct student student_records[4] ;

student_records[0]._name = (char*) malloc( sizeof(char)*128 ) ; // allocate memory for first name

student_records[1]._name = (char*) malloc( sizeof(char)*128 ) ; // allocate memory for second name

student_records[2]._name = (char*) malloc( sizeof(char)*128 ) ; // allocate memory for third name

student_records[3]._name = (char*) malloc( sizeof(char)*128 ) ; // allocate memory for fourth nameLet’s go one step further…

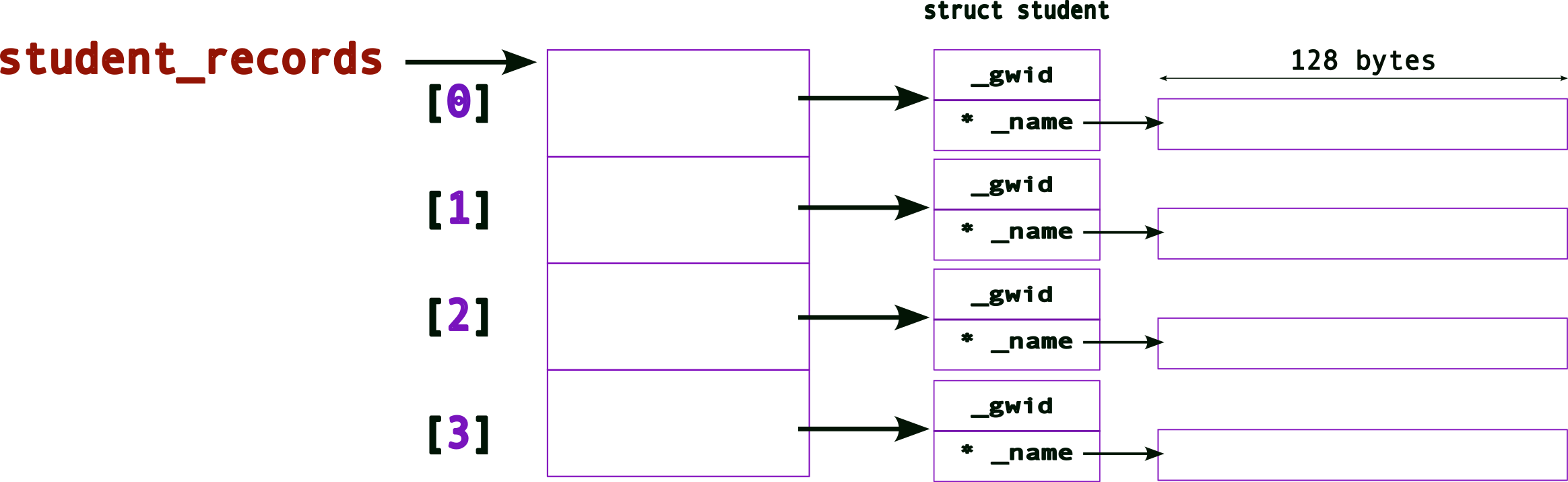

what does this do?

It creates an array of pointers to struct (remember to read the definitions from right to left)!

The memory layout now looks like,

(Compare this to the previous layouts)

Hence, when we dereference each element of the array, e.g., student_records[0], we get back, a struct student*, i.e., a pointer.

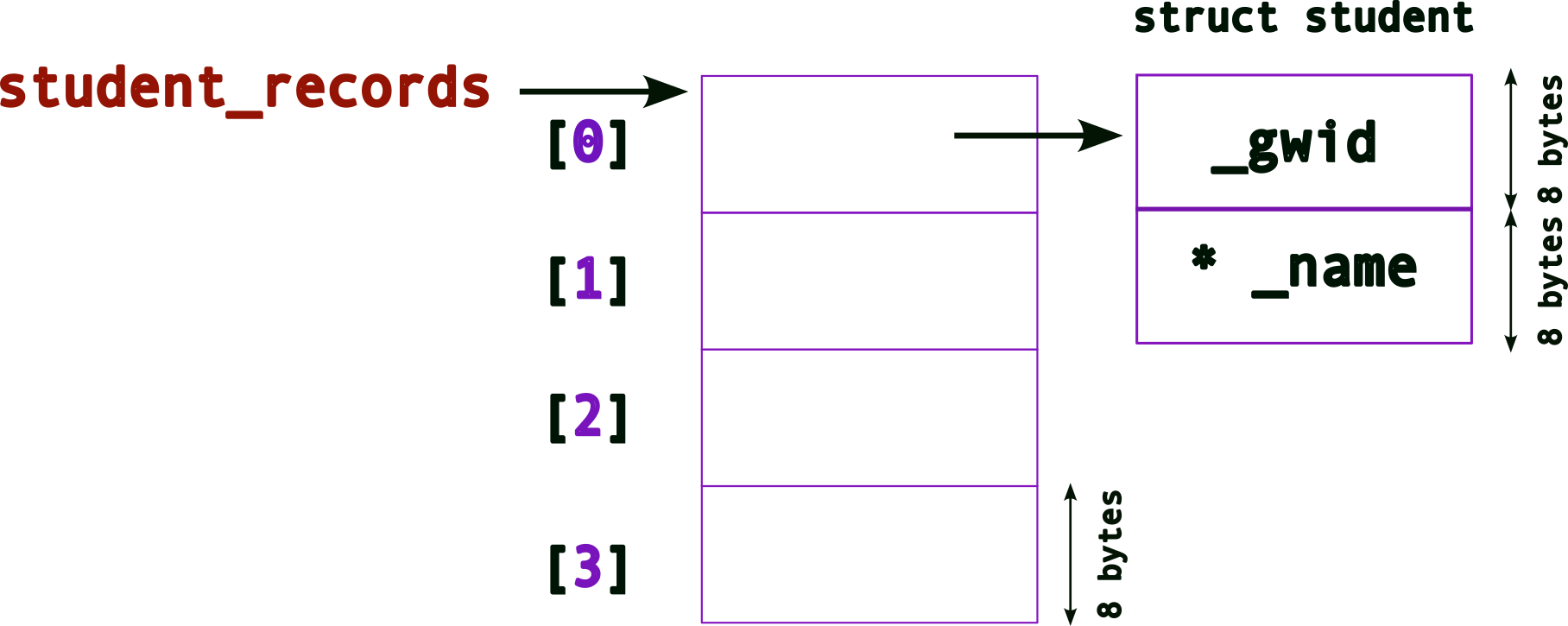

So, as a first step, we must allocate a struct!

struct student{

unsigned int _gwid ;

char* _name ; // this is a pointer now

} ;

struct student* student_records[4] ; // an array of pointers!

student_records[0] = (struct student*) malloc( sizeof(struct student) ) ; // allocate memory for a struct first!The memory layout after allocating the struct:

We’re still missing something → no memory space for _name (remember it is still a pointer)! As before, we need to use malloc() for this:

struct student{

unsigned int _gwid ;

char* _name ; // this is a pointer now

} ;

struct student* student_records[4] ; // an array of pointers!

student_records[0] = (struct student*) malloc( sizeof(struct student) ) ; // allocate memory for a struct first!

student_records[0]->name = (char*) malloc( sizeof(char) * 128 ) ; // allocate memory for name, note the -> operatorNote the

->operator!When we’re trying to access members of a

struct(orunion) using pointers, we use the->operator. note the differences between the following:

variable type access examples normal variable

struct student sibin ;sibin._name = malloc(...) ;

printf( "name = %s\n", sibin._name ) ;pointer variable

struct student* psibin = &sibin ;psibin->_name = malloc(...) ;

printf( "gwid = %d\n", psibin->_gwid ) ;

Now, the memory layout after allocating both, the struct and _name,

To allocate all of the required memory for the entire array, i.e., allocating for all struct and _name pointers, we need to,

struct student{

unsigned int _gwid ;

char* _name ; // this is a pointer now

} ;

struct student* student_records[4] ; // an array of pointers!

student_records[0] = (struct student*) malloc( sizeof(struct student) ) ; // allocate memory for a struct first!

student_records[0]->name = (char*) malloc( sizeof(char) * 128 ) ; // allocate memory for name, note the -> operator

student_records[1] = (struct student*) malloc( sizeof(struct student) ) ; // allocate memory for a struct first!

student_records[1]->name = (char*) malloc( sizeof(char) * 128 ) ; // allocate memory for name, note the -> operator

student_records[2] = (struct student*) malloc( sizeof(struct student) ) ; // allocate memory for a struct first!

student_records[2]->name = (char*) malloc( sizeof(char) * 128 ) ; // allocate memory for name, note the -> operator

student_records[3] = (struct student*) malloc( sizeof(struct student) ) ; // allocate memory for a struct first!

student_records[3]->name = (char*) malloc( sizeof(char) * 128 ) ; // allocate memory for name, note the -> operatorThe final memory layout will look like,

4.5.3 Interfaces

The problem is that the code to initialize the entire array (struct and _name) is quite laborious and ugly since we are initializing each one explicitly in code. Imagine if we had hundreds or thousands of new student records to initialize/store!

To solve this problem, we create an interface for creating new records. An “interface” is usually a fancy way of saying function. So, we define a new function say, create_student_record() as follows:

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

// a macro to define the largest size for a name

#define MAX_NAME_SIZE 256

struct student{

unsigned int _gwid ;

char* _name ;

} ;

struct student create_student_record( unsigned int gwid, char* name )

{

struct student new_student ;

new_student._gwid = gwid ;

new_student._name = (char*)malloc( sizeof(char)*MAX_NAME_SIZE ) ;

strcpy( new_student._name, name ) ; // deep copy

return new_student ;

}Program output:

/usr/bin/ld: /usr/lib/gcc/x86_64-linux-gnu/9/../../../x86_64-linux-gnu/Scrt1.o: in function `_start':

(.text+0x24): undefined reference to `main'

collect2: error: ld returned 1 exit status

make[1]: *** [Makefile:33: inline_exec_tmp] Error 1And we use the function as follows:

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

// a macro to define the largest size for a name

#define MAX_NAME_SIZE 256

struct student{

unsigned int _gwid ;

char* _name ;

} ;

struct student create_student_record( unsigned int gwid, char* name )

{

struct student new_student ;

new_student._gwid = gwid ;

new_student._name = (char*)malloc( sizeof(char)*MAX_NAME_SIZE ) ;

strcpy( new_student._name, name ) ; // deep copy

return new_student ;

}

int main()

{

struct student me = create_student_record( 920348, "sibin" ) ;

// An array of struct OBJECTS

struct student student_records[4] ;

// Use the create INTERFACE to fill the array

student_records[0] = create_student_record( 123, "ABC" ) ;

student_records[1] = create_student_record( 456, "DEF" ) ;

student_records[2] = create_student_record( 789, "GHI" ) ;

student_records[3] = create_student_record( 987, "JKL" ) ;

printf( "\n" ) ;

return 0 ;

}Program output:

What, if anything, is the problem here?

Consider the first line, struct student me = create( 920348, "sibin" ) ;. The return value from create_student_record() is a struct so the values of the original struct, created inside the function, are copied over to the the one in main, i.e., me. Except, when copying over the _name variables, we’re doing a shallow copy since we’re only copying the pointers and not the underlying data (names in this case)!

To avoid this problem, let’s return a pointer to struct student, as follows:

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

// a macro to define the largest size for a name

#define MAX_NAME_SIZE 256

struct student{

unsigned int _gwid ;

char* _name ;

} ;

// copied struct but shallow copy returned (for _name)

struct student create_student_record( unsigned int gwid, char* name )

{

struct student new_student ;

new_student._gwid = gwid ;

new_student._name = (char*)malloc( sizeof(char)*MAX_NAME_SIZE ) ;

strcpy( new_student._name, name ) ; // deep copy

return new_student ;

}

// return a pointer to the struct to avoid shallow copy

// note: we're returning a pointer to the ORIGINAL memory that was created

struct student* create_student_record_pointer( unsigned int gwid, const char* name )

{

struct student* pnew_student = (struct student*) malloc( sizeof(struct student) ) ;

pnew_student->_gwid = gwid ;

pnew_student->_name = (char*)malloc( sizeof(char)*MAX_NAME_SIZE ) ;

strcpy( pnew_student->_name, name ) ;

return pnew_student ;

}

int main()

{

struct student* pme = create_student_record_pointer( 920348, "sibin" ) ; // returning/storing a pointer

// An array of POINTERS to struct

struct student* pstudent_records[4] ;

// Use the (pointer) create INTERFACE to fill the array

pstudent_records[0] = create_student_record_pointer( 123, "ABC" ) ;

pstudent_records[1] = create_student_record_pointer( 456, "DEF" ) ;

pstudent_records[2] = create_student_record_pointer( 789, "GHI" ) ;

pstudent_records[3] = create_student_record_pointer( 987, "JKL" ) ;

printf( "\n" ) ;

return 0 ;

}Program output:

This code should work. In fact, if we want tp print the records, we can define an interface for that as well.

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

// a macro to define the largest size for a name

#define MAX_NAME_SIZE 256

struct student{

unsigned int _gwid ;

char* _name ;

} ;

// return a pointer to the struct to avoid shallow copy

// note: we're returning a pointer to the ORIGINAL memory that was created

struct student* create_student_record_pointer( unsigned int gwid, const char* name )

{

struct student* pnew_student = (struct student*) malloc( sizeof(struct student) ) ;

pnew_student->_gwid = gwid ;

pnew_student->_name = (char*)malloc( sizeof(char)*MAX_NAME_SIZE ) ;

strcpy( pnew_student->_name, name ) ;

return pnew_student ;

}

// interface to print a SINGLE student record, given a pointer to it

void print_student_record( const struct student* record )

{

printf( "gwid = %d\t name = %s\n", record->_gwid, record->_name ) ;

}

int main()

{

struct student* pme = create_student_record_pointer( 920348, "sibin" ) ; // returning/storing a pointer

// An array of POINTERS to struct

struct student* pstudent_records[4] ;

// Use the (pointer) create INTERFACE to fill the array

pstudent_records[0] = create_student_record_pointer( 123, "ABC" ) ;

pstudent_records[1] = create_student_record_pointer( 456, "DEF" ) ;

pstudent_records[2] = create_student_record_pointer( 789, "GHI" ) ;

pstudent_records[3] = create_student_record_pointer( 987, "JKL" ) ;

// UGLY way to print, call the interface instead

printf( "\nAll Student Records (FOR LOOP):\n----------------------\nGIWD \t Name\n" ) ;

for(unsigned int i = 0 ; i < 4 ; ++i)

printf( "Student record %d: gwid = %d\t name = %s\n", i,

pstudent_records[i]->_gwid, pstudent_records[i]->_name ) ;

printf( "\nAll Student Records (INTERFACE):\n----------------------\nGIWD \t Name\n" ) ;

// print using INTERFACE -- same effect as above printf

for(unsigned int i = 0 ; i < 4 ; ++i)

{

printf( "Student record %d: ", i ) ;

print_student_record( pstudent_records[i] ) ;

}

printf( "\n" ) ;

return 0 ;

}Program output:

All Student Records (FOR LOOP):

----------------------

GIWD Name

Student record 0: gwid = 123 name = ABC

Student record 1: gwid = 456 name = DEF

Student record 2: gwid = 789 name = GHI

Student record 3: gwid = 987 name = JKL

All Student Records (INTERFACE):

----------------------

GIWD Name

Student record 0: gwid = 123 name = ABC

Student record 1: gwid = 456 name = DEF

Student record 2: gwid = 789 name = GHI

Student record 3: gwid = 987 name = JKL

There still remains a serious problem with this code (even though it compiles and runs). There are lots of memory leaks! We need to call free() to release the memory back to the system once we are done with it. In this case, at the end of the main() function.

So, will this work?

It won’t work since we’re only releasing the memory for the array. Remember we used malloc() for each of the following: * each struct student* in the array * each char* _name in each of the structs thus allocated!

A lot of memory will leak. Hence, we need to carefully release all of it.

// FIRST, free the memory for _name

free(pstudent_records[0]->_name) ;

// SECOND, free the struct

free(pstudent_records[0]) ;

// REPEAT for all

free(pstudent_records[1]->_name) ;

free(pstudent_records[1]) ;

free(pstudent_records[2]->_name) ;

free(pstudent_records[2]) ;

free(pstudent_records[3]->_name) ;

free(pstudent_records[4]) ;But this is fairly ugly code as well – and quite unmanageable for a large number of records. As before, we create a new interface, delete_student_record() to properly delete a record

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

// a macro to define the largest size for a name

#define MAX_NAME_SIZE 256

struct student{

unsigned int _gwid ;

char* _name ;

} ;

// return a pointer to the struct to avoid shallow copy

// note: we're returning a pointer to the ORIGINAL memory that was created

struct student* create_student_record_pointer( unsigned int gwid, const char* name )

{

struct student* pnew_student = (struct student*) malloc( sizeof(struct student) ) ;

pnew_student->_gwid = gwid ;

pnew_student->_name = (char*)malloc( sizeof(char)*MAX_NAME_SIZE ) ;

strcpy( pnew_student->_name, name ) ;

return pnew_student ;

}

// interface to print a SINGLE student record, given a pointer to it

void print_student_record( const struct student* record )

{

// WHY is this 'const'?

printf( "gwid = %d\t name = %s\n", record->_gwid, record->_name ) ;

}

void delete_student_record( struct student* pstudent )

{

print_student_record( pstudent ) ; // reuse the print interface!

free(pstudent->_name) ;

free(pstudent) ;

}

int main()

{

struct student* pme = create_student_record_pointer( 920348, "sibin" ) ; // returning/storing a pointer

// An array of POINTERS to struct

struct student* pstudent_records[4] ;

// Use the (pointer) create INTERFACE to fill the array

pstudent_records[0] = create_student_record_pointer( 123, "ABC" ) ;

pstudent_records[1] = create_student_record_pointer( 456, "DEF" ) ;

pstudent_records[2] = create_student_record_pointer( 789, "GHI" ) ;

pstudent_records[3] = create_student_record_pointer( 987, "JKL" ) ;

// UGLY way to print, call the interface instead

printf( "\nAll Student Records (FOR LOOP):\n----------------------\nGIWD \t Name\n" ) ;

for(unsigned int i = 0 ; i < 4 ; ++i)

printf( "Student record %d: gwid = %d\t name = %s\n", i,

pstudent_records[i]->_gwid, pstudent_records[i]->_name ) ;

// print using INTERFACE -- same effect as above printf

printf( "\nAll Student Records (INTERFACE):\n----------------------\nGIWD \t Name\n" ) ;

for(unsigned int i = 0 ; i < 4 ; ++i)

{

printf( "Student record %d: ", i ) ;

print_student_record( pstudent_records[i] ) ;

}

// Use the DELETE interface to PROPERLY clean up memory

printf( "\nDeleting all Student Records (INTERFACE):\n----------------------\nGIWD \t Name\n" ) ;

for(unsigned int i = 0 ; i < 4 ; ++i )

{

// send each element of the array to be "cleaned up

delete_student_record( pstudent_records[i] ) ;

}

// ALL Memory properly released!

printf( "\n" ) ;

return 0 ;

}Program output:

All Student Records (FOR LOOP):

----------------------

GIWD Name

Student record 0: gwid = 123 name = ABC

Student record 1: gwid = 456 name = DEF

Student record 2: gwid = 789 name = GHI

Student record 3: gwid = 987 name = JKL

All Student Records (INTERFACE):

----------------------

GIWD Name

Student record 0: gwid = 123 name = ABC

Student record 1: gwid = 456 name = DEF

Student record 2: gwid = 789 name = GHI

Student record 3: gwid = 987 name = JKL

Deleting all Student Records (INTERFACE):

----------------------

GIWD Name

gwid = 123 name = ABC

gwid = 456 name = DEF

gwid = 789 name = GHI

gwid = 987 name = JKL

Remember that properly designing and using programmatic interfaces is a critical part of system design and software development. All of the system calls, e.g., printf(), malloc(), etc. are interfaces that we use to interact with the system, i.e., the operating system!

4.5.4 Interface Design Issues | Return “by value” and “by reference”

As mentioned earlier, returning a struct object from a function (say create_student_record) can result in some problems such as shallow copy. There is another serious problem – performance!

Consider the following code (name the file return_value.c):

/*

* CSC 2410 Code Sample

* interface design | return by VALUE

* Fall 2024

* (c) Sibin Mohan

*/

#include <stdio.h>

#include <stdlib.h>

#define MAX_VALUE 1000

struct value

{

char _array[MAX_VALUE] ;

} ;

struct value create_value()

{

struct value new_value ;

return new_value ;

}

int main( int argc, char* argv[] )

{

if( argc != 2 )

{

printf( "usage: ./return_value <size_of_loop>\n\n" ) ;

return -1 ;

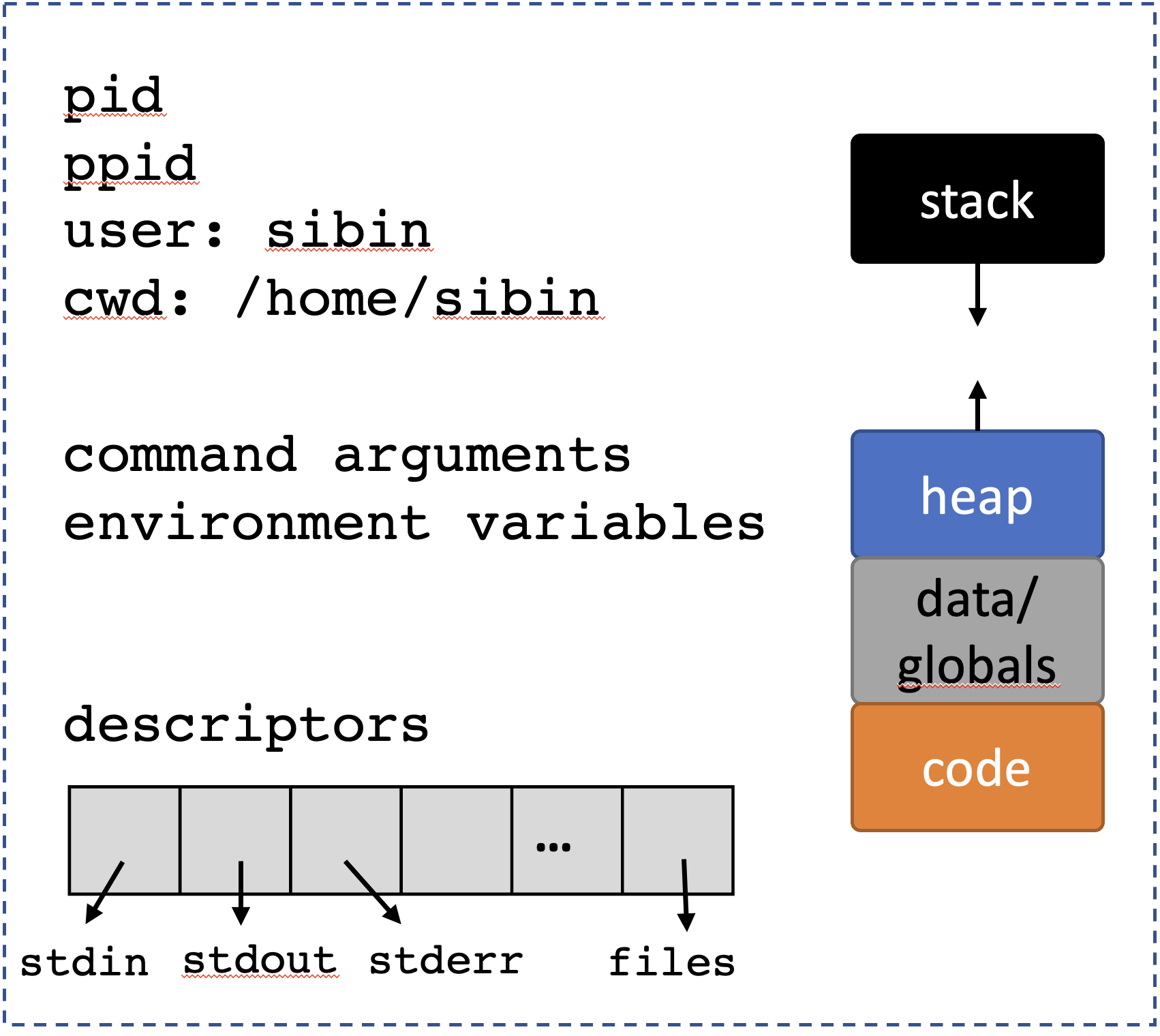

}